1. Open the Read History from File process.

2. On the Start tab of the Start step, select the same Secure Agent that you selected in the Run On field for the FileConnectionChatHistorydefault connection.

3. Save and publish the process.

4. Open the Write Chat History in File process.

5. Optionally, in the Prepare History to save in File step, click the Assignments tab. Open the Expression Editor for the File_Name field and enter the file name format to use when saving the file.

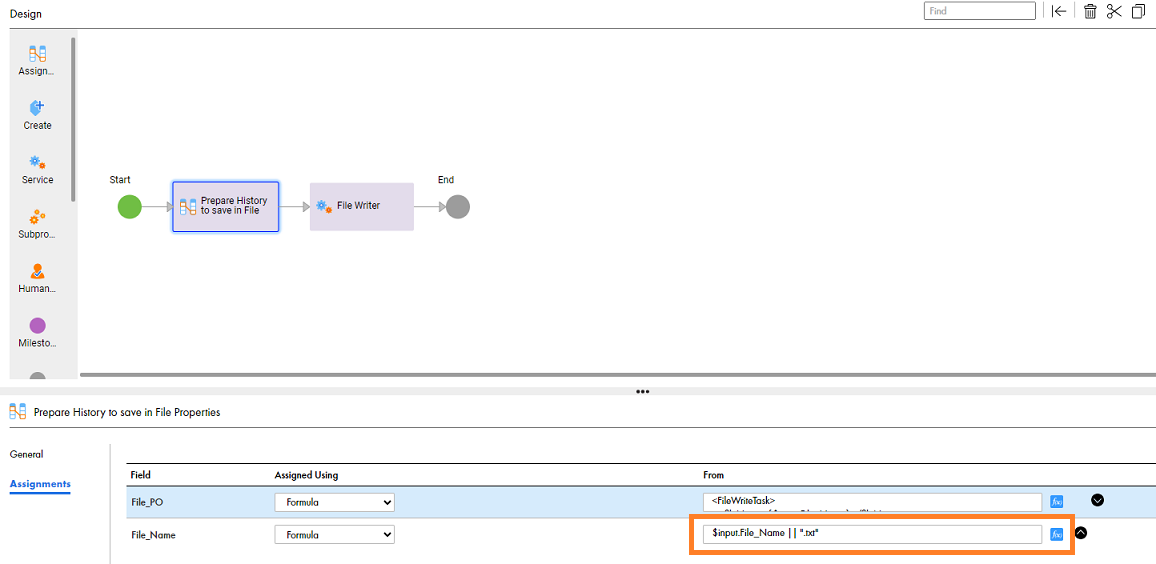

The following image shows the assignments of the File_Name input field:

6. Save and publish the process.

7. Open the Chat with History parent process.

8. On the Temp Fields tab of the Start step, enter values for the following fields:

- In the api_version field, enter the API version of the LLM. The default is 2024-06-01. You can optionally change this value.

- In the deployment_id field, enter the user-specific deployment ID.
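To make the role of these two fields concrete, the following Python sketch shows how a deployment ID and an API version typically appear in an Azure OpenAI chat completions URL. It is an illustration only: the function name, the `my-resource` resource name, and the `my-gpt-deployment` deployment ID are hypothetical, and the assumption that the process composes its request this way is not confirmed by this guide.

```python
from urllib.parse import urlencode


def build_chat_completions_url(resource_name: str, deployment_id: str,
                               api_version: str = "2024-06-01") -> str:
    """Compose an Azure OpenAI chat completions URL.

    resource_name is a hypothetical Azure OpenAI resource name;
    deployment_id and api_version correspond to the two temp fields.
    """
    base = f"https://{resource_name}.openai.azure.com"
    path = f"/openai/deployments/{deployment_id}/chat/completions"
    query = urlencode({"api-version": api_version})
    return f"{base}{path}?{query}"


# Hypothetical values, shown only to illustrate where each field ends up.
url = build_chat_completions_url("my-resource", "my-gpt-deployment")
print(url)
```

The deployment ID selects which deployed model receives the request, and the API version pins the request and response schema, which is why both values must match your Azure OpenAI setup.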

9. Optionally, in the Configure Request Parameters step, click the Assignments tab. Open the Expression Editor for the Prompt_Configuration field and enter the prompt instructions as shown in the following sample code:

    <generationConfig_AzureAI>
      <max_tokens>200</max_tokens>
      <temperature>1</temperature>
      <topP>1</topP>
    </generationConfig_AzureAI>
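If you prefer to generate this XML rather than type it by hand, a minimal Python sketch along these lines can produce the same element structure. The function name `build_prompt_configuration` is hypothetical, and the range checks (temperature between 0 and 2, topP between 0 and 1) are assumptions based on typical Azure OpenAI limits rather than limits stated in this guide.

```python
import xml.etree.ElementTree as ET


def build_prompt_configuration(max_tokens: int = 200,
                               temperature: float = 1,
                               top_p: float = 1) -> str:
    """Return the generationConfig_AzureAI XML as a string."""
    # Assumed limits; adjust them if your deployment documents different ones.
    if max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")
    if not 0 <= temperature <= 2:
        raise ValueError("temperature is assumed to lie between 0 and 2")
    if not 0 <= top_p <= 1:
        raise ValueError("topP is assumed to lie between 0 and 1")

    root = ET.Element("generationConfig_AzureAI")
    for tag, value in (("max_tokens", max_tokens),
                       ("temperature", temperature),
                       ("topP", top_p)):
        ET.SubElement(root, tag).text = str(value)
    # encoding="unicode" returns a str without an XML declaration.
    return ET.tostring(root, encoding="unicode")


config_xml = build_prompt_configuration()
print(config_xml)
```

The resulting string can be pasted into the Expression Editor for the Prompt_Configuration field.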

For the Prompt_Configuration field, enter values for the following properties:

| Property | Description |
|---|---|
| max_tokens | Defines the maximum number of tokens that the model can generate in its response. Setting a limit keeps the response concise and within the desired length. |
| temperature | Controls the randomness of the model's output. A lower value makes the output more deterministic, while a higher value increases randomness and creativity. For example, a temperature of 0.9 balances deterministic and creative output. |
| topP | An alternative to sampling with temperature, in which the model considers only the most probable tokens whose cumulative probability reaches topP. For example, if topP is set to 0.1, the model considers only the tokens that make up the top 10% of the probability mass at each step. |
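To show how temperature and topP shape token selection, the following Python sketch applies both to a toy set of four token scores. It is a simplified illustration of the general sampling technique, not the model's actual implementation, and the function names and scores are made up for the example.

```python
import math


def apply_temperature(logits, temperature):
    """Convert raw scores to probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the peak for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most probable tokens until their cumulative probability
    reaches top_p, then renormalize the kept probabilities."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


logits = [2.0, 1.0, 0.5, 0.1]             # toy scores for four candidate tokens
sharp = apply_temperature(logits, 0.5)    # low temperature: more deterministic
flat = apply_temperature(logits, 1.5)     # high temperature: more random
print(max(sharp) > max(flat))

probs = apply_temperature(logits, 1.0)
print(sorted(top_p_filter(probs, 0.5)))   # indices of tokens that survive topP = 0.5
print(sorted(top_p_filter(probs, 0.7)))   # a larger topP keeps more tokens
```

A lower temperature concentrates probability on the top token, while a smaller topP shrinks the set of candidate tokens. Many providers suggest adjusting one of the two settings rather than both.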

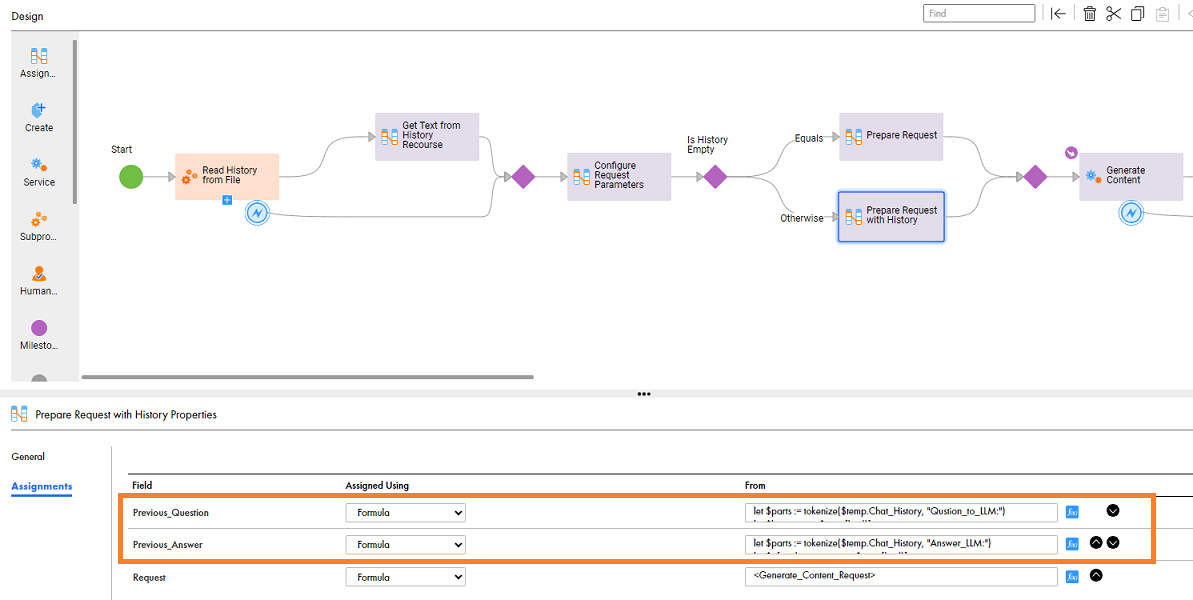

The following image shows the assignments of the Previous_Question and Previous_Answer input fields:

10. Save and publish the process.