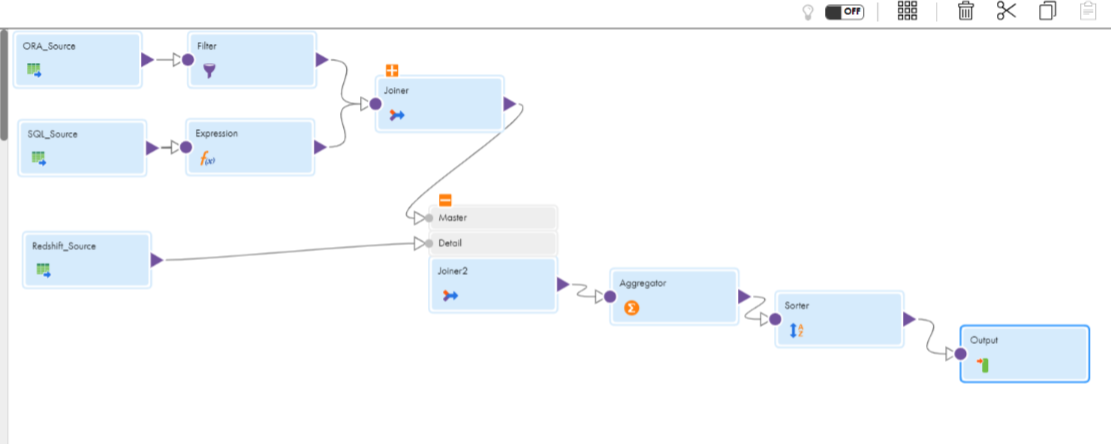

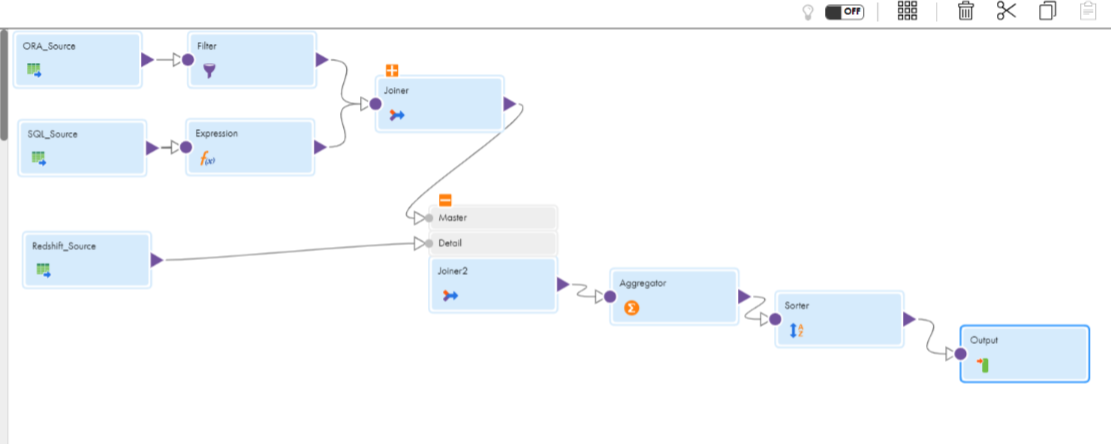

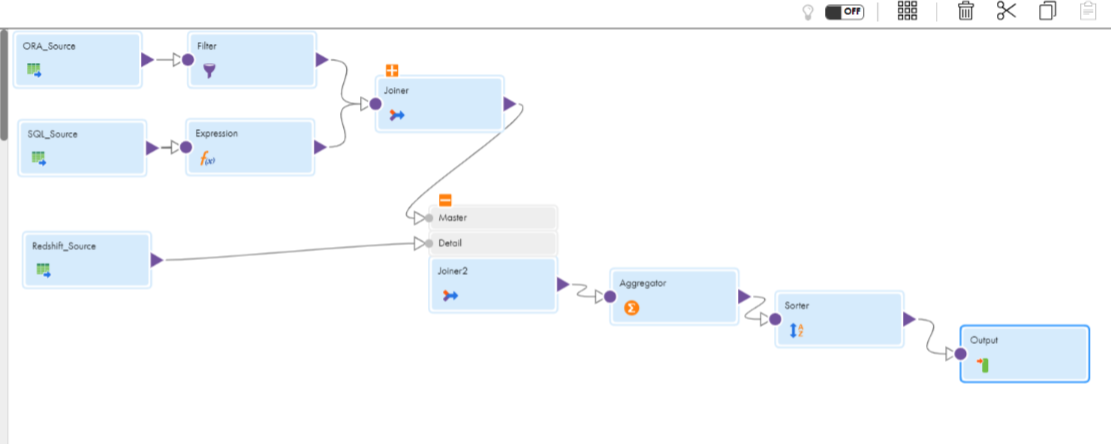

The following image shows a source mapplet that has multiple source connections, transformations, and a mapplet output:

Role | Create | Read | Update | Delete | Run | Set Permissions | Features |

|---|---|---|---|---|---|---|---|

Admin | X | X | X | X | X | X | Compare Columns, Compare Data Profiling Runs, Data Profiling results - view, Drill down, Export Data Profiling Results, Manage Rules, Query - Create, Query - Submit, Operational Insights - view, Sensitive Data - view |

Data Integration Data Previewer* | X | Data Integration - Data Preview | |||||

Designer | X | X | X | X | X | X | Compare Columns, Compare Data Profiling Runs, Data Profiling results - view, Drill down, Export Data Profiling Results, Manage Rules, Query - Create, Query - Submit, Sensitive Data - view |

Monitor | X | Compare Columns, Compare Data Profiling Runs, Data Profiling results - view | |||||

Operator | X | Compare Columns, Compare Data Profiling Runs, Data Profiling results - view, Operational Insights - view | |||||

*In addition to the Data Integration Data Previewer role, you also need the Admin or Designer role to view the Data Preview tab.

Privilege | Description |

|---|---|

Data Profiling | Create, read, update, delete, run, and set permissions for a data profiling task. |

Data Profiling - Compare Columns | Compare columns in a profile run. |

Data Profiling - Compare Data Profiling Runs | Compare multiple profile runs. |

Data Profiling - Data Profiling Results - View* |

|

Data Profiling - Drill down* | View and select the drill-down option when you create a data profiling task. |

Data Profiling - Export Data Profiling Results | Export the profiling results to a Microsoft Excel file. |

Data Profiling - Manage Rules | Add or delete rules for a data profiling task. |

Data Profiling - Query - Create | Create a query. |

Data Profiling - Query - Submit | Run a query and view query results. |

Data Integration - Data Preview | View source object data in the Data Preview area. |

Data Profiling Sensitive Data - view | Hide sensitive information for a particular user role. When the Sensitive Data - view privilege is configured, you cannot view the minimum value, maximum value, and most frequent values information in the compare column tab. |

Data Profiling Disable Data Value Storage | Does not store minimum, maximum, and most frequent values in the profiling warehouse. When you configure the Disable Data Value Storage feature, the sensitive information is not stored in the profile results and the source system. The values are not stored even if you have permissions to view the sensitive data, or if you configure a profiling task with the Maximum Number of Value Frequency Pairs option. By default, this feature is disabled. When the feature is disabled, the values are stored as expected. |

* To perform drill down, and to view the Preview of Successful Rows and Preview of Unsuccessful Rows in a Data Governance and Catalog scorecard, you need the following privileges:

| |

Features | Custom role | Administrator or Designer | Result |

|---|---|---|---|

Disable Data Value Storage | Inactive | Active | Sensitive information is not stored. |

Disable Data Value Storage | Active | Inactive | Sensitive information is not stored. |

Sensitive Data - view | Inactive | Active | Sensitive information is displayed. |

Sensitive Data - view | Active | Inactive | Sensitive information is displayed. |

Connections | Supported source object |

|---|---|

Amazon Athena | Amazon Athena |

Amazon Redshift V2 | Amazon Redshift |

Amazon S3 v2 | Amazon S3 |

Azure Data Lake Store Gen2 | Azure Data Lake Store |

Flat file | Flat file |

Databricks Delta | Delta tables and external tables in Delta format. Also supports Databricks Unity Catalog. |

Google BigQuery V2 | Google BigQuery |

Google Cloud Storage V2 | Google Cloud Storage |

JDBC V2 | Applicable for the data sources that are not supported with native driver and have a compliant Type 4 JDBC driver. |

Mapplet | Source mapplets |

MariaDB | MySQL |

Microsoft Azure Synapse SQL | Azure Synapse SQL |

Microsoft Fabric Data Warehouse | Microsoft Fabric Data Warehouse |

Microsoft SQL Server | Microsoft SQL Server, Azure SQL Database |

MySQL | MySQL |

ODBC | Applicable for the data sources that are not supported with a native driver and that have an ODBC-compliant driver. |

Oracle | Oracle |

Oracle Cloud Object Storage | Oracle Cloud Object Storage |

PostgreSQL | PostgreSQL |

Salesforce | Salesforce Sales Cloud, Salesforce Service Cloud, applications on Force.com, and Salesforce Verticals, which include Salesforce Health Cloud and Salesforce Financial Cloud. |

SAP BW Reader | SAP BW |

SAP HANA | SAP HANA |

SAP Table | SAP ERP and SAP S/4 HANA |

Snowflake Data Cloud | Snowflake Data Cloud |

Property | Description |

|---|---|

Connection Name | Name of the connection. Each connection name must be unique within the organization. Connection names can contain alphanumeric characters, spaces, and the following special characters: _ . + - Maximum length is 255 characters. |

Description | Description of the connection. Maximum length is 4000 characters. |

Type | The Amazon Athena connection type. |

Runtime Environment | Name of the runtime environment where you want to run the tasks. Select a Secure Agent, Hosted Agent, or serverless runtime environment. |

Authentication Type | The authentication mechanism to connect to Amazon Athena. Select Permanent IAM Credentials or EC2 instance profile. Permanent IAM credentials is the default authentication mechanism. Permanent IAM requires an access key and secret key to connect to Amazon Athena. Use the EC2 instance profile when the Secure Agent is installed on an Amazon Elastic Compute Cloud (EC2) system. This way, you can configure AWS Identity and Access Management (IAM) authentication to connect to Amazon Athena. For more information about authentication, see Prepare for authentication. |

Access Key | Optional. The access key to connect to Amazon Athena. |

Secret Key | Optional. The secret key to connect to Amazon Athena. |

JDBC URL | The URL of the Amazon Athena connection. Enter the JDBC URL in the following format: jdbc:awsathena://AwsRegion=<region_name>;S3OutputLocation=<S3_Output_Location>; You can use pagination to fetch the Amazon Athena query results. Set the property UseResultsetStreaming=0 to use pagination. Enter the property in the following format: jdbc:awsathena://AwsRegion=<region_name>;S3OutputLocation=<S3_Output_Location>;UseResultsetStreaming=0; You can also use streaming to improve the performance and fetch the Amazon Athena query results faster. When you use streaming, ensure that port 444 is open. By default, streaming is enabled. |

Customer Master Key ID | Optional. Specify the customer master key ID generated by AWS Key Management Service (AWS KMS) or the Amazon Resource Name (ARN) of your custom key for cross-account access. You must generate the customer master key ID for the same region where your Amazon S3 bucket resides. You can either specify the customer-generated customer master key ID or the default customer master key ID. |
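
The JDBC URL format described in the table above can be assembled programmatically. The following is a minimal sketch, not part of the product: the function name `build_athena_jdbc_url` and its parameters are hypothetical, and only the URL layout and the `UseResultsetStreaming=0` property come from the table.

```python
def build_athena_jdbc_url(region: str, s3_output_location: str,
                          use_streaming: bool = True) -> str:
    """Assemble an Amazon Athena JDBC URL in the documented format.

    Hypothetical helper for illustration only.
    """
    if not region or not s3_output_location:
        raise ValueError("region and s3_output_location are required")
    url = (
        f"jdbc:awsathena://AwsRegion={region};"
        f"S3OutputLocation={s3_output_location};"
    )
    if not use_streaming:
        # Streaming is enabled by default; this property switches to pagination.
        url += "UseResultsetStreaming=0;"
    return url


if __name__ == "__main__":
    print(build_athena_jdbc_url("us-west-2", "s3://my-bucket/results"))
    print(build_athena_jdbc_url("us-west-2", "s3://my-bucket/results",
                                use_streaming=False))
```

When streaming is left enabled, remember that the table requires port 444 to be open.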

Property | Value |

|---|---|

Runtime Environment | Name of the runtime environment where you want to run the tasks. Specify a Secure Agent or serverless runtime environment. Note: You cannot run a database ingestion task on a serverless runtime environment. |

Username | Enter the user name for the Amazon Redshift account. |

Password | Enter the password for the Amazon Redshift account. |

Access Key ID | Access key to access the Amazon S3 bucket. Provide the access key value based on the following authentication methods:

If you want to use the connection for a database ingestion task, you must use the basic authentication method to provide the access key value. |

Secret Access Key | Secret access key to access the Amazon S3 bucket. The secret key is associated with the access key and uniquely identifies the account. Provide the access key value based on the following authentication methods:

If you want to use the connection for a database ingestion task, you must provide the actual access secret value. |

¹ IAM Role ARN | The Amazon Resource Name (ARN) of the IAM role assumed by the user to use the dynamically generated temporary security credentials. Set the value of this property if you want to use the temporary security credentials to access the AWS resources. You cannot use the temporary security credentials in streaming ingestion tasks. For more information about how to obtain the ARN of the IAM role, see the AWS documentation. |

¹ External Id | Optional. Specify the external ID for a more secure access to the Amazon S3 bucket when the Amazon S3 bucket is in a different AWS account. |

¹ Use EC2 Role to Assume Role | Optional. Select the check box to enable the EC2 role to assume another IAM role specified in the IAM Role ARN option. Note: The EC2 role must have a policy attached with a permission to assume an IAM role from the same or different account. By default, the Use EC2 Role to Assume Role check box is not selected. |

¹ Master Symmetric Key | Optional. Provide a 256-bit AES encryption key in the Base64 format when you enable client-side encryption. You can generate a key using a third-party tool. |

JDBC URL | The URL of the Amazon Redshift V2 connection. Enter the JDBC URL in the following format: jdbc:redshift://<amazon_redshift_host>:<port_number>/<database_name> |

¹ Cluster Region | Optional. The AWS cluster region in which the bucket you want to access resides. Select a cluster region if you choose to provide a custom JDBC URL that does not contain a cluster region name in the JDBC URL connection property. If you specify a cluster region in both Cluster Region and JDBC URL connection properties, the Secure Agent ignores the cluster region that you specify in the JDBC URL connection property. To use the cluster region name that you specify in the JDBC URL connection property, select None as the cluster region in this property. Select one of the following cluster regions:

Default is None. You can only read data from or write data to the cluster regions supported by AWS SDK used by the connector. |

¹ Customer Master Key ID | Optional. Specify the customer master key ID generated by AWS Key Management Service (AWS KMS) or the ARN of your custom key for cross-account access. Note: Cross-account access is not applicable to an advanced cluster. You must generate the customer master key ID for the same region where your Amazon S3 bucket resides. You can either specify the customer-generated customer master key ID or the default customer master key ID. |

¹ Does not apply to version 2021.07.M
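
As an illustration of the Amazon Redshift V2 JDBC URL format listed above, the sketch below fills in the three placeholders. The helper name `build_redshift_jdbc_url` and the sample host are assumptions made for this example.

```python
def build_redshift_jdbc_url(host: str, port: int, database: str) -> str:
    """Fill the jdbc:redshift://<host>:<port>/<database> template.

    Hypothetical helper for illustration only.
    """
    if not host or not database:
        raise ValueError("host and database are required")
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return f"jdbc:redshift://{host}:{port}/{database}"


if __name__ == "__main__":
    # The host below is a made-up example value.
    print(build_redshift_jdbc_url(
        "example-cluster.abc123.us-west-2.redshift.amazonaws.com", 5439, "dev"))
```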

Property | Value |

|---|---|

Runtime Environment | Name of the runtime environment where you want to run the tasks. Specify a Secure Agent or serverless runtime environment. |

Access Key | Access key to access the Amazon S3 bucket. |

Secret Key | Secret key to access the Amazon S3 bucket. The secret key is associated with the access key and uniquely identifies the account. |

Folder Path | Bucket name or complete folder path to the Amazon S3 objects. Do not use a slash at the end of the folder path. For example, <bucket name>/<my folder name>. |

Region Name | The AWS region of the bucket that you want to access. Select one of the following regions:

Default is US East (N. Virginia). |

IAM Role ARN | The Amazon Resource Name (ARN) of the AWS Identity and Access Management (IAM) role assumed by the user to use the dynamically generated temporary security credentials. Enter the ARN value if you want to use the temporary security credentials to access AWS resources. This property is not applicable to an application ingestion and replication task. Note: Even if you remove the IAM role that grants the agent access to the Amazon S3 bucket, the test connection is successful. For more information about how to get the ARN of the IAM role, see the AWS documentation. |

External ID | The external ID of your AWS account. External ID provides a more secure access to the Amazon S3 bucket when the Amazon S3 bucket is in a different AWS account. |

Use EC2 Role to Assume Role | Enables the EC2 role to assume another IAM role specified in the IAM Role ARN option. This property is required only if you use the IAM role authentication mechanism on an advanced cluster. The EC2 role must have a policy attached with permissions to assume an IAM role from the same or a different account. Before you configure IAM role authentication on an advanced cluster, perform the following tasks:

For information on how to set up an advanced cluster on AWS, see Advanced Clusters in the Administrator documentation. Note: For more information about AssumeRole for Amazon S3, see the Informatica How-To Library article. |
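
The Folder Path rule above (no slash at the end of the path) is easy to enforce before a value is entered in the connection. The following sketch assumes a hypothetical helper named `normalize_folder_path`. It is not a product API.

```python
def normalize_folder_path(path: str) -> str:
    """Return the folder path without trailing slashes.

    Hypothetical helper: the connection expects
    <bucket name>/<my folder name> with no slash at the end.
    """
    cleaned = path.strip().rstrip("/")
    if not cleaned:
        raise ValueError("folder path must contain at least a bucket name")
    return cleaned


if __name__ == "__main__":
    print(normalize_folder_path("my-bucket/my-folder/"))
```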

Property | Value |

|---|---|

Runtime Environment | The name of the runtime environment where you want to run the tasks. Specify a Secure Agent or serverless runtime environment. |

AccountName | Microsoft Azure Data Lake Storage Gen2 account name or the service name. |

Client ID | The ID of your application to complete the OAuth Authentication in the Azure Active Directory (AD). |

Client Secret | The client secret key to complete the OAuth Authentication in the Azure AD. |

Tenant ID | The Directory ID of the Azure AD. |

File System Name | The name of an existing file system in the Microsoft Azure Data Lake Storage Gen2 account. |

Property | Description |

|---|---|

Connection Name | Name of the connection. Each connection name must be unique within the organization. Connection names can contain alphanumeric characters, spaces, and the following special characters: _ . + - Maximum length is 255 characters. |

Description | Description of the connection. Maximum length is 4000 characters. |

Use Secret Vault | Stores sensitive credentials for this connection in the secrets manager that is configured for your organization. This property appears only if secrets manager is set up for your organization. When you enable the secret vault in the connection, you can select which credentials the Secure Agent retrieves from the secrets manager. If you don't enable this option, the credentials are stored in the repository or on a local Secure Agent, depending on how your organization is configured. Note: If you’re using this connection to apply data access policies through pushdown or proxy services, you cannot use the Secret Vault configuration option. For information about how to configure and use a secrets manager, see Secrets manager configuration. |

Runtime Environment | The name of the runtime environment where you want to run tasks. Select a Secure Agent, Hosted Agent, serverless, or elastic runtime environment. Hosted Agent is not applicable for mappings in advanced mode. |

SQL Warehouse JDBC URL | Databricks SQL Warehouse JDBC connection URL. This property is required only for Databricks SQL warehouse. Doesn't apply to all-purpose cluster and job cluster. To get the SQL Warehouse JDBC URL, go to the Databricks console and select the JDBC driver version from the JDBC URL menu. Specify the JDBC URL for Databricks JDBC driver version 2.6.25 or later in the following format: jdbc:databricks://<Databricks Host>:443/default;transportMode=http;ssl=1;AuthMech=3;httpPath=/sql/1.0/endpoints/<SQL endpoint cluster ID>; Ensure that you set the required environment variables in the Secure Agent. Also specify the correct JDBC Driver Class Name under advanced connection settings. Note: Specify the database name in the Database Name connection property. If you specify the database name in the JDBC URL, it is not considered. |
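
The SQL Warehouse JDBC URL format above, for Databricks JDBC driver version 2.6.25 or later, can be sketched as a small template function. The name `build_sql_warehouse_jdbc_url` and the sample values are assumptions. Only the URL layout is taken from the table.

```python
def build_sql_warehouse_jdbc_url(databricks_host: str, endpoint_id: str) -> str:
    """Fill the documented SQL warehouse JDBC URL template.

    Hypothetical helper for illustration only. The database name is left as
    'default' because the table states that a database name in the URL is
    not considered; set it in the Database Name connection property instead.
    """
    if not databricks_host or not endpoint_id:
        raise ValueError("databricks_host and endpoint_id are required")
    return (
        f"jdbc:databricks://{databricks_host}:443/default;"
        "transportMode=http;ssl=1;AuthMech=3;"
        f"httpPath=/sql/1.0/endpoints/{endpoint_id};"
    )


if __name__ == "__main__":
    # Both arguments are made-up example values.
    print(build_sql_warehouse_jdbc_url(
        "dbc-example.cloud.databricks.com", "abc123def456"))
```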

Property | Description |

|---|---|

Database | The database name that you want to connect to in Databricks Delta. Optional for SQL warehouse and Databricks cluster. For Data Integration, if you do not provide a database name, all databases available in the workspace are listed. The value you provide here overrides the database name provided in the SQL Warehouse JDBC URL connection property. By default, all databases available in the workspace are listed. |

JDBC Driver Class Name | The name of the JDBC driver class. Optional for SQL warehouse and Databricks cluster. For JDBC URL versions 2.6.22 or earlier, specify the driver class name as com.simba.spark.jdbc.Driver. For JDBC URL versions 2.6.25 or later, specify the driver class name as com.databricks.client.jdbc.Driver. Specify the driver class name as com.simba.spark.jdbc.Driver for the data loader task. For application ingestion and database ingestion tasks, specify the driver class name as: com.databricks.client.jdbc.Driver |

Staging Environment | The cloud provider where the Databricks cluster is deployed. Required for SQL warehouse and Databricks cluster. Select one of the following options:

Default is Personal Staging Location. You can select the Personal Staging Location as the staging environment instead of Azure or AWS staging environments to stage data locally for mappings and tasks. Personal staging location doesn't apply to Databricks cluster. Note: You cannot switch between clusters once you establish a connection. |

Databricks Host | The host name of the endpoint the Databricks account belongs to. Required for Databricks cluster. Doesn't apply to SQL warehouse. You can get the Databricks Host from the JDBC URL. The URL is available in the Advanced Options of JDBC or ODBC in the Databricks Delta all-purpose cluster. The following example shows the Databricks Host in JDBC URL: jdbc:spark://<Databricks Host>:443/default;transportMode=http;ssl=1;httpPath=sql/protocolv1/o/<Org Id>/<Cluster ID>;AuthMech=3;UID=token;PWD=<personal-access-token> The value of PWD in Databricks Host, Organization Id, and Cluster ID is always <personal-access-token>. Doesn't apply to a data loader task. |

Cluster ID | The ID of the cluster. Required for Databricks cluster. Doesn't apply to SQL warehouse. You can get the cluster ID from the JDBC URL. The URL is available in the Advanced Options of JDBC or ODBC in the Databricks Delta all-purpose cluster. The following example shows the Cluster ID in JDBC URL: jdbc:spark://<Databricks Host>:443/default;transportMode=http;ssl=1;httpPath=sql/protocolv1/o/<Org Id>/<Cluster ID>;AuthMech=3;UID=token;PWD=<personal-access-token> Doesn't apply to a data loader task. |

Organization ID | The unique organization ID for the workspace in Databricks. Required for Databricks cluster. Doesn't apply to SQL warehouse. You can get the Organization ID from the JDBC URL. The URL is available in the Advanced Options of JDBC or ODBC in the Databricks Delta all-purpose cluster. The following example shows the Organization ID in JDBC URL: jdbc:spark://<Databricks Host>:443/default;transportMode=http;ssl=1;httpPath=sql/protocolv1/o/<Organization ID>/<Cluster ID>;AuthMech=3;UID=token;PWD=<personal-access-token> Doesn't apply to a data loader task. |

Min Workers¹ | The minimum number of worker nodes to be used for the Spark job. Minimum value is 1. Required for Databricks cluster. Doesn't apply to SQL warehouse. Doesn't apply to a data loader task. |

Max Workers¹ | The maximum number of worker nodes to be used for the Spark job. If you don't want to autoscale, set Max Workers = Min Workers or don't set Max Workers. Optional for Databricks cluster. Doesn't apply to SQL warehouse. Doesn't apply to a data loader task. |

DB Runtime Version¹ | The version of Databricks cluster to spawn when you connect to Databricks cluster to process mappings. Required for Databricks cluster. Doesn't apply to SQL warehouse. Select the runtime version 9.1 LTS. Doesn't apply to a data loader task. |

Worker Node Type¹ | The worker node instance type that is used to run the Spark job. Required for Databricks cluster. Doesn't apply to SQL warehouse. For example, the worker node type for AWS can be i3.2xlarge. The worker node type for Azure can be Standard_DS3_v2. Doesn't apply to a data loader task. |

Driver Node Type¹ | The driver node instance type that is used to collect data from the Spark workers. Optional for Databricks cluster. Doesn't apply to SQL warehouse. For example, the driver node type for AWS can be i3.2xlarge. The driver node type for Azure can be Standard_DS3_v2. If you don't specify the driver node type, Databricks uses the value you specify in the worker node type field. Doesn't apply to a data loader task. |

Instance Pool ID¹ | The instance pool ID used for the Spark cluster. Optional for Databricks cluster. Doesn't apply to SQL warehouse. If you specify the Instance Pool ID to run mappings, the following connection properties are ignored:

Doesn't apply to a data loader task. |

Elastic Disk¹ | Enables the cluster to get additional disk space. Optional for Databricks cluster. Doesn't apply to SQL warehouse. Enable this option if the Spark workers are running low on disk space. Doesn't apply to a data loader task. |

Spark Configuration¹ | The Spark configuration to use in the Databricks cluster. Optional for Databricks cluster. Doesn't apply to SQL warehouse. The configuration must be in the following format: "key1"="value1";"key2"="value2";... For example, "spark.executor.userClassPathFirst"="False" Doesn't apply to a data loader task or to Mass Ingestion tasks. |

Spark Environment Variables¹ | The environment variables to export before launching the Spark driver and workers. Optional for Databricks cluster. Doesn't apply to SQL warehouse. The variables must be in the following format: "key1"="value1";"key2"="value2";... For example, "MY_ENVIRONMENT_VARIABLE"="true" Doesn't apply to a data loader task or to Mass Ingestion tasks. |

¹ Doesn't apply to mappings in advanced mode.
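
The table above says that the Databricks Host, Organization ID, and Cluster ID can all be read from the all-purpose cluster JDBC URL, and that Spark settings use a `"key"="value"` pair format separated by semicolons. The sketch below illustrates both under stated assumptions: the function names are hypothetical, the regular expression only covers the URL layout shown in the table, and the sample values are invented.

```python
import re
from typing import Dict, Mapping

# Matches the cluster JDBC URL layout shown in the table:
# jdbc:spark://<host>:443/default;...;httpPath=sql/protocolv1/o/<org>/<cluster>;...
_CLUSTER_URL = re.compile(
    r"^jdbc:spark://(?P<host>[^:/;]+):443/default;"
    r".*?httpPath=sql/protocolv1/o/(?P<org_id>[^/;]+)/(?P<cluster_id>[^/;]+);"
)


def parse_cluster_jdbc_url(url: str) -> Dict[str, str]:
    """Extract host, organization ID, and cluster ID from a cluster JDBC URL."""
    compact = re.sub(r"\s+", "", url)  # tolerate stray whitespace from copy/paste
    match = _CLUSTER_URL.search(compact)
    if match is None:
        raise ValueError("URL does not match the expected cluster JDBC layout")
    return match.groupdict()


def format_spark_pairs(pairs: Mapping[str, str]) -> str:
    """Render settings as "key1"="value1";"key2"="value2"."""
    return ";".join(f'"{key}"="{value}"' for key, value in pairs.items())


if __name__ == "__main__":
    sample = (
        "jdbc:spark://dbc-example.cloud.databricks.com:443/default;"
        "transportMode=http;ssl=1;httpPath=sql/protocolv1/o/1234567890/"
        "0123-456789-abcde;AuthMech=3;UID=token;PWD=<personal-access-token>"
    )
    print(parse_cluster_jdbc_url(sample))
    print(format_spark_pairs({"spark.executor.userClassPathFirst": "False"}))
```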

Property | Description |

|---|---|

ADLS Storage Account Name | The name of the Microsoft Azure Data Lake Storage account. |

ADLS Client ID | The ID of your application to complete the OAuth Authentication in the Active Directory. |

ADLS Client Secret | The client secret key to complete the OAuth Authentication in the Active Directory. |

ADLS Tenant ID | The ID of the Microsoft Azure Data Lake Storage directory that you use to write data. |

ADLS Endpoint | The OAuth 2.0 token endpoint from where authentication based on the client ID and client secret is completed. |

ADLS Filesystem Name | The name of an existing file system to store the Databricks data. |

ADLS Staging Filesystem Name | The name of an existing file system to store the staging data. |

Property | Description |

|---|---|

S3 Authentication Mode | The authentication mode to connect to Amazon S3. Select one of the following authentication modes:

This authentication mode applies only to SQL warehouse. |

S3 Access Key | The key to access the Amazon S3 bucket. |

S3 Secret Key | The secret key to access the Amazon S3 bucket. |

S3 Data Bucket | The existing S3 bucket to store the Databricks data. |

S3 Staging Bucket | The existing bucket to store the staging files. |

S3 VPC Endpoint Type¹ | The type of Amazon Virtual Private Cloud endpoint for Amazon S3. You can use a VPC endpoint to enable private communication with Amazon S3. Select one of the following options:

|

Endpoint DNS Name for S3¹ | The DNS name for the Amazon S3 interface endpoint. Replace the asterisk symbol with the bucket keyword in the DNS name. Enter the DNS name in the following format: bucket.<DNS name of the interface endpoint> For example, bucket.vpce-s3.us-west-2.vpce.amazonaws.com |

IAM Role ARN¹ | The Amazon Resource Name (ARN) of the IAM role assumed by the user to use the dynamically generated temporary security credentials. Set the value of this property if you want to use the temporary security credentials to access the Amazon S3 staging bucket. For more information about how to get the ARN of the IAM role, see the AWS documentation. |

Use EC2 Role to Assume Role¹ | Optional. Select the check box to enable the EC2 role to assume another IAM role specified in the IAM Role ARN option. The EC2 role must have a policy attached with a permission to assume an IAM role from the same or different AWS account. |

STS VPC Endpoint Type¹ | The type of Amazon Virtual Private Cloud endpoint for AWS Security Token Service. You can use a VPC endpoint to enable private communication with Amazon Security Token Service. Select one of the following options:

|

Endpoint DNS Name for AWS STS¹ | The DNS name for the AWS STS interface endpoint. For example, vpce-01f22cc14558c241f-s8039x4c.sts.us-west-2.vpce.amazonaws.com |

S3 Service Regional Endpoint | The S3 regional endpoint when the S3 data bucket and the S3 staging bucket need to be accessed through a region-specific S3 regional endpoint. This property is optional for SQL warehouse. Doesn't apply to all-purpose cluster and job cluster. Default is s3.amazonaws.com. |

S3 Region Name¹ | The AWS cluster region in which the bucket you want to access resides. Select a cluster region if you choose to provide a custom JDBC URL that does not contain a cluster region name in the JDBC URL connection property. |

Zone ID¹ | The zone ID for the Databricks job cluster. This property is optional for job cluster. Doesn't apply to SQL warehouse and all-purpose cluster. Specify the Zone ID only if you want to create a Databricks job cluster in a particular zone at runtime. For example, us-west-2a. Note: The zone must be in the same region where your Databricks account resides. |

EBS Volume Type¹ | The type of EBS volumes launched with the cluster. This property is optional for job cluster. Doesn't apply to SQL warehouse and all-purpose cluster. |

EBS Volume Count¹ | The number of EBS volumes launched for each instance. You can choose up to 10 volumes. This property is optional for job cluster. Doesn't apply to SQL warehouse and all-purpose cluster. Note: In a Databricks connection, specify at least one EBS volume for node types with no instance store. Otherwise, cluster creation fails. |

EBS Volume Size¹ | The size of a single EBS volume in GiB launched for an instance. This property is optional for job cluster. Doesn't apply to SQL warehouse and all-purpose cluster. |

¹ Doesn't apply to mappings in advanced mode.
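
The Endpoint DNS Name for S3 property above asks you to replace the asterisk in the interface endpoint DNS name with the `bucket` keyword. A minimal sketch of that substitution follows. The function name `s3_endpoint_dns_for_connection` is hypothetical, and the sketch assumes the asterisk appears as a leading `*.` label.

```python
def s3_endpoint_dns_for_connection(interface_dns: str) -> str:
    """Swap the leading '*' label of an S3 interface endpoint DNS name for 'bucket'.

    Hypothetical helper for illustration only.
    """
    name = interface_dns.strip()
    if name.startswith("*."):
        return "bucket." + name[2:]
    if name.startswith("bucket."):
        return name  # already in the expected form
    raise ValueError("expected a DNS name starting with '*.' or 'bucket.'")


if __name__ == "__main__":
    print(s3_endpoint_dns_for_connection("*.vpce-s3.us-west-2.vpce.amazonaws.com"))
```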

Functionality | Advanced Mode | Data Integration Server |

|---|---|---|

Profiling on a Managed Databricks Delta table | YES | YES |

Profiling on an External Databricks Delta table | YES | YES |

Profiling on the view table | NO | YES |

Data Preview | NO | YES |

Simple Filter | NO | YES |

Custom Query | NO | YES |

SQL Override in the Advanced Options | NO | YES |

FIRST N Rows | NO | YES |

Drilldown | NO | YES |

Query on profile results | NO | YES |

Database Name or Table Name override in the Advanced Options | NO | YES |

Ability to create a Databricks Delta source mapplet profile | NO | YES |

Support of Data Quality Insight | NO | YES |

Ability to create scorecard metrics | YES | YES |

Compare Column | YES | YES |

Compare Profile runs | YES | YES |

Export/Import of Databricks profiles | YES | YES |

Ability to link columns of files to Data Quality assets | YES | YES |

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

Directory | Enter the full directory or click Browse to locate and select the directory. |

Date Format | Enter the date format for date fields in the flat file. Default date format is: MM/dd/yyyy HH:mm:ss. |

Code Page | Choose UTF-8. |
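
To check that date values in a flat file match the default Date Format above before you profile the file, you can parse a sample value outside the product. The sketch below assumes that `MM/dd/yyyy HH:mm:ss` is a Java-style pattern and maps it by hand to the equivalent Python `strptime` directives. The function name is hypothetical.

```python
from datetime import datetime

# Hand-written Python equivalent of the default pattern MM/dd/yyyy HH:mm:ss.
DEFAULT_FLAT_FILE_FORMAT = "%m/%d/%Y %H:%M:%S"


def parse_flat_file_date(value: str) -> datetime:
    """Parse a date string in the default flat file format.

    Raises ValueError when the value does not match the format.
    """
    return datetime.strptime(value, DEFAULT_FLAT_FILE_FORMAT)


if __name__ == "__main__":
    print(parse_flat_file_date("12/31/2023 23:59:58"))
```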

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

Service Account ID | Enter the client_email value present in the JSON file that you download after you create a service account. |

Service Account Key | Enter the private_key value present in the JSON file that you download after you create a service account. |

Project ID | Enter the ID of the project in the Google service account that contains the dataset that you want to connect to. |

Connection mode | Choose the mode that you want to use to read data from or write data to Google BigQuery. |

Property | Value |

|---|---|

Runtime Environment | Name of the runtime environment where you want to run the tasks. Select a Secure Agent or serverless runtime environment. |

Service Account ID | The client_email value in the JSON file that you download after you create a service account. |

Service Account Key | The private_key value in the JSON file that you download after you create a service account. |

Project ID | The project_id value in the JSON file that you download after you create a service account. If you created multiple projects with the same service account, enter the ID of the project that contains the bucket that you want to connect to. |

Is Encrypted File¹ | Specifies whether a file is encrypted. Select this option when you import an encrypted file from Google Cloud Storage. Default is unselected. |

Bucket Name | The Google Cloud Storage bucket name that you want to connect to. When you select a source object or target object in a mapping, the Package Explorer lists files and folders available in the specified Google Cloud Storage bucket. If you do not specify a bucket name, you can select a bucket from the Package Explorer to select a source or target object. |

Optimize Object Metadata Import¹ | Optimizes the import of metadata for the selected object without parsing other objects, folders, or sub-folders available in the bucket. Directly importing metadata for the selected object can improve performance by reducing the overhead and time taken to parse each object available in the bucket. Default is not selected. |

¹ Applies only to mappings in advanced mode.
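
The Service Account ID, Service Account Key, and Project ID values in the Google connection tables above come from the `client_email`, `private_key`, and `project_id` fields of the service account JSON file. The sketch below shows one way to pull those fields out of the file contents. The function name and the sample key are invented for illustration.

```python
import json
from typing import Dict

REQUIRED_FIELDS = ("client_email", "private_key", "project_id")


def connection_fields_from_key(key_json: str) -> Dict[str, str]:
    """Map service account JSON fields to the connection property names."""
    key = json.loads(key_json)
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise KeyError(f"service account file is missing: {', '.join(missing)}")
    return {
        "Service Account ID": key["client_email"],
        "Service Account Key": key["private_key"],
        "Project ID": key["project_id"],
    }


if __name__ == "__main__":
    # Invented sample content; a real key file contains more fields.
    sample = json.dumps({
        "client_email": "profiler@example-project.iam.gserviceaccount.com",
        "private_key": "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
        "project_id": "example-project",
    })
    fields = connection_fields_from_key(sample)
    print(fields["Service Account ID"], fields["Project ID"])
```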

Property | Description |

|---|---|

Connection Name | Name of the connection. |

Description | Description of the connection. |

Type | Type of connection. Select JDBC V2 from the list. |

Runtime Environment | The name of the runtime environment where you want to run tasks. Select a Secure Agent or serverless runtime environment. |

User Name | The user name to connect to the database. |

Password | The password for the database user name. |

Schema Name | Optional. The schema name. If you don't specify the schema name, all the schemas available in the database are listed. To read from or write to Oracle public synonyms, enter PUBLIC. |

JDBC Driver Class Name | Name of the JDBC driver class. To connect to Aurora PostgreSQL, specify the following driver class name: org.postgresql.Driver For more information about which driver class to use with specific databases, see the corresponding third-party vendor documentation. |

Connection String | Connection string to connect to the database. Use the following format to specify the connection string: jdbc:<subprotocol>:<subname> For example, the connection string for the Aurora PostgreSQL database type is jdbc:postgresql://<host>:<port>[/dbname]. For more information about the connection string to use with specific drivers, see the corresponding third-party vendor documentation. |

Additional Security Properties | Masks sensitive and confidential data of the connection string that you don't want to display in the session log. Specify the part of the connection string that you want to mask. When you create a connection, the string you enter in this field appends to the string that you specified in the Connection String field. |

Database Type | The database type to which you want to connect. You can select one of the following database types:

Default is Others. |

Enable Auto Commit¹ | Specifies whether the driver supports connections to automatically commit data to the database when you run an SQL statement. When disabled, the driver does not support connections to automatically commit data even if the auto-commit mode is enabled in the JDBC driver. Default is disabled. |

Support Mixed-Case Identifiers | Indicates whether the database supports case-sensitive identifiers. When enabled, the Secure Agent encloses all identifiers within the character selected for the SQL Identifier Character property. Default is disabled. |

SQL Identifier Character | Type of character that the database uses to enclose delimited identifiers in SQL queries. The available characters depend on the database type. Select None if the database uses regular identifiers. When the Secure Agent generates SQL queries, it does not place delimited characters around any identifiers. Select a character if the database uses delimited identifiers. When the Secure Agent generates SQL queries, it encloses delimited identifiers within this character. |

¹ Doesn't apply to mappings in advanced mode. | |
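
The Connection String format described above can be sketched in a few lines of Python. This is only an illustration of the `jdbc:postgresql://<host>:<port>[/dbname]` pattern for Aurora PostgreSQL. The function name and the host `db.example.com` are hypothetical, and the default port of 5432 is assumed from the usual PostgreSQL default.

```python
from typing import Optional


def build_postgres_jdbc_url(host: str, port: int = 5432, dbname: Optional[str] = None) -> str:
    """Return a jdbc:postgresql URL; the database name segment is optional."""
    if not host:
        raise ValueError("host is required")
    url = f"jdbc:postgresql://{host}:{port}"
    if dbname:
        # The [/dbname] part of the format is appended only when a name is given.
        url += f"/{dbname}"
    return url


# Hypothetical host and database name, for illustration only.
print(build_postgres_jdbc_url("db.example.com", 5432, "sales"))
```

For other database types, the subprotocol and subname differ, so check the driver vendor's documentation rather than reusing this pattern.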

Property | Value |

|---|---|

Connection Name | Name of the connection. Each connection name must be unique within the organization. Connection names can contain alphanumeric characters, spaces, and the following special characters: _ . + -, Maximum length is 255 characters. |

Description | Description of the connection. Maximum length is 4000 characters. |

Type | Type of connection. Select MySQL from the list. |

Runtime Environment | The name of the runtime environment where you want to run the tasks. Specify a Secure Agent, Hosted Agent, or serverless runtime environment. |

User Name | User name for the database login. The user name can't contain a semicolon. |

Password | Password for the database login. The password can't contain a semicolon. |

Host | Name of the machine that hosts the database server. |

Port | Network port number to connect to the database server. Default is 3306. |

Database Name | Name of the database that you want to connect to. Note: The database name is case-sensitive. Maximum length is 64 characters. The database name can contain alphanumeric and underscore characters. |

Code Page | The code page of the database server. |

Metadata Advanced Connection Properties | Optional properties for the JDBC driver to fetch the metadata. Enter properties in the following format: <parameter name>=<parameter value> If you enter more than one property, separate each key-value pair with a semicolon. For example, enter the following property to configure the connection timeout when you test a connection: connectTimeout=<value_in_milliseconds> Note: The default connection timeout is 270000 milliseconds. |

Runtime Advanced Connection Properties | Optional properties for the ODBC driver. If you specify more than one property, separate each key-value pair with a semicolon. Not applicable to MySQL. |
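
As a rough sketch of the `<parameter name>=<parameter value>` format with semicolon separators, the following Python helper assembles a value for the Metadata Advanced Connection Properties field. The helper name and the `exampleProperty` entry are hypothetical. Only `connectTimeout` comes from the table above.

```python
from typing import Mapping


def format_connection_properties(props: Mapping[str, object]) -> str:
    """Join properties as name=value pairs separated by semicolons."""
    pairs = []
    for name, value in props.items():
        text = str(value)
        # A semicolon would be read as a pair separator, so reject it here.
        if ";" in name or ";" in text or "=" in name:
            raise ValueError(f"property {name!r} contains a reserved character")
        pairs.append(f"{name}={text}")
    return ";".join(pairs)


# connectTimeout is taken from the table; exampleProperty is a placeholder.
print(format_connection_properties({"connectTimeout": 30000, "exampleProperty": "value"}))
```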

Property | Value |

|---|---|

Runtime Environment | The name of the runtime environment where you want to run the tasks. Specify a Secure Agent or serverless runtime environment. |

Azure DW JDBC URL | Microsoft Azure Synapse SQL JDBC connection string. Example for Microsoft SQL Server authentication: jdbc:sqlserver://<Server>.database.windows.net:1433;database=<Database> Example for Azure Active Directory (AAD) authentication: jdbc:sqlserver://<Server>.database.windows.net:1433;database=<Database>;encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=30;Authentication=ActiveDirectoryPassword; |

Azure DW JDBC Username | User name to connect to the Microsoft Azure Synapse SQL account. Provide the AAD user name for AAD authentication. |

Azure DW JDBC Password | Password to connect to the Microsoft Azure Synapse SQL account. |

Azure DW Schema Name | Name of the schema in Microsoft Azure Synapse SQL. |

Azure Storage Type | Type of Azure storage to stage the files. You can select any of the following storage types:

|

Authentication Type | Authentication type to connect to Azure storage to stage the files. Select one of the following options:

|

Azure Blob Account Name | Applicable to Shared Key Authentication for Microsoft Azure Blob Storage. Name of the Microsoft Azure Blob Storage account to stage the files. |

Azure Blob Account Key | Applicable to Shared Key Authentication for Microsoft Azure Blob Storage. Microsoft Azure Blob Storage access key to stage the files. |

ADLS Gen2 Storage Account Name | Applicable to Shared Key Authentication and Service Principal Authentication for Microsoft Azure Data Lake Storage Gen2. Name of the Microsoft Azure Data Lake Storage Gen2 account to stage the files. |

ADLS Gen2 Account Key | Applicable to Shared Key Authentication for Microsoft Azure Data Lake Storage Gen2. Microsoft Azure Data Lake Storage Gen2 access key to stage the files. |

Client ID | Applicable to Service Principal Authentication for Microsoft Azure Data Lake Storage Gen2. The application ID or client ID for your application registered in the Azure Active Directory. |

Client Secret | Applicable to Service Principal Authentication for Microsoft Azure Data Lake Storage Gen2. The client secret for your application. |

Tenant ID | Applicable to Service Principal Authentication for Microsoft Azure Data Lake Storage Gen2. The directory ID or tenant ID for your application. |

Blob End-point | Type of Microsoft Azure endpoints. You can select any of the following endpoints:

|

VNet Rule | Enable to connect to a Microsoft Azure Synapse SQL endpoint residing in a virtual network (VNet). When you use a serverless runtime environment, you cannot connect to a Microsoft Azure Synapse SQL endpoint residing in a virtual network. |
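
The two Azure DW JDBC URL examples in the table differ only in the options appended for AAD authentication. A minimal Python sketch of that difference follows. The function name and the server and database values are hypothetical, and the option list is copied from the AAD example in the table rather than from the driver documentation.

```python
def build_synapse_jdbc_url(server: str, database: str, use_aad: bool = False) -> str:
    """Build a jdbc:sqlserver URL in the shape shown in the Azure DW JDBC URL row."""
    base = f"jdbc:sqlserver://{server}.database.windows.net:1433;database={database}"
    if not use_aad:
        # Microsoft SQL Server authentication needs only the server and database.
        return base
    # Options taken verbatim from the AAD authentication example.
    aad_options = [
        "encrypt=true",
        "trustServerCertificate=false",
        "hostNameInCertificate=*.database.windows.net",
        "loginTimeout=30",
        "Authentication=ActiveDirectoryPassword",
    ]
    return base + ";" + ";".join(aad_options) + ";"


# Hypothetical server and database names.
print(build_synapse_jdbc_url("myserver", "mydb"))
print(build_synapse_jdbc_url("myserver", "mydb", use_aad=True))
```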

Property | Value |

|---|---|

Runtime Environment | The name of the runtime environment where you want to run tasks. Select a Secure Agent, Hosted Agent, or serverless runtime environment. |

SQL Connection String | The SQL connection string to connect to Microsoft Fabric Data Warehouse. Specify the connection string in the following format: <Server>.datawarehouse.pbidedicated.windows.net |

Client ID | The application ID or client ID of your application registered in Azure Active Directory for service principal authentication. |

Client Secret | The client secret for your application registered in Azure Active Directory. |

Tenant ID | The tenant ID of your application registered in Azure Active Directory. |

Workspace | The name of the workspace in Microsoft Fabric Data Warehouse that you want to connect to. |

Database | The name of the database in Microsoft Fabric Data Warehouse that you want to connect to. |

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

SQL Server Version | Enter the Microsoft SQL Server database version. |

Authentication Mode | Choose SQL Server or Windows Authentication v2. |

User Name | Enter the user name for the database login. |

Password | Enter the password for the database login. |

Host | Enter the name of the machine that hosts the database server. |

Port | Enter the network port number used to connect to the database server. Default is 1433. |

Database Name | Enter the database name for the Microsoft SQL Server target. |

Schema | Enter a schema name. |

Code Page | Choose UTF-8. |

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

SQL Server Version | Enter the Azure SQL Server database version. |

Authentication Mode | Choose SQL Server or Windows Authentication v2. |

User Name | Enter the user name for the database login. |

Password | Enter the password for the database login. |

Host | Enter the name of the machine that hosts the database server. |

Port | Enter the network port number used to connect to the database server. Default is 1433. |

Database Name | Enter the database name for the Microsoft SQL Server target. |

Schema | Enter the schema used for the target connection. |

Code Page | Choose UTF-8. |

Encryption Method | Enter SSL. |

Crypto Protocol Version | Enter TLSv1.2. |

Property | Value |

|---|---|

Connection Name | Name of the connection. Each connection name must be unique within the organization. Connection names can contain alphanumeric characters, spaces, and the following special characters: _ . + -, Maximum length is 255 characters. |

Description | Description of the connection. Maximum length is 4000 characters. |

Type | Type of connection. Select ODBC from the list. |

Runtime Environment | Choose an active Secure Agent. |

ODBC Subtype | The ODBC connection subtype that you'll use to connect to an ODBC-compliant endpoint. |

User Name | The user name to connect to the ODBC-compliant endpoint. |

Password | The password to connect to the ODBC-compliant endpoint. The password cannot contain a semicolon. |

Data Source Name | The name of the data source. |

Schema | The name of the schema that the data source specifies. |

Code Page | The code page of the endpoint server or flat file defined in the connection. |

Property | Value |

|---|---|

Driver Manager for Linux | The driver manager for a Secure Agent machine hosted on Linux. When you create a new ODBC connection on Linux, select one of the following driver managers from the list:

Default is UnixODBC2.3.0. |

Connection Environment SQL | The SQL statement to set up the ODBC-compliant endpoint environment when you connect to a PostgreSQL or Teradata database. The database environment applies for the session that uses this connection. You can add single or multiple SQL statements. Separate the SQL statements with a semicolon. For example, you can enter the following statement to set the time zone: SET timezone to 'America/New_York'; For mappings that run on a PostgreSQL database, the property applies when you enable SQL ELT optimization. For mappings that run on a Teradata database, the property applies with or without SQL ELT optimization. |
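
To illustrate how several statements might be combined for the Connection Environment SQL field, here is a small Python sketch that joins statements with semicolons. The helper name is hypothetical, and the second statement in the usage line is an assumed example, not one from the table.

```python
from typing import Iterable


def join_environment_sql(statements: Iterable[str]) -> str:
    """Join SQL statements with semicolons, ending each one with a semicolon."""
    cleaned = []
    for statement in statements:
        # Drop surrounding whitespace and any trailing semicolon before joining.
        text = statement.strip().rstrip(";").strip()
        if text:
            cleaned.append(text)
    if not cleaned:
        return ""
    return "; ".join(cleaned) + ";"


print(join_environment_sql([
    "SET timezone to 'America/New_York'",  # statement from the table above
    "SET search_path to public",           # assumed second statement
]))
```

The sketch only joins statements. It does not split an existing string, because a semicolon inside a quoted literal would make naive splitting unreliable.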

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

User Name | Enter the user name of the database login. |

Password | Enter the password of the database login. |

Host | Enter the name of the machine that hosts the database server. |

Port | Enter the network port number used to connect to the database server. Default is 1521. |

Service Name | Enter the service name or System ID (SID) that uniquely identifies the Oracle database. |

Schema | Enter the schema name. If you do not specify a schema, Data Profiling uses the default schema. |

Code Page | Choose UTF-8. |

Property | Value |

|---|---|

Runtime Environment | Name of the runtime environment where you want to run the tasks. |

Authentication Type | Authentication type to connect to Oracle Cloud Object Storage to stage the files. Select one of the following options:

|

User | The Oracle Cloud Identifier (OCID) of the user for whom the key pair is added. |

Finger Print | Fingerprint of the public key. |

Tenancy | Oracle Cloud Identifier (OCID) of the tenancy, which is the globally unique name of the OCI account. |

Config File Location | Location of the configuration file on the Secure Agent machine. Enter the absolute path. If you do not enter any value, <system_default_location>/.oci/config is used to retrieve the configuration file. |

Private Key File Location | Location of the private key file in .PEM format on the Secure Agent machine. |

Profile Name | Required if you use the ConfigFile for authentication. Name of the profile in the configuration file that you want to use. Default is DEFAULT. |

Bucket Name | The Oracle Cloud Storage bucket name. This bucket contains the objects and files. |

Folder Path | The path to the folder under the specified Oracle Cloud Storage bucket. For example, bucket/Dir_1/Dir_2/FileName.txt. Here, Dir_1/Dir_2 is the folder path. |

Region | Oracle Cloud Object Storage region where the bucket exists. Select the Oracle Cloud Object Storage region from the list. |
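
The Folder Path example above (`bucket/Dir_1/Dir_2/FileName.txt`, where `Dir_1/Dir_2` is the folder path) can be reproduced with a short Python sketch. The function name is hypothetical. The sketch assumes the first path segment is the bucket and the last is the file name.

```python
from pathlib import PurePosixPath
from typing import Tuple


def split_object_path(full_path: str) -> Tuple[str, str, str]:
    """Split 'bucket/dir/.../file' into (bucket, folder path, file name)."""
    parts = PurePosixPath(full_path).parts
    if len(parts) < 2:
        raise ValueError("expected at least a bucket and a file name")
    bucket, file_name = parts[0], parts[-1]
    # Everything between the bucket and the file name is the folder path.
    folder_path = "/".join(parts[1:-1])
    return bucket, folder_path, file_name


print(split_object_path("bucket/Dir_1/Dir_2/FileName.txt"))
```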

Property | Value |

|---|---|

Runtime Environment | The name of the runtime environment where you want to run tasks. |

Authentication Type | The authentication mechanism to connect to PostgreSQL. Select Database. |

User Name | User name to access the PostgreSQL database. |

Password | Password for the PostgreSQL database user name. |

Host Name | Host name of the PostgreSQL server to which you want to connect. |

Port | Port number for the PostgreSQL server to which you want to connect. Default is 5432. |

Database Name | The PostgreSQL database name. |

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

User Name | Enter the user name for the Salesforce account. |

Password | Enter the password for the Salesforce account. |

Security Token | Enter the security token generated from the Salesforce application. To generate a security token in the Salesforce application, click Reset My Security Token in the Setup > Personal Setup > My Personal Information section. You do not need to generate the security token every time if you add the Informatica Cloud IP address ranges: 209.34.91.0-255, 206.80.52.0-255, 206.80.61.0-255, and 209.34.80.0-255 to the Trusted IP Ranges field. To add the Informatica Cloud IP address ranges, navigate to the Setup > Security Controls > Network Access section in your Salesforce application. |

Service URL | Enter the URL of the Salesforce service. Maximum length is 100 characters. |
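
The Informatica Cloud IP address ranges listed in the Security Token row each cover the last octet from 0 to 255, which is the same as a /24 network. As a sketch, the following Python snippet checks whether an address falls inside those ranges. The function name is hypothetical, and the check is only an illustration. It does not change anything in Salesforce.

```python
import ipaddress

# Ranges from the Security Token row, written as /24 networks
# (a.b.c.0-255 covers exactly the addresses in a.b.c.0/24).
TRUSTED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("209.34.91.0/24", "206.80.52.0/24", "206.80.61.0/24", "209.34.80.0/24")
]


def in_trusted_ranges(address: str) -> bool:
    """Return True if the IPv4 address lies in one of the listed ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in TRUSTED_NETWORKS)


print(in_trusted_ranges("209.34.91.17"))
print(in_trusted_ranges("206.80.53.1"))
```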

Property | Value |

|---|---|

Runtime Environment | Choose the active Secure Agent. |

OAuth Consumer Key | Enter the consumer key that you get from Salesforce, which is required to generate a valid refresh token. |

OAuth Consumer Secret | Enter the consumer secret that you get from Salesforce, which is required to generate a valid refresh token. |

OAuth Refresh Token | Enter the refresh token generated in Salesforce using the consumer key and consumer secret. |

Service URL | Enter the URL of the Salesforce service endpoint. Maximum length is 100 characters. |

Property | Value |

|---|---|

Runtime Environment | Runtime environment that contains the Secure Agent that you want to use to read data from SAP BW objects. |

Username | SAP user name with the appropriate user authorization. Important: Mandatory parameter to run a profile. |

Password | SAP password. Important: Mandatory parameter to run a profile. |

Connection type | Type of connection that you want to create. Select one of the following values:

Default is Application. |

Host name | Required when you create an SAP application connection. Host name or IP address of the SAP BW server that you want to connect to. |

System number | Required when you create an SAP application connection. SAP system number. |

Message host name | Required when you create an SAP load balancing connection. Host name of the SAP message server. |

R3 name/SysID | Required when you create an SAP load balancing connection. SAP system name. |

Group | Required when you create an SAP load balancing connection. Group name of the SAP application server. |

Client | Required. SAP client number. Important: Mandatory parameter to run a profile. |

Language | Language code that corresponds to the language used in the SAP system. Important: Mandatory parameter to run a profile. |

Trace | Use this option to track the JCo calls that the SAP system makes. Specify one of the following values:

Default is 0. SAP stores information about the JCo calls in a trace file. You can access the trace files from the following directories:

<Informatica Secure Agent installation directory>\apps\Data_Integration_Server\<Latest version>\ICS\main\tomcat <Informatica Secure Agent installation directory>\apps\Data_Integration_Server\<Latest version>\ICS\main\bin\rdtm |

Additional parameters | Additional JCo connection parameters that you want to use. Use the following format: <parameter name1>=<value1>, <parameter name2>=<value2> |

Port Range | HTTP port range that the Secure Agent must use to read data from the SAP BW server in streaming mode. Enter the minimum and maximum port numbers with a hyphen as the separator. The minimum and maximum port number can range between 10000 and 65535. Default is 10000-65535. |

Use HTTPS | Select this option to enable HTTPS streaming. |

Keystore location | Absolute path to the JKS keystore file. |

Keystore password | Password for the .JKS file. |

Private key password | Export password specified for the .P12 file. |

SAP Additional Parameters | Additional SAP parameters that the Secure Agent uses to connect to the SAP system as an RFC client. You can specify the required RFC-specific parameters and connection information to enable communication between Data Integration and SAP. For example, you can specify the SNC connection parameters as additional arguments to connect to SAP: GROUP=interfaces ASHOST=tzxscs20.bmwgroup.net SYSNR=20 SNC_MODE=1 SNC_PARTNERNAME=p:CN=ZXS, OU=SAP system, O=BMW Group SNC_MYNAME=p:CN=CMDB_SWP-2596, OU=SNC partner system, O=BMW Group SNC_LIB=/global/informatica/104/server/bin/libsapcrypto.so X509CERT=/global/informatica/104/SAPSNCertfiles/ROOT_CA_V3.crt TRACE=2 Note: For information about the SNC parameters that you can configure in this field, see the Informatica How-To Library article. Note: If you have specified the mandatory connection parameters in the connection, those values override the additional parameter arguments. |
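
The Port Range rule in the table above (minimum and maximum separated by a hyphen, both between 10000 and 65535) can be checked before you enter the value. The following Python sketch is one way to do that. The function name is hypothetical, and rejecting a minimum greater than the maximum is an assumption that the table does not state.

```python
from typing import Tuple

MIN_PORT, MAX_PORT = 10000, 65535


def parse_port_range(text: str = "10000-65535") -> Tuple[int, int]:
    """Parse '<min>-<max>' and check both ports lie between 10000 and 65535."""
    low_text, separator, high_text = text.strip().partition("-")
    if not separator:
        raise ValueError("expected the format <min>-<max>")
    low, high = int(low_text), int(high_text)  # int() raises ValueError on bad input
    # Assumption: the minimum must not exceed the maximum.
    if not (MIN_PORT <= low <= high <= MAX_PORT):
        raise ValueError(f"ports must satisfy {MIN_PORT} <= min <= max <= {MAX_PORT}")
    return low, high


print(parse_port_range("10000-20000"))
```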

Property | Value |

|---|---|

Connection Name | Name of the connection. Each connection name must be unique within the organization. Connection names can contain alphanumeric characters, spaces, and the following special characters: _ . + -, Maximum length is 255 characters. |

Description | Description of the connection. Maximum length is 4000 characters. |

Type | SAP HANA |

Runtime Environment | The name of the runtime environment where you want to run tasks. Select a Secure Agent or serverless runtime environment. |

Host | SAP HANA server host name. |

Port | SAP HANA server port number. |

Database Name | Name of the SAP HANA database. |

Current Schema | SAP HANA database schema name. Specify _SYS_BIC when you use SAP HANA database modeling views. |

Code Page | The code page of the database server. Select the UTF-8 code page. |

Username | User name for the SAP HANA account. |

Password | Password for the SAP HANA account. The password can contain alphanumeric characters and the following special characters: ~ ` ! @ # $ % ^ & * ( ) _ - + = { [ } ] | : ; ' < , > . ? / Note: You can't use a semicolon character in combination with a left brace or right brace character. |
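
The password rules in the row above lend themselves to a quick pre-check. The Python sketch below treats "alphanumeric" as ASCII letters and digits and treats an empty password as invalid. Both are assumptions, and the function name is hypothetical.

```python
import string

# Special characters listed in the Password row.
ALLOWED_SPECIALS = set("~`!@#$%^&*()_-+={[}]|:;'<,>.?/")
# Assumption: "alphanumeric" means ASCII letters and digits.
ALLOWED_CHARACTERS = set(string.ascii_letters + string.digits) | ALLOWED_SPECIALS


def is_valid_hana_password(password: str) -> bool:
    """Check a password against the character rules in the Password row."""
    # Assumption: an empty password is rejected.
    if not password or any(ch not in ALLOWED_CHARACTERS for ch in password):
        return False
    # A semicolon can't be combined with a left or right brace.
    if ";" in password and ("{" in password or "}" in password):
        return False
    return True


print(is_valid_hana_password("Abc123!{"))
print(is_valid_hana_password("abc;{"))
```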

Property | Value |

|---|---|

Metadata Advanced Connection Properties | Optional properties for the JDBC driver to fetch the metadata. If you specify more than one property, separate each key-value pair with a semicolon. For example, you can set the following connection timeout for the JDBC driver when you connect to SAP HANA: connectTimeout=180000 |

Run-time Advanced Connection Properties | Optional properties for the ODBC driver. If you specify more than one property, separate each key-value pair with a semicolon. For example, charset=sjis;readtimeout=180 Not applicable to SAP HANA. |
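
To show how a semicolon-separated property string such as `charset=sjis;readtimeout=180` breaks down into key-value pairs, here is a minimal Python sketch. The parser and its name are hypothetical, and the sketch does not reflect how the drivers themselves read the value.

```python
from typing import Dict


def parse_connection_properties(text: str) -> Dict[str, str]:
    """Split 'name=value;name=value' into a dictionary of strings."""
    properties: Dict[str, str] = {}
    for pair in text.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # tolerate a trailing semicolon
        name, separator, value = pair.partition("=")
        if not separator or not name.strip():
            raise ValueError(f"malformed property: {pair!r}")
        properties[name.strip()] = value.strip()
    return properties


print(parse_connection_properties("charset=sjis;readtimeout=180"))
```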

Property | Value |

|---|---|

Connection Name | Name of the connection. |

Description | Description of the connection. |

Type | Type of connection. |

Runtime Environment | Required. Runtime environment that contains the Secure Agent that you want to use to access SAP tables. |

Username | Required. SAP user name with the appropriate user authorization. Important: Mandatory parameter to run a profile. |

Password | Required. SAP password. Important: Mandatory parameter to run a profile. |

Client | Required. SAP client number. Important: Mandatory parameter to run a profile. |

Language | Language code that corresponds to the SAP language. Important: Mandatory parameter to run a profile. |

Saprfc.ini Path | Required. Local directory to the sapnwrfc.ini file. To write to SAP tables, use the following directory: <Informatica Secure Agent installation directory>/apps/Data_Integration_Server/ext/deploy_to_main/bin/rdtm |

Destination | Required. DEST entry that you specified in the sapnwrfc.ini file for the SAP application server. Destination is case sensitive. Use all uppercase letters for the destination. |

Port Range | HTTP port range. The SAP Table connection uses the specified port numbers to connect to SAP tables using the HTTP protocol. Ensure that you specify valid numbers to prevent connection errors. Default: 10000-65535. Enter a range within the default range, for example, 10000-20000. When a range is outside the default range, the connection uses the default range. |

Test Streaming | Tests the connection. When selected, tests the connection using both the RFC and HTTP protocols. When not selected, tests the connection using the RFC protocol. |

Https Connection | When selected, connects to SAP through HTTPS protocol. To successfully connect to SAP through HTTPS, verify that an administrator has configured the machines that host the Secure Agent and the SAP system. |

Keystore Location | The absolute path to the JKS keystore file. |

Keystore Password | The destination password specified for the .JKS file. |

Private Key Password | The export password specified for the .P12 file. |
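
The Port Range row above states that a range outside the default range is replaced by the default. A minimal Python sketch of that fallback follows. The function name is hypothetical, and falling back on malformed input as well is an assumption that the table does not confirm.

```python
from typing import Tuple

DEFAULT_RANGE = (10000, 65535)


def effective_port_range(text: str) -> Tuple[int, int]:
    """Return the requested range, or the default when it is not usable."""
    try:
        low_text, high_text = text.split("-")
        low, high = int(low_text), int(high_text)
    except ValueError:
        # Assumption: malformed input also falls back to the default range.
        return DEFAULT_RANGE
    if DEFAULT_RANGE[0] <= low <= high <= DEFAULT_RANGE[1]:
        return (low, high)
    # Outside the default range: the connection uses the default range.
    return DEFAULT_RANGE


print(effective_port_range("10000-20000"))
print(effective_port_range("500-900"))
```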

Property | Value |

|---|---|

Runtime Environment | Choose an active Secure Agent with a package. |

Authentication | Select the authentication method that the connector must use to log in to Snowflake. Default is Standard. |

Username | Enter the user name for the Snowflake account. |

Password | Enter the password for the Snowflake account. |

Account | Name of the Snowflake account. In the Snowflake URL, your account name is the first segment in the domain. For example, if 123abc is your account name, the URL must be in the following format: https://123abc.snowflakecomputing.com |

Warehouse | Name of the Snowflake warehouse. |

Role | Enter the Snowflake user role name. |

Additional JDBC URL Parameters: | Optional. The additional JDBC connection parameters. Enter one or more JDBC connection parameters in the following format: <param1>=<value>&<param2>=<value>&<param3>=<value>.... For example, user=jon&warehouse=mywh&db=mydb&schema=public To override the database and schema name used to create temporary tables in Snowflake, enter the database and schema name in the following format: ProcessConnDB=<DB name>&ProcessConnSchema=<schema_name> To view only the specified database and schema while importing a Snowflake table, specify the database and schema name in the following format: db=<database_name>&schema=<schema_name> To access Snowflake through Okta SSO authentication, enter the web-based IdP implementing SAML 2.0 protocol in the following format: authenticator=https://<Your_Okta_Account_Name>.okta.com Note: Microsoft Active Directory Federation Services is not supported. For more information about configuring Okta authentication, see the following website: https://docs.snowflake.com/en/user-guide/admin-security-fed-auth-configure-snowflake.html To load data from Google Cloud Storage to Snowflake for pushdown optimization, enter the Cloud Storage Integration name created for the Google Cloud Storage bucket in Snowflake in the following format: storage_integration=<Storage Integration name> You must specify the integration name in uppercase. For example, if the storage integration name you created in Snowflake for the Google Cloud Storage bucket is abc_int_ef, enter storage_integration=ABC_INT_EF. Note: Verify that there is no space before and after the equal sign (=) when you add the parameters. |
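
The `<param1>=<value>&<param2>=<value>` format, including the rule about no spaces around the equal sign, can be sketched in Python as follows. The helper name is hypothetical. The sketch strips whitespace and joins pairs with `&`, but it does not URL-encode values, because the table does not say whether encoding is expected.

```python
from typing import Mapping


def build_snowflake_jdbc_params(params: Mapping[str, str]) -> str:
    """Join parameters as name=value pairs separated by '&', with no spaces around '='."""
    pairs = []
    for name, value in params.items():
        name, value = name.strip(), str(value).strip()
        # '&' separates pairs and '=' separates name from value, so reject them in names.
        if not name or "&" in name or "=" in name or "&" in value:
            raise ValueError(f"invalid parameter: {name!r}")
        pairs.append(f"{name}={value}")
    return "&".join(pairs)


# Values from the examples in the table above.
print(build_snowflake_jdbc_params({"db": "mydb", "schema": "public"}))
print(build_snowflake_jdbc_params({"storage_integration": "abc_int_ef".upper()}))
```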