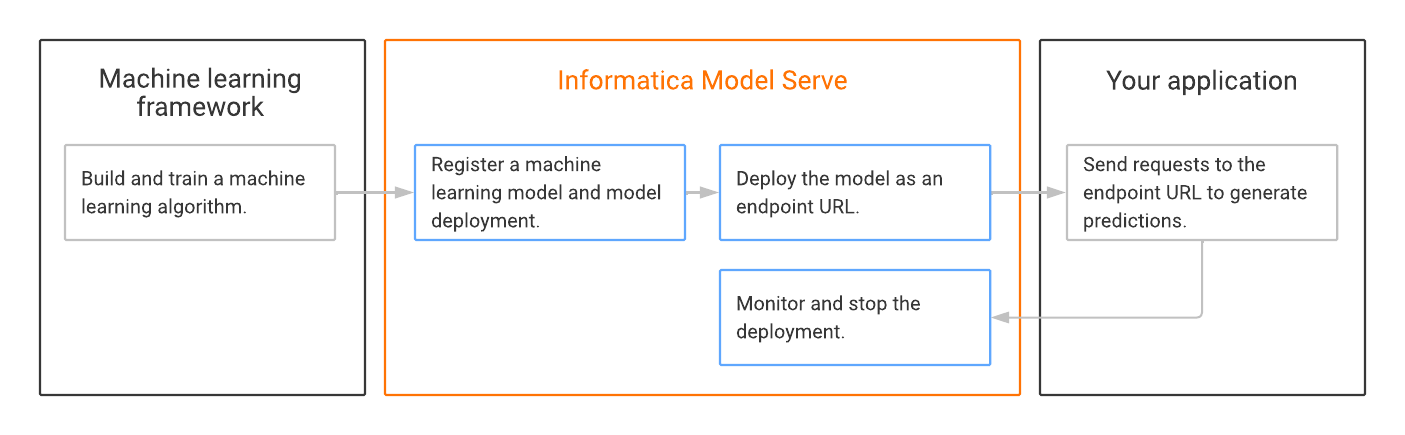

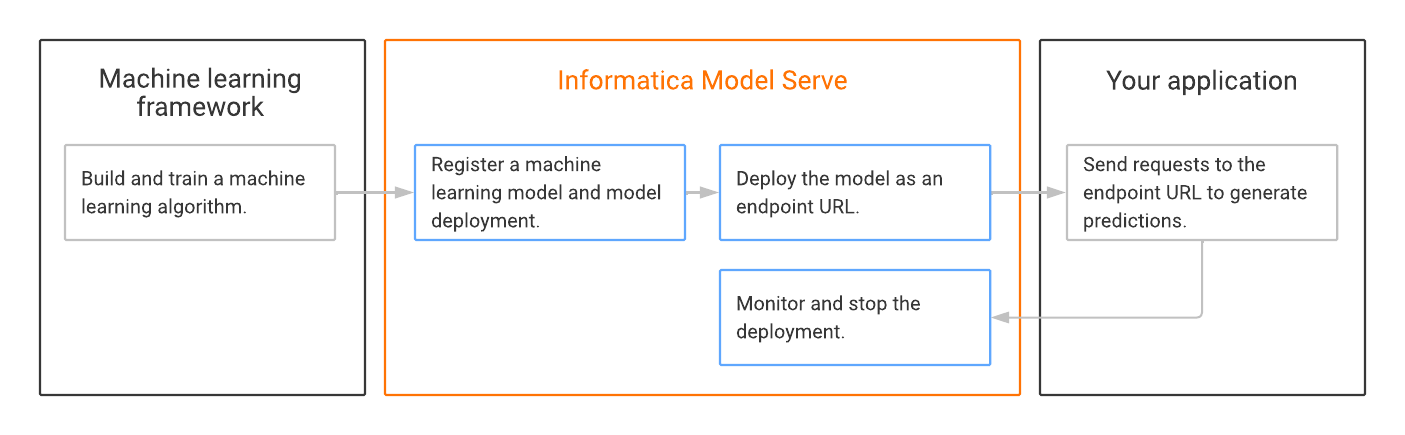

| Task | Required for | Description |
|---|---|---|
| Build and train a machine learning algorithm. | User-defined models | Use a third-party machine learning framework, such as TensorFlow, to build and train an algorithm. |
| Register a machine learning model and a model deployment. | User-defined models | In Model Serve, configure a machine learning model where you upload the algorithm files. Then create a model deployment to define the runtime properties for deploying the model. |
| Deploy the model as an endpoint URL. | Quick start models and user-defined models | Start the quick start model or model deployment to make the endpoint URL available for requests. |
| Send requests to the endpoint to generate predictions. | Quick start models and user-defined models | In your application, send API calls to the endpoint URL to request predictions from the deployed model. |
| Monitor and stop the deployment. | Quick start models and user-defined models | Use Model Serve to monitor the status of your deployments, download logs for troubleshooting, and stop deployments to release the cloud resources. |
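
The request step in the table can be sketched in Python as follows. This is a minimal illustration and not the documented Model Serve API: the endpoint URL, the JSON payload shape, and the bearer-token header are all assumptions, so take the real values from your own deployment. The helper names `build_prediction_request` and `request_prediction` are hypothetical.

```python
import json
import urllib.request


def build_prediction_request(endpoint_url, payload, token=None):
    """Build a POST request that carries a JSON payload to a deployed endpoint."""
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    if token:
        # Assumption: the endpoint accepts a bearer token. Your deployment
        # may require a different authentication header.
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        endpoint_url, data=body, headers=headers, method="POST"
    )


def request_prediction(endpoint_url, payload, token=None, timeout=30):
    """Send the request and return the decoded JSON response."""
    request = build_prediction_request(endpoint_url, payload, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical usage; replace the URL, payload, and token with real values:
# prediction = request_prediction(
#     "https://example.invalid/my-deployment/predict",
#     {"input": [1.0, 2.0, 3.0]},
#     token="<session token>",
# )
```

Building the request separately from sending it lets you inspect the headers and body before any network call is made, which can help when troubleshooting a deployment that rejects requests.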