Intelligent Data Lake Users

Intelligent Data Lake users include analysts who search, discover, and prepare data for analysis and administrators who manage the catalog and monitor the data lake.

Analysts work with data that resides both in and outside the data lake. They search for, discover, prepare, and publish data back to the data lake so that it is ready for further analysis.

Administrators use Live Data Map to extract metadata from information assets and load the metadata into the catalog. Administrators can run Informatica mappings to load data into the data lake. They also monitor data lake usage and work with Hadoop administrators to secure the data in the data lake.

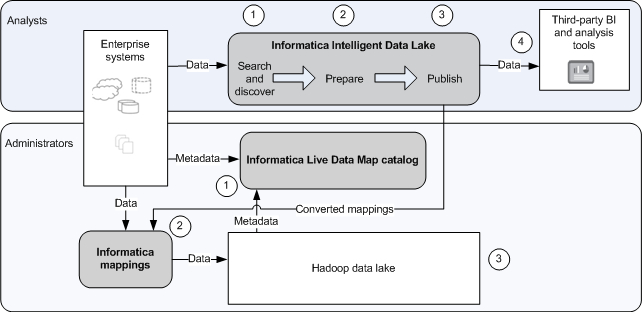

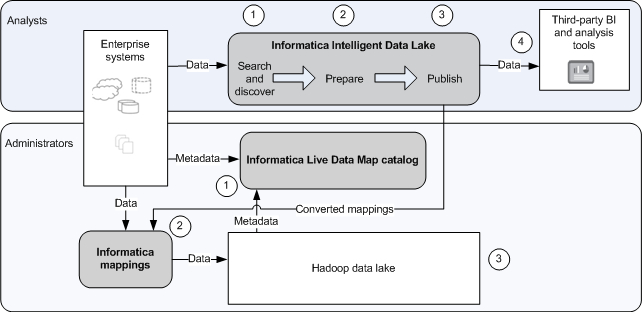

The following image displays the high-level tasks that analysts and administrators complete in Intelligent Data Lake:

Analysts perform the following high-level tasks:

1. Search the catalog for data that resides in and outside the data lake. Discover the lineage and relationships between data that resides in different enterprise systems.

2. Create a project and prepare data.

3. Publish prepared data to the data lake to share and collaborate with other analysts.

4. Optionally use third-party business intelligence or advanced analytic tools to run reports to further analyze the published data.

Administrators perform the following high-level tasks:

1. Use Live Data Map to create a catalog of the information assets that reside in and outside the data lake. Live Data Map extracts metadata for each asset and indexes the assets in the catalog.

2. Operationalize the Informatica mappings created during the publication process to regularly load data with the new structure into the data lake.

   Optionally develop and run additional Informatica mappings to read data from enterprise systems and load the data to the data lake.

3. Secure the data in the data lake and monitor the usage of the data lake.