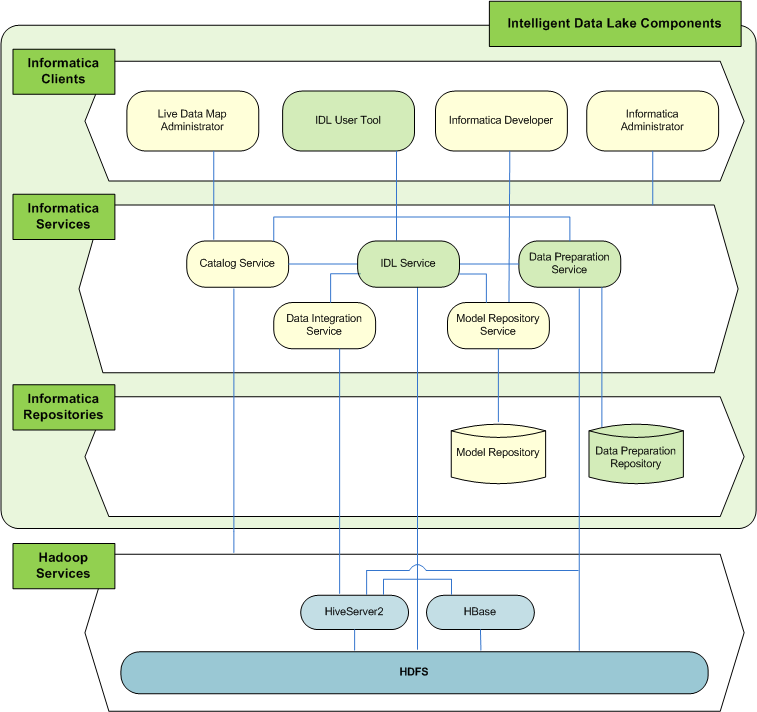

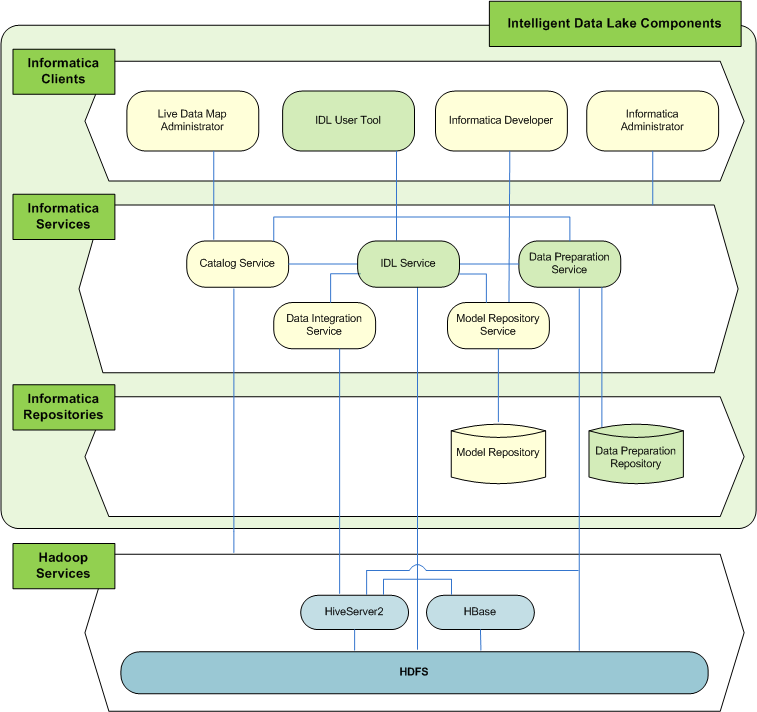

Architecture and Components

Intelligent Data Lake uses several components to search, discover, and prepare data.

The following image shows the components that Intelligent Data Lake uses and how they interact:

Note: You must install and configure Live Data Map before you install Intelligent Data Lake. Live Data Map requires additional clients, Informatica services, repositories, and Hadoop services. For more information about Live Data Map architecture, see the Live Data Map Administrator Guide.

Clients

Administrators and analysts use several clients to make data available for analysis in Intelligent Data Lake.

Intelligent Data Lake uses the following clients:

- Informatica Live Data Map Administrator

Administrators use Informatica Live Data Map Administrator to administer the resources, scanners, schedules, attributes, and connections that are used to create the catalog. The catalog represents an indexed inventory of all the information assets in an enterprise.

- Informatica Administrator

Administrators use Informatica Administrator (the Administrator tool) to manage the application services that Intelligent Data Lake requires. They also use the Administrator tool to administer the Informatica domain and its security, and to monitor the mappings that run during the upload and publishing processes.

- Intelligent Data Lake application

Analysts use the Intelligent Data Lake application to search, discover, and prepare data that resides in the data lake. Analysts combine, cleanse, transform, and structure the data to prepare it for analysis. When analysts finish preparing the data, they publish the transformed data back to the data lake to make it available to other analysts.

- Informatica Developer

Administrators use Informatica Developer (the Developer tool) to view the mappings that are created when analysts publish prepared data in the Intelligent Data Lake application. They can operationalize the mappings so that data is regularly written to the data lake.

Application Services

Intelligent Data Lake requires application services to complete operations. Use the Administrator tool to create and manage the application services.

Intelligent Data Lake requires the following application services:

- Intelligent Data Lake Service

The Intelligent Data Lake Service is an application service that runs the Intelligent Data Lake application in the Informatica domain. When an analyst publishes prepared data, the Intelligent Data Lake Service converts each recipe into a mapping.

When an analyst uploads data, the Intelligent Data Lake Service connects to the HDFS system in the Hadoop cluster to temporarily stage the data. When an analyst previews data, the Intelligent Data Lake Service connects to HiveServer2 in the Hadoop cluster to read from the Hive table.

As analysts complete actions in the Intelligent Data Lake application, the Intelligent Data Lake Service connects to HBase in the Hadoop cluster to store events that you can use to audit user activity.

- Data Preparation Service

The Data Preparation Service is an application service that manages data preparation within the Intelligent Data Lake application. When an analyst prepares data in a project, the Data Preparation Service connects to the Data Preparation repository to store worksheet metadata. The service connects to HiveServer2 in the Hadoop cluster to read sample data or all data from the Hive table, depending on the size of the data. The service connects to the HDFS system in the Hadoop cluster to store the sample data being prepared in the worksheet.

When you create the Intelligent Data Lake Service, you must associate it with a Data Preparation Service.

- Catalog Service

The Catalog Service is an application service that runs Live Data Map in the Informatica domain. The Catalog Service manages the catalog of information assets in the Hadoop cluster.

When an analyst searches for assets in the Intelligent Data Lake application, the Intelligent Data Lake Service connects to the Catalog Service to return search results from the metadata stored in the catalog.

When you create the Intelligent Data Lake Service, you must associate it with a Catalog Service.

- Model Repository Service

The Model Repository Service is an application service that manages the Model repository. When an analyst creates projects, the Intelligent Data Lake Service connects to the Model Repository Service to store the project metadata in the Model repository. When an analyst publishes prepared data, the Intelligent Data Lake Service connects to the Model Repository Service to store the converted mappings in the Model repository.

When you create the Intelligent Data Lake Service, you must associate it with a Model Repository Service.

- Data Integration Service

The Data Integration Service is an application service that performs data integration tasks for Intelligent Data Lake. When an analyst uploads data or publishes prepared data, the Intelligent Data Lake Service connects to the Data Integration Service to write the data to a Hive table in the Hadoop cluster.

When you create the Intelligent Data Lake Service, you must associate it with a Data Integration Service.
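The association requirements above can be summarized as a simple completeness check. The following Python sketch is illustrative only: the class, the field names, and the `missing_associations` helper are hypothetical and are not part of any Informatica API. It models just one rule from the text, namely that the Intelligent Data Lake Service must be associated with a Data Preparation Service, a Catalog Service, a Model Repository Service, and a Data Integration Service.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical model of the associations described above.
# These names are illustrative and do not correspond to an Informatica API.
REQUIRED_ASSOCIATIONS = (
    "data_preparation_service",
    "catalog_service",
    "model_repository_service",
    "data_integration_service",
)


@dataclass
class IntelligentDataLakeServiceConfig:
    name: str
    data_preparation_service: Optional[str] = None
    catalog_service: Optional[str] = None
    model_repository_service: Optional[str] = None
    data_integration_service: Optional[str] = None

    def missing_associations(self) -> List[str]:
        """Return the required associations that are not yet set."""
        return [f for f in REQUIRED_ASSOCIATIONS if not getattr(self, f)]


# Example: only two of the four required services are associated so far.
config = IntelligentDataLakeServiceConfig(
    name="IDL_Service",
    data_preparation_service="DPS",
    catalog_service="CS",
)
print(config.missing_associations())
```

In this sketch, an empty list from `missing_associations` would mean that all four associations named in this section are in place.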

Repositories

The Intelligent Data Lake Service connects to other application services in the Informatica domain that access data from repositories. The Intelligent Data Lake Service does not directly access any repositories.

Intelligent Data Lake requires the following repositories:

- Data Preparation repository

When an analyst prepares data in a project, the Data Preparation Service stores worksheet metadata in the Data Preparation repository.

- Model repository

When an analyst creates a project, the Intelligent Data Lake Service connects to the Model Repository Service to store the project metadata in the Model repository. When an analyst publishes prepared data, the Intelligent Data Lake Service converts each recipe to a mapping. The Intelligent Data Lake Service connects to the Model Repository Service to store the converted mappings in the Model repository.
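The indirection described in this section, where the Intelligent Data Lake Service reaches repositories only through other application services, can be pictured with a small Python sketch. Everything here is a hypothetical stand-in: the class names mirror the service names in the text, but the methods, the in-memory list, and the mapping naming scheme are assumptions made for illustration and do not reflect how the product is implemented.

```python
class ModelRepositoryService:
    """Hypothetical stand-in for the service that owns the Model repository."""

    def __init__(self):
        self._model_repository = []  # stands in for the Model repository

    def store(self, kind, name):
        self._model_repository.append((kind, name))

    def contents(self):
        return list(self._model_repository)


class IntelligentDataLakeService:
    """Holds a reference to the service, never to the repository itself."""

    def __init__(self, model_repository_service):
        self._mrs = model_repository_service

    def create_project(self, project_name):
        # Project metadata is stored through the Model Repository Service.
        self._mrs.store("project", project_name)

    def publish(self, recipe_name):
        # Per the text, each recipe is converted to a mapping before storage.
        mapping_name = f"mapping_for_{recipe_name}"  # hypothetical naming
        self._mrs.store("mapping", mapping_name)


mrs = ModelRepositoryService()
idl = IntelligentDataLakeService(mrs)
idl.create_project("sales_analysis")
idl.publish("clean_orders")
print(mrs.contents())
```

The design point is that `IntelligentDataLakeService` has no repository attribute of its own; every write goes through the service that manages the repository.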

Hadoop Services

Intelligent Data Lake connects to several Hadoop services on a Hadoop cluster to read from and write to Hive tables, to write events, and to store sample preparation data.

Intelligent Data Lake connects to the following services in the Hadoop cluster:

- HBase

As analysts complete actions in the Intelligent Data Lake application, the Intelligent Data Lake Service writes events to HBase. You can view the events to audit user activity.

- Hadoop Distributed File System (HDFS)

When an analyst uploads data to the data lake, the Intelligent Data Lake Service connects to the HDFS system to stage the data in HDFS files.

When an analyst prepares data, the Data Preparation Service connects to the HDFS system to store the sample data being prepared in worksheets in HDFS files.

- HiveServer2

When an analyst previews data, the Intelligent Data Lake Service connects to HiveServer2 and reads the first 100 rows from the Hive table.

When an analyst prepares data, the Data Preparation Service connects to HiveServer2. Depending on the size of the data, the Data Preparation Service reads sample data or all data from the Hive table and displays the data in the worksheet.

When an analyst uploads data, the Intelligent Data Lake Service connects to the Data Integration Service to read the temporary data staged in the HDFS system and write the data to a Hive table. When an analyst publishes prepared data, the Intelligent Data Lake Service connects to the Data Integration Service to run the converted mappings in the Hadoop environment. The Data Integration Service pushes the processing to nodes in the Hadoop cluster. The service applies the mapping to the data in the input source and writes the transformed data to a Hive table.
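The connections described in this section can be collected into a lookup table keyed by analyst action. The Python sketch below is only a reading aid: the dictionary layout, the action keys, and the `hadoop_targets` function are hypothetical, and only the relationships themselves come from the text above.

```python
# (component, target, purpose) for each analyst action, as described in the
# text. The structure and names are hypothetical; they are not a product API.
HADOOP_CONNECTIONS = {
    "upload": [
        ("Intelligent Data Lake Service", "HDFS", "stage the uploaded data temporarily"),
        ("Data Integration Service", "Hive table", "write the staged data"),
    ],
    "preview": [
        ("Intelligent Data Lake Service", "HiveServer2", "read the first 100 rows"),
    ],
    "prepare": [
        ("Data Preparation Service", "HiveServer2", "read sample data or all data"),
        ("Data Preparation Service", "HDFS", "store the worksheet sample data"),
    ],
    "publish": [
        ("Data Integration Service", "Hive table", "run the mapping and write the result"),
    ],
}

# Audit events are written as analysts complete actions in the application.
AUDIT_CONNECTION = ("Intelligent Data Lake Service", "HBase", "write an audit event")


def hadoop_targets(action):
    """Return the sorted, de-duplicated targets touched by an action."""
    return sorted({target for _, target, _ in HADOOP_CONNECTIONS[action]})


print(hadoop_targets("prepare"))
print(hadoop_targets("upload"))
```

A table like this makes it easier to see, for example, that previewing touches only HiveServer2, while preparing data involves both HiveServer2 and HDFS.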