Grid for Jobs that Run in Remote Mode

When a Data Integration Service grid runs mappings, profiles, and workflows, you can configure the service to run jobs in separate DTM processes on remote nodes. The nodes in the grid can have different combinations of roles.

A Data Integration Service grid uses the following components to run jobs in separate remote processes:

- Master service process

When you enable a Data Integration Service that runs on a grid, one service process starts on each node with the service role in the grid. The Data Integration Service designates one service process as the master service process. The master service process manages application deployments, logging, job requests, and the dispatch of mappings to worker service processes for optimization and compilation. The master service process also acts as a worker service process and can optimize and compile mappings.

- Worker service processes

The Data Integration Service designates the remaining service processes as worker service processes. When a worker service process starts, it registers itself with the master service process so that the master is aware of the worker. A worker service process optimizes and compiles mappings, and then generates a grid task. A grid task is a job request sent by the worker service process to the Service Manager on the master compute node.

- Service Manager on the master compute node

When you enable a Data Integration Service that runs on a grid, the Data Integration Service designates one node with the compute role as the master compute node.

The Service Manager on the master compute node performs the following functions to determine the optimal worker compute node to run the mapping:

- Communicates with the Resource Manager Service to manage the grid of available compute nodes. When the Service Manager on a node with the compute role starts, the Service Manager registers the node with the Resource Manager Service.

- Orchestrates worker service process requests and dispatches mappings to worker compute nodes.

The master compute node also acts as a worker compute node and can run mappings.

- DTM processes on worker compute nodes

The Data Integration Service designates the remaining nodes with the compute role as worker compute nodes. The Service Manager on a worker compute node runs mappings in separate DTM processes started within containers.

Supported Node Roles

When a Data Integration Service grid runs jobs in separate remote processes, the nodes in the grid can contain the service role only, the compute role only, or both the service and compute roles.

A Data Integration Service grid that runs jobs in separate remote processes can contain nodes with the following roles:

- Service role

A Data Integration Service process runs on each node with the service role. Service components within the Data Integration Service process run workflows and profiles, and perform mapping optimization and compilation.

- Compute role

DTM processes run on each node with the compute role. The DTM processes run deployed mappings, mappings run by Mapping tasks within a workflow, and mappings converted from a profile.

- Both service and compute roles

A Data Integration Service process and DTM processes run on each node with both the service and compute roles. At least one node with both service and compute roles is required to run ad hoc jobs, with the exception of profiles. Ad hoc jobs include mappings run from the Developer tool, as well as previews, scorecards, and drill-downs on profile results run from the Developer tool or Analyst tool. The Data Integration Service runs these job types in separate DTM processes on the local node.

In addition, nodes with both roles can complete all of the tasks that a node with the service role only or a node with the compute role only can complete. For example, a workflow can run on a node with the service role only or on a node with both the service and compute roles. A deployed mapping can run on a node with the compute role only or on a node with both the service and compute roles.

The following table lists the job types that run on nodes based on the node role:

| Job Type | Service Role | Compute Role | Service and Compute Roles |
|---|---|---|---|
| Perform mapping optimization and compilation. | Yes | - | Yes |
| Run deployed mappings. | - | Yes | Yes |
| Run workflows. | Yes | - | Yes |
| Run mappings included in workflow Mapping tasks. | - | Yes | Yes |
| Run profiles. | Yes | - | Yes |
| Run mappings converted from profiles. | - | Yes | Yes |
| Run ad hoc jobs, with the exception of profiles, from the Analyst tool or the Developer tool. | - | - | Yes |

Note: If you associate a Content Management Service with the Data Integration Service to run mappings that read reference data, each node in the grid must have both the service and compute roles.
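The role table above can be read as a lookup from job type to the role or roles that a node must have. The following Python sketch is only an illustration of that lookup: the `Role` flag, the job type keys, and the `can_run` and `jobs_for` helpers are hypothetical names chosen for this example and are not part of any Informatica API.

```python
from enum import Flag, auto


class Role(Flag):
    """Node roles as described in this section (names are illustrative)."""
    SERVICE = auto()
    COMPUTE = auto()


# Job type -> role(s) a node needs, transcribed from the table above.
# The dictionary keys are made-up labels, not product identifiers.
REQUIRED_ROLES = {
    "optimize_and_compile_mapping": Role.SERVICE,
    "run_deployed_mapping": Role.COMPUTE,
    "run_workflow": Role.SERVICE,
    "run_workflow_mapping_task": Role.COMPUTE,
    "run_profile": Role.SERVICE,
    "run_profile_mapping": Role.COMPUTE,
    "run_ad_hoc_job": Role.SERVICE | Role.COMPUTE,  # needs both roles
}


def can_run(node_roles: Role, job_type: str) -> bool:
    """Return True if a node with node_roles has every role the job needs."""
    required = REQUIRED_ROLES[job_type]
    return (node_roles & required) == required


def jobs_for(node_roles: Role) -> list:
    """List the job types that a node with node_roles can take part in."""
    return sorted(job for job in REQUIRED_ROLES if can_run(node_roles, job))
```

For example, `jobs_for(Role.SERVICE | Role.COMPUTE)` returns every job type, which matches the last column of the table.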

Job Types

When a Data Integration Service grid runs jobs in separate remote processes, how the Data Integration Service runs each job depends on the job type.

The Data Integration Service balances the workload across the nodes in the grid based on the following job types:

- Workflows

When you run a workflow instance, the master service process runs the workflow instance and non-mapping tasks. The master service process uses round robin to dispatch each mapping within a Mapping task to a worker service process. The LDTM component of the worker service process optimizes and compiles the mapping. The worker service process then communicates with the master compute node to dispatch the compiled mapping to a separate DTM process running on a worker compute node.

- Deployed mappings

When you run a deployed mapping, the master service process uses round robin to dispatch each mapping to a worker service process. The LDTM component of the worker service process optimizes and compiles the mapping. The worker service process then communicates with the master compute node to dispatch the compiled mapping to a separate DTM process running on a worker compute node.

- Profiles

When you run a profile, the master service process converts the profiling job into multiple mapping jobs based on the advanced profiling properties of the Data Integration Service. The master service process then distributes the mappings across the worker service processes. The LDTM component of each worker service process optimizes and compiles its mapping. The worker service process then communicates with the master compute node to dispatch the compiled mapping to a separate DTM process running on a worker compute node.

- Ad hoc jobs, with the exception of profiles

When you run an ad hoc job, with the exception of profiles, the Data Integration Service uses round robin to dispatch the first request directly to a worker service process that runs on a node with both the service and compute roles. The worker service process runs the job in a separate DTM process on the local node. To ensure faster throughput, the Data Integration Service bypasses the master service process. When you run additional ad hoc jobs from the same login, the Data Integration Service dispatches the requests to the same worker service process.
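The dispatch behavior described above combines two ideas: round robin across worker service processes, and login affinity for ad hoc jobs. The following Python sketch is a toy model of those two rules only. The `GridDispatcher` class and its method names are hypothetical, and the real service is assumed to account for many factors that this sketch ignores, such as node availability.

```python
from itertools import cycle


class GridDispatcher:
    """Toy model of the dispatch rules described above; not product code."""

    def __init__(self, service_nodes, dual_role_nodes):
        # Worker service processes that can optimize and compile mappings.
        self._mapping_workers = cycle(service_nodes)
        # Worker service processes on nodes with both roles, which can run
        # ad hoc jobs in a DTM process on the local node.
        self._has_dual_role_nodes = bool(dual_role_nodes)
        self._ad_hoc_workers = cycle(dual_role_nodes)
        # Remembers which worker served the first ad hoc request per login.
        self._login_affinity = {}

    def dispatch_mapping(self):
        """Pick the next worker service process in round-robin order."""
        return next(self._mapping_workers)

    def dispatch_ad_hoc(self, login):
        """First request per login is round robin; later ones stick."""
        if not self._has_dual_role_nodes:
            raise RuntimeError("no node has both the service and compute roles")
        if login not in self._login_affinity:
            self._login_affinity[login] = next(self._ad_hoc_workers)
        return self._login_affinity[login]
```

In this model, repeated calls to `dispatch_ad_hoc` with the same login always return the same node, while mappings rotate through every node with the service role.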

Note: You cannot run SQL queries or web service requests on a Data Integration Service grid that is configured to run jobs in separate remote processes.

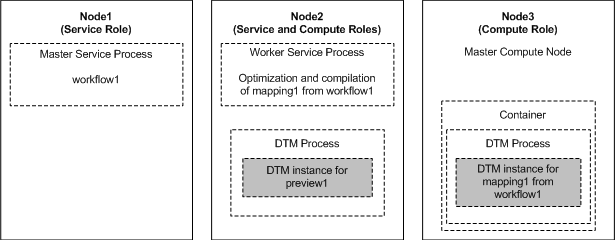

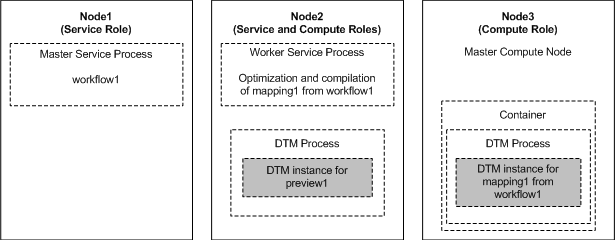

Example Grid that Runs Jobs in Remote Mode

In this example, the grid contains three nodes. Node1 has the service role only. Node2 has both the service and compute roles. Node3 has the compute role only. The Data Integration Service is configured to run jobs in separate remote processes.

The following image shows an example Data Integration Service grid configured to run mapping, profile, workflow, and ad hoc jobs in separate remote processes:

The Data Integration Service manages requests and runs jobs on the following nodes in the grid:

- On Node1, the master service process runs the workflow instance and non-mapping tasks. The master service process dispatches a mapping included in a Mapping task from workflow1 to the worker service process on Node2. The master service process also acts as a worker service process and can optimize and compile mappings. Profile jobs can also run on Node1.

- On Node2, the worker service process optimizes and compiles the mapping. The worker service process then communicates with the master compute node on Node3 to dispatch the compiled mapping to a worker compute node. The Data Integration Service dispatches a preview request directly to the worker service process on Node2. The service process creates a DTM instance within a separate DTM process on Node2 to run the preview job. Node2 also serves as a worker compute node and can run compiled mappings.

- On Node3, the Service Manager on the master compute node orchestrates requests to run mappings. The master compute node also acts as a worker compute node and runs the mapping from workflow1 in a separate DTM process started within a container.

Rules and Guidelines for Grids that Run Jobs in Remote Mode

Consider the following rules and guidelines when you configure a Data Integration Service grid to run jobs in separate remote processes:

- The grid must contain at least one node with both the service and compute roles to run an ad hoc job, with the exception of profiles. The Data Integration Service runs these job types in a separate DTM process on the local node. Add additional nodes with both the service and compute roles so that these job types can be distributed to service processes running on other nodes in the grid.

- To support failover for the Data Integration Service, the grid must contain at least two nodes that have the service role.

- If you associate a Content Management Service with the Data Integration Service to run mappings that read reference data, each node in the grid must have both the service and compute roles.

- The grid cannot include two nodes that are defined on the same host machine.

- Informatica does not recommend assigning multiple Data Integration Services to the same grid or assigning one node to multiple Data Integration Service grids.

If a worker compute node is shared across multiple grids, mappings dispatched to the node might fail due to an over-allocation of the node's resources. If a master compute node is shared across multiple grids, the log events for the master compute node are also shared and might become difficult to troubleshoot.
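The rules above can be checked against a planned grid before you create it. The following Python sketch assumes a hypothetical `Node` record and `check_grid` helper invented for this illustration. It covers only the rules that can be evaluated from node roles and host names, and it does not query a domain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Planned grid node (illustrative record, not a product object)."""
    name: str
    host: str
    service: bool
    compute: bool


def check_grid(nodes, uses_content_management=False):
    """Return a list of rule violations for a planned remote-mode grid."""
    problems = []
    both = [n for n in nodes if n.service and n.compute]
    if not both:
        problems.append(
            "no node has both roles: ad hoc jobs other than profiles cannot run")
    if sum(1 for n in nodes if n.service) < 2:
        problems.append(
            "fewer than two nodes have the service role: no failover")
    if uses_content_management and len(both) != len(nodes):
        problems.append(
            "Content Management Service in use: every node needs both roles")
    hosts = [n.host for n in nodes]
    if len(set(hosts)) != len(hosts):
        problems.append("two or more nodes are defined on the same host machine")
    return problems


# The three-node example grid from this section, with made-up host names.
example_grid = [
    Node("Node1", "host-a", service=True, compute=False),
    Node("Node2", "host-b", service=True, compute=True),
    Node("Node3", "host-c", service=False, compute=True),
]
```

Calling `check_grid(example_grid)` returns an empty list, while passing `uses_content_management=True` reports that Node1 and Node3 would also need both roles.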

Recycle the Service When Jobs Run in Remote Mode

You must recycle the Data Integration Service if you change a service property or if you update the role for a node assigned to the service or to the grid on which the service runs. You must recycle the service for additional reasons when the service is on a grid and is configured to run jobs in separate remote processes.

When a Data Integration Service grid runs jobs in separate remote processes, recycle the Data Integration Service after you complete the following actions:

- Override compute node attributes for a node assigned to the grid.

- Add or remove a node from the grid.

- Shut down or restart a node assigned to the grid.

To recycle the Data Integration Service, select the service in the Domain Navigator and click Recycle the Service.

Configuring a Grid that Runs Jobs in Remote Mode

When a Data Integration Service grid runs mappings, profiles, and workflows, you can configure the Data Integration Service to run jobs in separate DTM processes on remote nodes.

To configure a Data Integration Service grid to run mappings, profiles, and workflows in separate remote processes, perform the following tasks:

1. Update the roles for the nodes in the grid.

2. Create a grid for mappings, profiles, and workflows that run in separate remote processes.

3. Assign the Data Integration Service to the grid.

4. Configure the Data Integration Service to run jobs in separate remote processes.

5. Enable the Resource Manager Service.

6. Configure a shared log directory.

7. Optionally, configure properties for each Data Integration Service process that runs on a node with the service role.

8. Optionally, configure compute properties for each DTM instance that can run on a node with the compute role.

9. Recycle the Data Integration Service.

Step 1. Update Node Roles

By default, each node has both the service and compute roles. You can update the roles of each node that you plan to add to the grid. Enable only the service role to dedicate a node to running the Data Integration Service process. Enable only the compute role to dedicate a node to running mappings.

At least one node in the grid must have both the service and compute roles to run ad hoc jobs, with the exception of profiles.

Note: Before you can disable the service role on a node, you must shut down all application service processes running on the node and remove the node as a primary or back-up node for any application service. You cannot disable the service role on a gateway node.

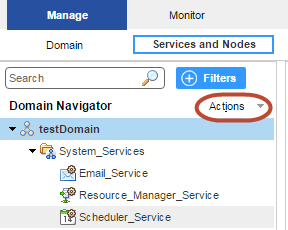

1. In the Administrator tool, click the Manage tab > Services and Nodes view.

2. In the Domain Navigator, select a node that you plan to add to the grid.

3. In the Properties view, click Edit for the general properties.

The Edit General Properties dialog box appears.

4. Select or clear the service and compute roles to update the node role.

5. Click OK.

6. If you disabled the compute role, the Disable Compute Role dialog box appears. Perform the following steps:

a. Select one of the following modes to disable the compute role:

- Complete. Allows jobs to run to completion before disabling the role.

- Stop. Stops all jobs and then disables the role.

- Abort. Tries to stop all jobs before aborting them and disabling the role.

b. Click OK.

7. Repeat the steps to update the node role for each node that you plan to add to the grid.

Step 2. Create a Grid

To create a grid, create the grid object and assign nodes to the grid. You can assign a node to one grid when the Data Integration Service is configured to run jobs in separate remote processes.

When a Data Integration Service grid runs mappings, profiles, and workflows in separate remote processes, the grid can include the following nodes:

- Any number of nodes with the service role only.

- Any number of nodes with the compute role only.

- At least one node with both the service and compute roles to run previews and to run ad hoc jobs, with the exception of profiles.

If you associate a Content Management Service with the Data Integration Service to run mappings that read reference data, each node in the grid must have both the service and compute roles.

1. In the Administrator tool, click the Manage tab.

2. Click the Services and Nodes view.

3. In the Domain Navigator, select the domain.

4. On the Navigator Actions menu, click New > Grid.

The Create Grid dialog box appears.

5. Enter the following properties:

| Property | Description |
|---|---|
| Name | Name of the grid. The name is not case sensitive and must be unique within the domain. It cannot exceed 128 characters or begin with @. It also cannot contain spaces or the following special characters: ` ~ % ^ * + = { } \ ; : ' " / ? . , < > \| ! ( ) ] [ |
| Description | Description of the grid. The description cannot exceed 765 characters. |
| Nodes | Select nodes to assign to the grid. |
| Path | Location in the Navigator, such as: DomainName/ProductionGrids |

6. Click OK.

Step 3. Assign the Data Integration Service to the Grid

Assign the Data Integration Service to run on the grid.

1. On the Services and Nodes view, select the Data Integration Service in the Domain Navigator.

2. Select the Properties tab.

3. In the General Properties section, click Edit.

The Edit General Properties dialog box appears.

4. Next to Assign, select Grid.

5. Select the grid to assign to the Data Integration Service.

6. Click OK.

Step 4. Run Jobs in Separate Remote Processes

Configure the Data Integration Service to run jobs in separate remote processes.

1. On the Services and Nodes view, select the Data Integration Service in the Domain Navigator.

2. Select the Properties tab.

3. In the Execution Options section, click Edit.

The Edit Execution Options dialog box appears.

4. For the Launch Job Options property, select In separate remote processes.

5. Click OK.

Step 5. Enable the Resource Manager Service

By default, the Resource Manager Service is disabled. You must enable the Resource Manager Service so that the Data Integration Service grid can run jobs in separate remote processes.

1. On the Services and Nodes view, expand the System_Services folder.

2. Select the Resource Manager Service in the Domain Navigator, and click Recycle the Service.

Step 6. Configure a Shared Log Directory

When the Data Integration Service runs on a grid, a Data Integration Service process can run on each node with the service role. Configure each service process to use the same shared directory for log files. When you configure a shared log directory, you ensure that if the master service process fails over to another node, the new master service process can access previous log files.

1. On the Services and Nodes view, select the Data Integration Service in the Domain Navigator.

2. Select the Processes tab.

3. Select a node to configure the shared log directory for that node.

4. In the Logging Options section, click Edit.

The Edit Logging Options dialog box appears.

5. Enter the location of the shared log directory.

6. Click OK.

7. Repeat the steps for each node listed in the Processes tab to configure each service process with identical absolute paths to the shared directories.
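Because every service process must point to the same absolute path, it can help to compare the values you plan to enter before you edit each node. The following Python sketch is a minimal check under stated assumptions: the `check_shared_log_dirs` helper and the node names are hypothetical, the paths are assumed to be POSIX-style, and the function only compares strings without touching the file system.

```python
from pathlib import PurePosixPath


def check_shared_log_dirs(log_dir_by_node):
    """Return problems found in a {node name: log directory} mapping."""
    problems = []
    for node, path in log_dir_by_node.items():
        # Each service process should use an absolute path.
        if not PurePosixPath(path).is_absolute():
            problems.append(f"{node}: '{path}' is not an absolute path")
    # All service processes should use the identical path.
    if len(set(log_dir_by_node.values())) > 1:
        problems.append("service processes do not all use the same log directory")
    return problems


# Hypothetical values for two nodes with the service role.
planned = {"Node1": "/shared/infa/logs", "Node2": "/shared/infa/logs"}
```

With the `planned` values shown, `check_shared_log_dirs(planned)` returns an empty list.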

Step 7. Optionally Configure Process Properties

Optionally, configure the Data Integration Service process properties for each node with the service role in the grid. You can configure the service process properties differently for each node.

To configure properties for the Data Integration Service processes, click the Processes view. Select a node with the service role to configure properties specific to that node.

Step 8. Optionally Configure Compute Properties

You can configure the compute properties that the execution Data Transformation Manager (DTM) uses when it runs jobs. When the Data Integration Service runs on a grid, DTM processes run jobs on each node with the compute role. You can configure the compute properties differently for each node.

To configure compute properties for the DTM, click the Compute view. Select a node with the compute role to configure properties specific to DTM processes that run on the node. For example, you can configure a different temporary directory or different environment variable values for each node.

Step 9. Recycle the Data Integration Service

After you change Data Integration Service properties, you must recycle the service for the changed properties to take effect.

To recycle the service, select the service in the Domain Navigator and click Recycle the Service.

Logs for Jobs that Run in Remote Mode

When a Data Integration Service grid runs a mapping in a separate remote process, the worker service process that optimizes and compiles the mapping writes log events to one log file. The DTM process that runs the mapping writes log events to another log file. When you access the mapping log, the Data Integration Service consolidates the two files into a single log file.

The worker service process writes to a log file in the shared log directory configured for each Data Integration Service process. The DTM process writes to a temporary log file in the log directory configured for the worker compute node. When the DTM process finishes running the mapping, it sends the log file to the master Data Integration Service process. The master service process writes the DTM log file to the shared log directory configured for the Data Integration Service processes. The DTM process then removes the temporary DTM log file from the worker compute node.

When you access the mapping log using the Administrator tool or the infacmd ms getRequestLog command, the Data Integration Service consolidates the two files into a single log file.

The consolidated log file contains the following types of messages:

- LDTM messages written by the worker service process on the service node

The first section of the mapping log contains LDTM messages about mapping optimization and compilation and about generating the grid task written by the worker service process on the service node.

The grid task messages include the following message that indicates the location of the log file written by the DTM process on the worker compute node:

INFO: [GCL_5] The grid task [gtid-1443479776986-1-79777626-99] cluster logs can be found at [./1443479776986/taskletlogs/gtid-1443479776986-1-79777626-99].

The listed directory is a subdirectory of the following default log directory configured for the worker compute node:

<Informatica installation directory>/logs/<node name>/dtmLogs/

- DTM messages written by the DTM process on the compute node

The second section of the mapping log contains messages about mapping execution written by the DTM process on the worker compute node.

The DTM section of the log begins with the following lines which indicate the name of the worker compute node that ran the mapping:

###

### <MyWorkerComputeNodeName>

###

### Start Grid Task [gtid-1443479776986-1-79777626-99] Segment [s0] Tasklet [t-0] Attempt [1]

The DTM section of the log concludes with the following line:

### End Grid Task [gtid-1443479776986-1-79777626-99] Segment [s0] Tasklet [t-0] Attempt [1]
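If you need to separate the two sections of a consolidated mapping log programmatically, the marker lines shown above give you something to match on. The following Python sketch assumes that the log follows exactly the layout described in this section: a banner of `###` lines that names the worker compute node, followed by the `### Start Grid Task` and `### End Grid Task` markers. The `split_mapping_log` function, the sample node name, and the sample message are hypothetical and serve only as an illustration.

```python
import re

# Marker lines as shown in this section.
START = re.compile(r"^### Start Grid Task \[([^\]]+)\]")
END = re.compile(r"^### End Grid Task \[")
NODE = re.compile(r"^### (\S.*)$")


def split_mapping_log(text):
    """Split a consolidated mapping log into LDTM and DTM sections."""
    lines = text.splitlines()
    start_idx = next((i for i, ln in enumerate(lines) if START.match(ln)), None)
    if start_idx is None:
        # No DTM section found: treat everything as LDTM messages.
        return {"ldtm": lines, "dtm": [], "node": None, "task": None}

    # Walk back over the '###' banner (and blank lines) before the marker
    # to find the name of the worker compute node.
    banner_idx = start_idx
    node = None
    while banner_idx > 0:
        previous = lines[banner_idx - 1]
        if previous.strip() and not previous.startswith("###"):
            break
        banner_idx -= 1
        match = NODE.match(previous)
        if match and node is None:
            node = match.group(1).strip()

    end_idx = next(
        (i for i in range(start_idx + 1, len(lines)) if END.match(lines[i])),
        len(lines),
    )
    return {
        "ldtm": lines[:banner_idx],
        "dtm": lines[start_idx + 1:end_idx],
        "node": node,
        "task": START.match(lines[start_idx]).group(1),
    }


# Sample built from the marker lines above; the node name and the
# "Mapping run started." line are made up for the example.
sample = "\n".join([
    "INFO: [GCL_5] The grid task [gtid-1443479776986-1-79777626-99] cluster logs "
    "can be found at [./1443479776986/taskletlogs/gtid-1443479776986-1-79777626-99].",
    "###",
    "### node3",
    "###",
    "### Start Grid Task [gtid-1443479776986-1-79777626-99] Segment [s0] Tasklet [t-0] Attempt [1]",
    "Mapping run started.",
    "### End Grid Task [gtid-1443479776986-1-79777626-99] Segment [s0] Tasklet [t-0] Attempt [1]",
])
parts = split_mapping_log(sample)
```

Here `parts["ldtm"]` holds the grid task message written by the worker service process, and `parts["dtm"]` holds the lines between the start and end markers.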

Override Compute Node Attributes to Increase Concurrent Jobs

You can override compute node attributes to increase the number of concurrent jobs that run on the node. You can override the maximum number of cores and the maximum amount of memory that the Resource Manager Service can allocate for jobs that run on the compute node. The default values are the actual number of cores and memory available on the machine.

When the Data Integration Service runs jobs in separate remote processes, by default a machine that represents a compute node requires at least five cores and 2.5 GB of memory to initialize a container to start a DTM process. If any compute node assigned to the grid has fewer than five cores, then that number is used as the minimum number of cores required to initialize a container. For example, if a compute node assigned to the grid has three cores, then each compute node in that grid requires at least three cores and 2.5 GB of memory to initialize a container.

You might want to override compute node attributes to increase the number of concurrent jobs when the following conditions are true:

- You run long-running jobs on the grid.

- The Data Integration Service cannot reuse DTM processes because you run jobs from different deployed applications.

- Job concurrency is more important than the job execution time.

For example, you have configured a Data Integration Service grid that contains a single compute node. You want to concurrently run two mappings from different applications. Because the mappings are in different applications, the Data Integration Service runs the mappings in separate DTM processes, which requires two containers. The machine that represents the compute node has four cores. Only one container can be initialized, and so the two mappings cannot run concurrently. You can override the compute node attributes to specify that the Resource Manager Service can allocate eight cores for jobs that run on the compute node. Then, two DTM processes can run at the same time and the two mappings can run concurrently.
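The arithmetic in this example can be sketched in a few lines of Python. This is a simplified model, not the Resource Manager Service algorithm: it assumes that each container reserves exactly the minimum number of cores and exactly 2.5 GB of memory, and that 2.5 GB equals 2560 MB. The function names and the 16384 MB memory figure are hypothetical values chosen for the illustration.

```python
DEFAULT_MIN_CORES = 5        # default cores required to initialize a container
MIN_CONTAINER_MEM_MB = 2560  # 2.5 GB, assuming 1 GB = 1024 MB


def min_cores_per_container(grid_node_cores):
    """Minimum cores per container, given the actual cores of every compute node.

    If any node in the grid has fewer than five cores, that smaller number
    becomes the minimum for the whole grid.
    """
    return min(DEFAULT_MIN_CORES, min(grid_node_cores))


def max_containers(max_cores, max_mem_mb, grid_node_cores):
    """Estimate how many containers fit within the allocatable resources."""
    per_container = min_cores_per_container(grid_node_cores)
    return min(max_cores // per_container, max_mem_mb // MIN_CONTAINER_MEM_MB)


# Single compute node with four actual cores; the memory value is assumed.
before_override = max_containers(4, 16384, [4])
after_override = max_containers(8, 16384, [4])
```

Under these assumptions, the node supports one container with the default of four cores and two containers after the override to eight cores, which matches the example above.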

Use caution when you override compute node attributes. Specify values that are close to the actual resources available on the machine so that you do not overload the machine. Configure the values such that the memory requirements for the total number of concurrent mappings do not exceed the actual resources. A mapping that runs in one thread requires one core. A mapping can use the amount of memory configured in the Maximum Memory Per Request property for the Data Integration Service modules.

To override compute node attributes, run the infacmd rms SetComputeNodeAttributes command for a specified node.

You can override the following options:

| Option | Argument | Description |
|---|---|---|
| -MaxCores -mc | max_number_of_cores_to_allocate | Optional. Maximum number of cores that the Resource Manager Service can allocate for jobs that run on the compute node. A compute node requires at least five available cores to initialize a container to start a DTM process. If any compute node assigned to the grid has fewer than five cores, then that number is used as the minimum number of cores required to initialize a container. By default, the maximum number of cores is the actual number of cores available on the machine. |
| -MaxMem -mm | max_memory_in_mb_to_allocate | Optional. Maximum amount of memory in megabytes that the Resource Manager Service can allocate for jobs that run on the compute node. A compute node requires at least 2.5 GB of memory to initialize a container to start a DTM process. By default, the maximum memory is the actual memory available on the machine. |

After you override compute node attributes, you must recycle the Data Integration Service for the changes to take effect. To reset an option to its default value, specify -1 as the value.
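If you script the override, you can assemble the command arguments before you pass them to a process launcher. The following Python sketch builds the argument list only and does not run anything. The `-mc` and `-mm` options come from the table above. Everything else is an assumption: the helper name is hypothetical, the `infacmd` executable name might differ on your platform, and the `-dn` and `-nn` connection options in the usage line are placeholders that you should verify against the command reference for your version.

```python
def build_set_compute_node_attributes(connection_args, max_cores=None, max_mem_mb=None):
    """Build an argument list for infacmd rms SetComputeNodeAttributes.

    connection_args: caller-supplied domain, node, and login options
                     (not validated here).
    max_cores, max_mem_mb: values for -mc and -mm; pass -1 to reset an
                     option to its default value, or None to omit it.
    """
    args = ["infacmd", "rms", "SetComputeNodeAttributes", *connection_args]
    if max_cores is not None:
        args += ["-mc", str(max_cores)]
    if max_mem_mb is not None:
        args += ["-mm", str(max_mem_mb)]
    return args


# Assumed connection options and names, shown for illustration only.
override = build_set_compute_node_attributes(["-dn", "MyDomain", "-nn", "Node3"], max_cores=8)
reset = build_set_compute_node_attributes(["-dn", "MyDomain", "-nn", "Node3"], max_cores=-1, max_mem_mb=-1)
```

Remember that the override takes effect only after you recycle the Data Integration Service.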