Property | Description |

|---|---|

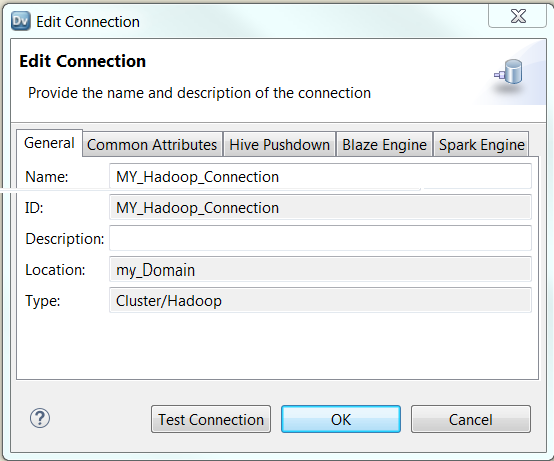

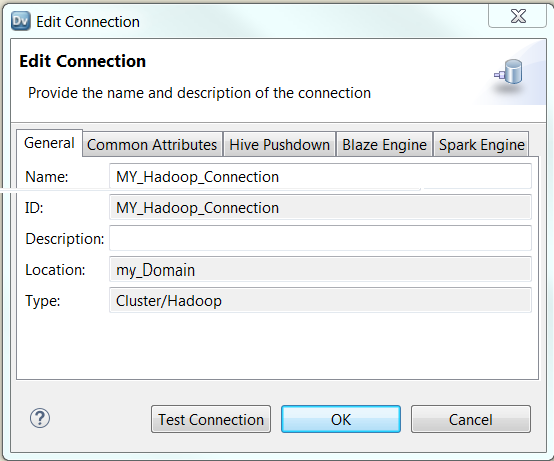

Name | The name of the connection. The name is not case sensitive and must be unique within the domain. You can change this property after you create the connection. The name cannot exceed 128 characters, contain spaces, or contain the following special characters: ~ ` ! $ % ^ & * ( ) - + = { [ } ] | \ : ; " ' < , > . ? / |

ID | String that the Data Integration Service uses to identify the connection. The ID is not case sensitive. It must be 255 characters or less and must be unique in the domain. You cannot change this property after you create the connection. Default value is the connection name. |

Description | The description of the connection. Enter a string that you can use to identify the connection. The description cannot exceed 4,000 characters. |

Location | The domain where you want to create the connection. Select the domain name. |

Type | The connection type. Select Hadoop. |
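
The naming rules for the Name property can be checked before a connection is created. The following is a minimal sketch in Python, not part of the product: the function name `connection_name_problems` is hypothetical, and it encodes only the length, space, and special-character rules stated in the table above.

```python
# Characters the Name property must not contain, as listed in the table.
FORBIDDEN_CHARACTERS = set("~`!$%^&*()-+={[}]|\\:;\"'<,>.?/")
MAX_NAME_LENGTH = 128


def connection_name_problems(name: str) -> list:
    """Return a list of rule violations; an empty list means the name passes."""
    problems = []
    if len(name) > MAX_NAME_LENGTH:
        problems.append(f"name has {len(name)} characters, limit is {MAX_NAME_LENGTH}")
    if " " in name:
        problems.append("name contains spaces")
    bad = sorted(set(name) & FORBIDDEN_CHARACTERS)
    if bad:
        problems.append("name contains special characters: " + " ".join(bad))
    return problems


if __name__ == "__main__":
    print(connection_name_problems("hadoop_dev"))  # prints []
    print(connection_name_problems("my conn!"))
```

The check does not cover uniqueness within the domain, which only the domain itself can confirm.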

| Property | Description |
|---|---|
| Resource Manager Address | The service within Hadoop that submits requests for resources or spawns YARN applications. Use the following format: `<hostname>:<port>`. For example, enter: `myhostname:8032`. You can also get the Resource Manager Address property from yarn-site.xml, located in the following directory on the Hadoop cluster: `/etc/hadoop/conf/`. The Resource Manager Address appears as the following property in yarn-site.xml: `<property> <name>yarn.resourcemanager.address</name> <value>hostname:port</value> <description>The address of the applications manager interface in the Resource Manager.</description> </property>`. Optionally, if the yarn.resourcemanager.address property is not configured in yarn-site.xml, you can find the host name from the yarn.resourcemanager.hostname or yarn.resourcemanager.scheduler.address properties in yarn-site.xml. You can then configure the Resource Manager Address in the Hadoop connection with the following value: `hostname:8032` |
| Default File System URI | The URI to access the default Hadoop Distributed File System. Use the following connection URI: `hdfs://<node name>:<port>`. For example, enter: `hdfs://myhostname:8020/`. You can also get the Default File System URI property from core-site.xml, located in the following directory on the Hadoop cluster: `/etc/hadoop/conf/`. Use the value from the fs.defaultFS property found in core-site.xml. For example, use the following value: `<property> <name>fs.defaultFS</name> <value>hdfs://localhost:8020</value> </property>`. If the Hadoop cluster runs MapR, use the following URI to access the MapR File system: `maprfs:///`. The default file system for Azure HDInsight can be Windows Azure Storage Blob (WASB) or Azure Data Lake Store (ADLS). If the cluster uses WASB storage, use the following string to specify the URI: `wasb://<container_name>@<account_name>.blob.core.windows.net/<path>`. Note: `<container_name>` is optional. Example: `wasb://infabdmoffering1storage.blob.core.windows.net/infabdmoffering1cluster/mr-history`. If the cluster uses ADLS storage, use the following format to specify the URI: `adl://home`. The following is the fs.defaultFS property as it appears in hdfs-site.xml: `<property> <name>fs.defaultFS</name> <value>adl://home</value> </property>` |
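
The lookup described for the Resource Manager Address can be sketched in Python as follows. This is an illustration under stated assumptions, not an Informatica utility: the function names `hadoop_properties` and `resource_manager_address` are hypothetical, the sample XML is made up for the example, and the fallback to port 8032 follows the guidance in the table above.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def hadoop_properties(xml_text: str) -> dict:
    """Collect <name>/<value> pairs from the text of a Hadoop *-site.xml file."""
    props = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def resource_manager_address(props: dict) -> Optional[str]:
    """Prefer yarn.resourcemanager.address; otherwise derive <hostname>:8032."""
    address = props.get("yarn.resourcemanager.address")
    if address:
        return address
    host = props.get("yarn.resourcemanager.hostname")
    if not host:
        # Fall back to the host part of the scheduler address, if present.
        scheduler = props.get("yarn.resourcemanager.scheduler.address", "")
        host = scheduler.split(":")[0]
    return f"{host}:8032" if host else None


# Made-up sample content; a real file is read from the cluster's conf directory.
SAMPLE_YARN_SITE = """<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>myhostname</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(resource_manager_address(hadoop_properties(SAMPLE_YARN_SITE)))
```

The same `hadoop_properties` helper can read fs.defaultFS from the text of core-site.xml, because the site files share the `<property>` layout shown in the table.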

| Property | Description |
|---|---|
| Impersonation User Name | User name of the user that the Data Integration Service impersonates to run mappings on a Hadoop cluster. If the Hadoop cluster uses Kerberos authentication, the principal name for the JDBC connection string and the user name must be the same. Note: You must use user impersonation for the Hadoop connection if the Hadoop cluster uses Kerberos authentication. If the Hadoop cluster does not use Kerberos authentication, the user name depends on the behavior of the JDBC driver. If you do not specify a user name, the Hadoop cluster authenticates jobs based on the operating system profile user name of the machine that runs the Data Integration Service. |
| Temporary Table Compression Codec | Hadoop compression library for a compression codec class name. |
| Codec Class Name | Codec class name that enables data compression and improves performance on temporary staging tables. |
| Hadoop Connection Custom Properties | Custom properties that are unique to the Hadoop connection. You can specify multiple properties. Use the following format: `<property1>=<value>`. To specify multiple properties, use `&:` as the property separator. Use custom properties only at the request of Informatica Global Customer Support. |
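
The custom property format can be illustrated with a short Python sketch. The helper name `format_custom_properties` and the property names in the example are hypothetical placeholders; only the `<property1>=<value>` form and the `&:` separator come from the table above.

```python
SEPARATOR = "&:"


def format_custom_properties(properties: dict) -> str:
    """Join name-value pairs into one string, using &: between pairs."""
    pairs = []
    for name, value in properties.items():
        text = f"{name}={value}"
        if SEPARATOR in text:
            # A pair that contains the separator could not be split back apart.
            raise ValueError(f"pair must not contain {SEPARATOR!r}: {text!r}")
        pairs.append(text)
    return SEPARATOR.join(pairs)


if __name__ == "__main__":
    # Placeholder names and values, for illustration only.
    print(format_custom_properties({"property1": "value1", "property2": "value2"}))
    # prints property1=value1&:property2=value2
```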

| Property | Description |
|---|---|
| Environment SQL | SQL commands to set the Hadoop environment. The Data Integration Service executes the environment SQL at the beginning of each Hive script generated in a Hive execution plan. For example, the following environment SQL sets two Hive properties: `set hive.execution.engine='tez';set hive.exec.dynamic.partition.mode='nonstrict';` |
| Database Name | Namespace for tables. Use the name default for tables that do not have a specified database name. |
| Hive Warehouse Directory on HDFS | The absolute HDFS file path of the default database for the warehouse that is local to the cluster. For example, the following file path specifies a local warehouse: `/user/hive/warehouse`. For Cloudera CDH, if the Metastore Execution Mode is remote, then the file path must match the file path specified by the Hive Metastore Service on the Hadoop cluster. You can get the value for the Hive Warehouse Directory on HDFS from the hive.metastore.warehouse.dir property in hive-site.xml, located in the following directory on the Hadoop cluster: `/etc/hadoop/conf/`. For example, use the following value: `<property> <name>hive.metastore.warehouse.dir</name> <value>/usr/hive/warehouse</value> <description>location of the warehouse directory</description> </property>`. For MapR, hive-site.xml is located in the following directory: `/opt/mapr/hive/<hive version>/conf` |

| Property | Description |
|---|---|
| Metastore Execution Mode | Controls whether to connect to a remote metastore or a local metastore. By default, local is selected. For a local metastore, you must specify the Metastore Database URI, Metastore Database Driver, Username, and Password. For a remote metastore, you must specify only the Remote Metastore URI. You can get the value for the Metastore Execution Mode from hive-site.xml. The Metastore Execution Mode appears as the following property in hive-site.xml: `<property> <name>hive.metastore.local</name> <value>true</value> </property>`. Note: The hive.metastore.local property is deprecated in hive-site.xml for Hive server versions 0.9 and above. If the hive.metastore.local property does not exist but the hive.metastore.uris property exists, and you know that the Hive server has started, you can set the connection to a remote metastore. |
| Metastore Database URI | The JDBC connection URI used to access the data store in a local metastore setup. Use the following connection URI: `jdbc:<datastore type>://<node name>:<port>/<database name>`. For example, the following URI specifies a local metastore that uses MySQL as a data store: `jdbc:mysql://hostname23:3306/metastore`. You can get the value for the Metastore Database URI from hive-site.xml. The Metastore Database URI appears as the following property in hive-site.xml: `<property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://MYHOST/metastore</value> </property>` |
| Metastore Database Driver | Driver class name for the JDBC data store. For example, the following class name specifies a MySQL driver: `com.mysql.jdbc.Driver`. You can get the value for the Metastore Database Driver from hive-site.xml. The Metastore Database Driver appears as the following property in hive-site.xml: `<property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> </property>` |
| Metastore Database User Name | The metastore database user name. You can get the value for the Metastore Database User Name from hive-site.xml. The Metastore Database User Name appears as the following property in hive-site.xml: `<property> <name>javax.jdo.option.ConnectionUserName</name> <value>hiveuser</value> </property>` |
| Metastore Database Password | The password for the metastore user name. You can get the value for the Metastore Database Password from hive-site.xml. The Metastore Database Password appears as the following property in hive-site.xml: `<property> <name>javax.jdo.option.ConnectionPassword</name> <value>password</value> </property>` |
| Remote Metastore URI | The metastore URI used to access metadata in a remote metastore setup. For a remote metastore, you must specify the Thrift server details. Use the following connection URI: `thrift://<hostname>:<port>`. For example, enter: `thrift://myhostname:9083/`. You can get the value for the Remote Metastore URI from hive-site.xml. The Remote Metastore URI appears as the following property in hive-site.xml: `<property> <name>hive.metastore.uris</name> <value>thrift://<n.n.n.n>:9083</value> <description>IP address or fully-qualified domain name and port of the metastore host</description> </property>` |
| Engine Type | The engine that the Hadoop environment uses to run a mapping on the Hadoop cluster. Select a value from the drop-down list. For example, select: MRv2. To set the engine type in the Hadoop connection, you must get the value for the mapreduce.framework.name property from mapred-site.xml, located in the following directory on the Hadoop cluster: `/etc/hadoop/conf/`. If the value for mapreduce.framework.name is classic, select mrv1 as the engine type in the Hadoop connection. If the value for mapreduce.framework.name is yarn, you can select mrv2 or tez as the engine type in the Hadoop connection. Do not select Tez if Tez is not configured for the Hadoop cluster. You can also set the value for the engine type in hive-site.xml. The engine type appears as the following property in hive-site.xml: `<property> <name>hive.execution.engine</name> <value>tez</value> <description>Chooses execution engine. Options are: mr (MapReduce, default) or tez (Hadoop 2 only)</description> </property>` |
| Job Monitoring URL | The URL for the MapReduce JobHistory server. You can use the URL for the JobTracker URI if you use MapReduce version 1. Use the following format: `<hostname>:<port>`. For example, enter: `myhostname:8021`. You can get the value for the Job Monitoring URL from mapred-site.xml. The Job Monitoring URL appears as the following property in mapred-site.xml: `<property> <name>mapred.job.tracker</name> <value>myhostname:8021</value> <description>The host and port that the MapReduce job tracker runs at.</description> </property>` |
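
The selection rules for Engine Type and Metastore Execution Mode can be summarized in a small Python sketch. The function names are hypothetical, the inputs are plain dictionaries of property names and values already read from mapred-site.xml and hive-site.xml, and treating a hive.metastore.local value other than true as remote is an assumption rather than something the table states.

```python
from typing import Optional


def engine_type_choices(framework_name: str) -> list:
    """Map a mapreduce.framework.name value to the selectable engine types."""
    choices = {
        "classic": ["mrv1"],
        # Select tez only if Tez is configured for the Hadoop cluster.
        "yarn": ["mrv2", "tez"],
    }
    return choices.get(framework_name.strip().lower(), [])


def metastore_mode(hive_props: dict) -> Optional[str]:
    """Suggest 'local' or 'remote' from hive-site.xml properties, else None."""
    local = hive_props.get("hive.metastore.local")
    if local is not None:
        # Assumption: any value other than "true" means a remote metastore.
        return "local" if local.strip().lower() == "true" else "remote"
    if hive_props.get("hive.metastore.uris"):
        # Valid only if you also know that the Hive server has started.
        return "remote"
    return None


if __name__ == "__main__":
    print(engine_type_choices("yarn"))  # prints ['mrv2', 'tez']
    print(metastore_mode({"hive.metastore.uris": "thrift://myhostname:9083"}))  # prints remote
```

A result of `None` means the properties alone do not decide the mode, so the value has to be confirmed on the cluster.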

| Property | Description |
|---|---|
| Temporary Working Directory on HDFS | The HDFS file path of the directory that the Blaze engine uses to store temporary files. Verify that the directory exists. The YARN user, Blaze engine user, and mapping impersonation user must have write permission on this directory. For example, enter: `/blaze/workdir` |
| Blaze Service User Name | The operating system profile user name for the Blaze engine. |
| Minimum Port | The minimum value for the port number range for the Blaze engine. For example, enter: 12300 |
| Maximum Port | The maximum value for the port number range for the Blaze engine. For example, enter: 12600 |
| Yarn Queue Name | The YARN scheduler queue name used by the Blaze engine that specifies available resources on a cluster. The name is case sensitive. |
| Blaze Service Custom Properties | Custom properties that are unique to the Blaze engine. You can specify multiple properties. Use the following format: `<property1>=<value>`. To enter multiple properties, separate each name-value pair with the following text: `&:`. Use custom properties only at the request of Informatica Global Customer Support. |

| Property | Description |
|---|---|
| Spark HDFS Staging Directory | The HDFS file path of the directory that the Spark engine uses to store temporary files for running jobs. The YARN user, Spark engine user, and mapping impersonation user must have write permission on this directory. |
| Spark Event Log Directory | Optional. The HDFS file path of the directory that the Spark engine uses to log events. The Data Integration Service accesses the Spark event log directory to retrieve final source and target statistics when a mapping completes. These statistics appear on the Summary Statistics tab and the Detailed Statistics tab of the Monitoring tool. If you do not configure the Spark event log directory, the statistics might be incomplete in the Monitoring tool. |
| Spark Execution Parameters | An optional list of configuration parameters to apply to the Spark engine. You can change the default Spark configuration properties values, such as spark.executor.memory or spark.driver.cores. Use the following format: `<property1>=<value>`. For example, you can configure a YARN scheduler queue name that specifies available resources on a cluster: `spark.yarn.queue=TestQ`. To enter multiple properties, separate each name-value pair with the following text: `&:` |
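
A Spark Execution Parameters string can be split back into its name-value pairs to check it before it is entered. The following Python sketch assumes a hypothetical helper named `parse_execution_parameters`; the value `4g` in the example is made up for illustration, while `spark.yarn.queue=TestQ` and the `&:` separator come from the table above.

```python
def parse_execution_parameters(text: str) -> dict:
    """Split a '&:'-separated string of <property>=<value> pairs into a dict."""
    params = {}
    for pair in text.split("&:"):
        pair = pair.strip()
        if not pair:
            continue  # ignore empty segments, such as a trailing separator
        name, sep, value = pair.partition("=")
        if not sep or not name.strip():
            raise ValueError(f"expected <property>=<value>, got {pair!r}")
        params[name.strip()] = value.strip()
    return params


if __name__ == "__main__":
    # The executor memory value is an invented example.
    print(parse_execution_parameters("spark.yarn.queue=TestQ&:spark.executor.memory=4g"))
```

The helper splits each pair on the first `=` only, so a value that itself contains `=` is kept whole.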