Hive Targets

A mapping that is running in the Hadoop environment can write to a Hive target.

A Hive target can be an internal table or an external table. Internal Hive tables are managed by Hive and are also known as managed tables. External Hive tables are managed by an external source such as HDFS, Amazon S3, Azure Blob, WASB, or ADLS.

Consider the following restrictions when you configure a Hive target in a mapping that runs in the Hadoop environment:

- •A mapping fails to run when you use Unicode characters in a Hive target definition.

- •When you set up a dynamic target for a partitioned Hive table, the value used for the partition is the final column in the table. If the table has a dynamic partition column, the final column of the table is the dynamic partition column. To use a different column for the partition, move it to the last column of the table. If the table has multiple partition columns, the dynamic partition values are selected from the last columns of the upstream transformation. You can use an Expression transformation to reorder the columns if necessary.

When a mapping creates or replaces a Hive table, the type of table that the mapping creates depends on the run-time engine that you use to run the mapping.

The following table shows the table type for each run-time engine:

Run-Time Engine | Resulting Table Type |

|---|

Blaze | MANAGED_TABLE |

Spark | EXTERNAL_TABLE |

Hive | MANAGED_TABLE |

You can design a mapping to truncate an internal or external Hive table that is bucketed and partitioned.

In a mapping that runs on the Spark engine or the Blaze engine, you can create a custom DDL query that creates or replaces a Hive table at run time. However, with the Blaze engine, you cannot use a backtick (`) character in the DDL query. The backtick character is required in HiveQL when you include special characters or keywords in a query.

The Spark engine can write to bucketed Hive targets. Bucketing and partitioning of Hive tables can improve performance by reducing data shuffling and sorting.

PreSQL and PostSQL Commands

You can create SQL commands for Hive targets. You can execute the SQL commands to execute SQL statements such as insert, update, and delete on the Hive target.

PreSQL is an SQL command that runs against the Hive target before the mapping reads from the source. PostSQL is an SQL command that runs against the Hive target after the mapping writes to the target.

You can use PreSQL and PostSQL on the Spark engine. The Data Integration Service does not validate PreSQL or PostSQL commands for a Hive target.

Pre-Mapping SQL Commands

PreSQL is an SQL command that runs against a Hive target before the mapping reads from a source.

For example, you might use a Hive target in a mapping. The data stored in the Hive target contains old records from the previous day and you must delete the old records in the Hive target before you run the mapping that writes new records to the target. To delete the old records in the Hive target, you can configure a PreSQL command.

Post-Mapping SQL Commands

PostSQL is an SQL command that runs against a Hive source after the mapping writes to the target.

For example, you might use a Hive target in a mapping that runs in a test environment. After the mapping writes to the target, you might want to delete the records stored in the Hive target so that you can test the same Hive target again in the next mapping run. To delete the records in the Hive target, you can configure a PostSQL command.

Truncating Hive Targets

Truncate Hive target tables to delete the table contents. You can truncate internal and external Hive tables in the Hadoop environment.

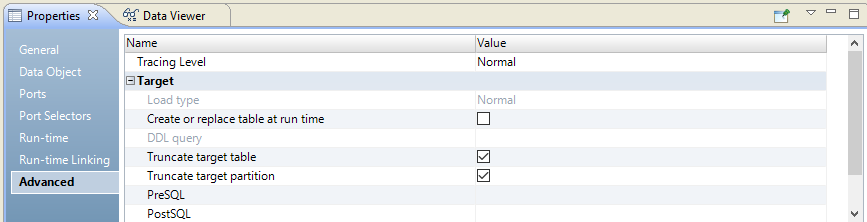

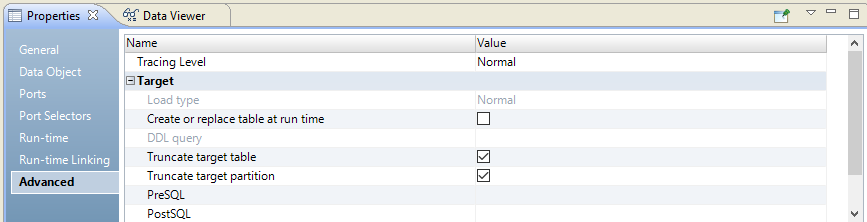

Truncate a Hive table in the Hive table properties. The following image shows the Hive table properties:

To truncate the entire Hive table, choose the option to truncate the target table. To truncate a partition in the Hive table, you must choose to truncate the target table and to truncate the partition in the Hive target table.

Consider the following restrictions when you truncate a Hive table in a mapping that runs in the Hadoop environment:

- •The Data Integration Service can truncate the partition in the Hive target in which the data is being inserted. You must choose to both truncate the partition in the Hive target and truncate the target table.

- •You must truncate the target table to overwrite data to a Hive table with Hive version 0.7. The Data Integration Service ignores write, update override, delete, insert, and update strategy properties when it writes data to a Hive target.

Updating Hive Targets with an Update Strategy Transformation

For mappings that run on the Spark engine, you can use Hive MERGE statements to perform Update Strategy tasks. When a query uses a MERGE statement instead of INSERT, UPDATE or DELETE statements, processing is more efficient.

To use Hive MERGE, select true for the option in the Advanced Properties of the Update Strategy transformation.

The mapping ignores the Hive MERGE option and the Data Integration Service uses INSERT, UPDATE and DELETE to perform the operation under the following scenarios:

- •The mapping runs on Blaze or Hive.

- •In scenarios where MERGE is restricted by Hive implementation on particular Hadoop distributions.

The mapping log contains results of the operation, including whether restrictions affected results.

When the update affects partitioning or bucketing columns, updates to the columns are omitted.

Note: The Developer tool and the Data Integration Service do not validate against this restriction. If the Update Strategy expression violates these restrictions, the mapping might produce unexpected results.

Rules and Guidelines for Hive Targets on the Blaze Engine

You can include Hive targets in an Informatica mapping that runs on the Blaze engine.

Consider the following rules and guidelines when you configure a Hive target in a mapping that runs on the Blaze engine:

- •A mapping that runs on the Blaze engine can have partitioned and bucketed Hive tables as targets. However, if you append data to a bucketed table, the Blaze engine overwrites the data in the bucketed target.

- •Mappings that run on the Blaze engine can read and write to sorted targets.

- •The Blaze engine supports Hive tables that are enabled for locking.

- •The Blaze engine can create or replace Hive target tables.

- •A mapping that runs on the Blaze engine can write to Hive ACID tables. To write to a Hive ACID table, the mapping must contain an Update Strategy transformation connected to the Hive target. The update strategy expression must flag each row for insert.

- •The Blaze engine can write to Hive tables that are compressed. To write to a Hive table in compressed format, you must set the TBLPROPERTIES clause.

- •For a mapping that writes to a Hive target on the Blaze engine, you cannot use update or delete update strategy properties.

RCFile as Hive Tables

The Blaze engine can read and write to RCFile as Hive tables. However, the Blaze engine supports only the ColumnarSerDe SerDe. In Hortonworks, the default SerDe for an RCFile is LazyBinaryColumnarSerDe. To read and write to an RCFile table, you must create the table by specifying the SerDe as org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe.

For example:

CREATE TABLE TEST_RCFIle

(id int, name string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe' STORED AS RCFILE;

You can also set the default RCFile SerDe from the Ambari or Cloudera manager. Set the property hive.default.rcfile.serde to org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe.

Compressed Hive Tables

The Blaze engine can read and write to Hive tables that are compressed. However, to read from a compressed Hive table or write to a Hive table in compressed format, you must set the TBLPROPERTIES clause as follows:

- • When you create the table, set the table properties:

TBLPROPERTIES ('property_name'='property_value')

- • If the table already exists, alter the table to set the table properties:

ALTER TABLE table_name SET TBLPROPERTIES ('property_name' = 'property_value');

The property name and value are not case sensitive. Depending on the file format, the table property can take different values.

The following table lists the property names and values for different file formats:

File Format | Table Property Name | Table Property Values |

|---|

Avro | avro.compression | BZIP2, deflate, Snappy |

ORC | orc.compress | Snappy, ZLIB |

Parquet | parquet.compression | GZIP, Snappy |

RCFile | rcfile.compression | Snappy, ZLIB |

Sequence | sequencefile.compression | BZIP2, GZIP, LZ4, Snappy |

Text | text.compression | BZIP2, GZIP, LZ4, Snappy |

Note: Unlike the Hive engine, the Blaze engine does not write data in the default ZLIB compressed format when it writes to a Hive target stored as ORC format. To write in a compressed format, alter the table to set the TBLPROPERTIES clause to use ZLIB or Snappy compression for the ORC file format.

The following text shows sample commands to create table and alter table:

- •Create table:

create table CBO_3T_JOINS_CUSTOMER_HIVE_SEQ_GZIP

(C_CUSTKEY DECIMAL(38,0), C_NAME STRING,C_ADDRESS STRING,

C_PHONE STRING,C_ACCTBAL DECIMAL(10,2),

C_MKTSEGMENT VARCHAR(10),C_COMMENT vARCHAR(117))

partitioned by (C_NATIONKEY DECIMAL(38,0))

TBLPROPERTIES ('sequencefile.compression'='gzip')

stored as SEQUENCEFILE;

- •Alter table:

ALTER TABLE table_name

SET TBLPROPERTIES (avro.compression'='BZIP2');