Mapping Run-time Properties

The mapping run-time properties depend on the environment that you select for the mapping.

The mapping properties contain a Validation Environments area and an Execution Environment area. The properties in the Validation Environments area indicate whether the Developer tool validates the mapping definition for the native execution environment, the Hadoop execution environment, or both. When you run a mapping in the native environment, the Data Integration Service processes the mapping.

When you run a mapping in the Hadoop environment, the Data Integration Service pushes the mapping execution to the Hadoop cluster through a Hadoop connection. The Hadoop cluster processes the mapping.

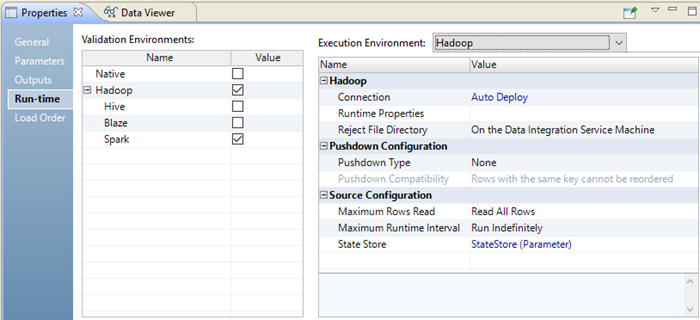

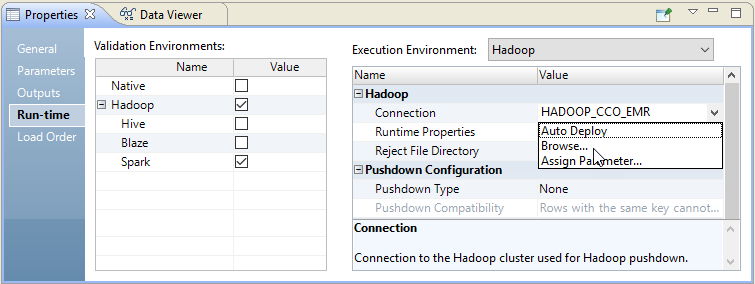

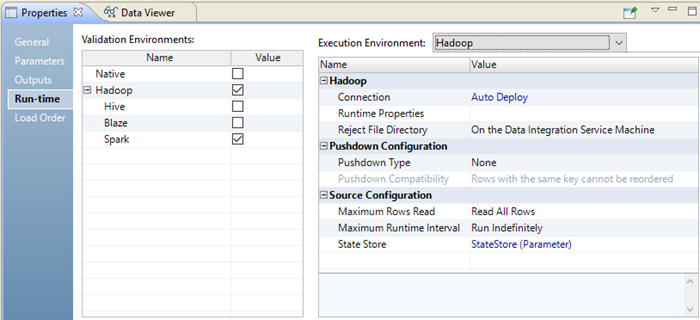

The following image shows the mapping Run-time properties in a Hadoop environment:

Validation Environments

The properties in the Validation Environments indicate whether the Developer tool validates the mapping definition for the native execution environment or the Hadoop execution environment.

You can configure the following properties for the Validation Environments:

- Native

  Default environment. The Data Integration Service runs the mapping in a native environment.

- Hadoop

  Run the mapping in the Hadoop environment. The Data Integration Service pushes the transformation logic to the Hadoop cluster through a Hadoop connection. The Hadoop cluster processes the data. Select the engine to process the mapping. You can select the Blaze, Spark, or Hive engines.

You can use a mapping parameter to indicate the execution environment. When you select the execution environment, click Assign Parameter. Configure a string parameter. Set the default value to Native or Hadoop.

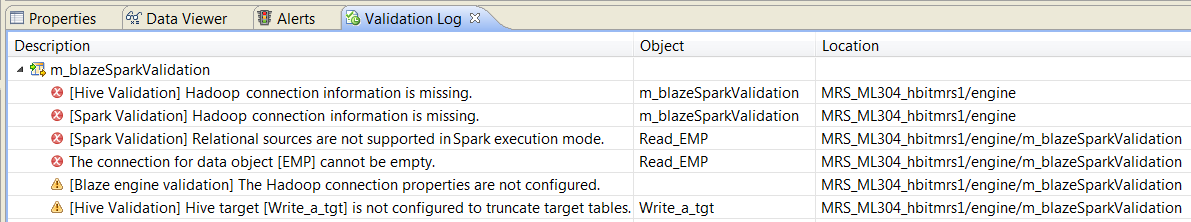

When you validate the mapping, validation occurs for each engine that you choose in the Validation Environments. The validation log might contain validation errors specific to each engine. If the mapping is valid for at least one engine, the mapping is valid. The errors for the other engines appear in the validation log as warnings. If the mapping is valid for multiple Hadoop engines, you can view the execution plan to determine which engine will run the job. You can view the execution plan in the Data Viewer view.
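The validation rule described above can be sketched in a few lines of Python. This is only an illustration of the rule, not the Developer tool's implementation. The function name `summarize_validation` and the input shape (a dictionary that maps an engine name to its list of error messages) are assumptions made for the example.

```python
def summarize_validation(errors_by_engine):
    """Apply the rule: a mapping is valid if at least one engine reports no errors.

    errors_by_engine is a hypothetical structure: {engine name: [error messages]}.
    Returns (is_valid, engines_without_errors, warnings).
    """
    # Engines for which validation produced no errors.
    valid_engines = [engine for engine, errors in errors_by_engine.items() if not errors]
    is_valid = bool(valid_engines)

    warnings = []
    if is_valid:
        # Errors from the remaining engines are reported as warnings.
        for engine, errors in errors_by_engine.items():
            warnings.extend(f"{engine}: {error}" for error in errors)
    return is_valid, valid_engines, warnings


if __name__ == "__main__":
    result = summarize_validation({
        "Blaze": [],
        "Spark": ["unsupported transformation"],  # made-up message
        "Hive": [],
    })
    print(result)
```

When more than one engine appears in the list of engines without errors, the execution plan is what shows which engine runs the job.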

The following image shows validation errors for the Blaze, Spark, and Hive engines:

Execution Environment

Configure Hadoop properties, Pushdown Configuration properties, and Source Configuration properties in the Execution Environment area.

Configure the following properties in a Hadoop Execution Environment:

| Name | Value |
|---|---|
| Connection | Defines the connection information that the Data Integration Service requires to push the mapping execution to the Hadoop cluster. Select the Hadoop connection to run the mapping in the Hadoop cluster. You can assign a user-defined parameter for the Hadoop connection. |
| Runtime Properties | You can configure run-time properties for the Hadoop environment in the Data Integration Service, the Hadoop connection, and in the mapping. You can override a property configured at a high level by setting the value at a lower level. For example, if you configure a property in the Data Integration Service custom properties, you can override it in the Hadoop connection or in the mapping. The Data Integration Service processes property overrides based on the following priorities:<br>1. Mapping custom properties set using infacmd ms runMapping with the -cp option<br>2. Mapping run-time properties for the Hadoop environment<br>3. Hadoop connection advanced properties for run-time engines<br>4. Hadoop connection advanced general properties, environment variables, and classpaths<br>5. Data Integration Service custom properties |
| Reject File Directory | The directory for Hadoop mapping files on HDFS when you run mappings in the Hadoop environment. The Blaze engine can write reject files to the Hadoop environment for flat file, HDFS, and Hive targets. The Spark and Hive engines can write reject files to the Hadoop environment for flat file and HDFS targets. Choose one of the following options:<br>- On the Data Integration Service machine. The Data Integration Service stores the reject files based on the RejectDir system parameter.<br>- On the Hadoop Cluster. The reject files are moved to the reject directory configured in the Hadoop connection. If the directory is not configured, the mapping will fail.<br>- Defer to the Hadoop Connection. The reject files are moved based on whether the reject directory is enabled in the Hadoop connection properties. If the reject directory is enabled, the reject files are moved to the reject directory configured in the Hadoop connection. Otherwise, the Data Integration Service stores the reject files based on the RejectDir system parameter. |
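The override priorities for run-time properties can be pictured as a lookup that checks each configuration level in order and returns the first value it finds. The following Python sketch illustrates that ordering only. The level keys such as `runmapping_cp` and the function `resolve_property` are hypothetical names, and the property used in the usage example is just a sample.

```python
# Configuration levels in priority order, highest priority first.
# The keys are hypothetical labels for the five levels listed in the table.
PRIORITY = [
    "runmapping_cp",       # 1. infacmd ms runMapping with the -cp option
    "mapping_runtime",     # 2. mapping run-time properties for the Hadoop environment
    "connection_engine",   # 3. Hadoop connection advanced properties for run-time engines
    "connection_general",  # 4. Hadoop connection advanced general properties
    "dis_custom",          # 5. Data Integration Service custom properties
]


def resolve_property(name, levels):
    """Return (value, level) from the highest-priority level that sets the property.

    levels is a hypothetical structure: {level key: {property name: value}}.
    Returns (None, None) when no level sets the property.
    """
    for level in PRIORITY:
        values = levels.get(level, {})
        if name in values:
            return values[name], level
    return None, None


if __name__ == "__main__":
    levels = {
        "dis_custom": {"spark.executor.memory": "2g"},
        "mapping_runtime": {"spark.executor.memory": "4g"},
    }
    # The mapping-level value wins over the service-level value.
    print(resolve_property("spark.executor.memory", levels))
```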

You can configure the following pushdown configuration properties:

| Name | Value |
|---|---|
| Pushdown type | Choose one of the following options:<br>- None. Select no pushdown type for the mapping.<br>- Source. The Data Integration Service tries to push down transformation logic to the source database.<br>- Full. The Data Integration Service pushes the full transformation logic to the source database. |
| Pushdown Compatibility | Optionally, if you choose full pushdown optimization and the mapping contains an Update Strategy transformation, you can choose a pushdown compatibility option or assign a pushdown compatibility parameter. Choose one of the following options:<br>- Multiple rows do not have the same key. The transformation connected to the Update Strategy transformation receives multiple rows without the same key. The Data Integration Service can push the transformation logic to the target.<br>- Multiple rows with the same key can be reordered. The target transformation connected to the Update Strategy transformation receives multiple rows with the same key that can be reordered. The Data Integration Service can push the Update Strategy transformation to the Hadoop environment.<br>- Multiple rows with the same key cannot be reordered. The target transformation connected to the Update Strategy transformation receives multiple rows with the same key that cannot be reordered. The Data Integration Service cannot push the Update Strategy transformation to the Hadoop environment. |

You can configure the following source properties:

| Name | Value |
|---|---|
| Maximum Rows Read | Reserved for future use. |
| Maximum Runtime Interval | Reserved for future use. |
| State Store | Reserved for future use. |

Parsing JSON Records on the Spark Engine

In the mapping run-time properties, you can configure how the Spark engine parses corrupt records and multiline records when it reads from JSON sources in a mapping.

Configure the following Hadoop run-time properties:

- infaspark.json.parser.mode

  Specifies how the parser handles corrupt JSON records. You can set the value to one of the following modes:

  - DROPMALFORMED. The parser ignores all corrupted records. Default mode.
  - PERMISSIVE. The parser accepts non-standard fields as nulls in corrupted records.
  - FAILFAST. The parser throws an exception when it encounters a corrupted record and the Spark application goes down.

- infaspark.json.parser.multiLine

  Specifies whether the parser can read a multiline record in a JSON file. You can set the value to true or false. Default is false.

  Applies only to Hadoop distributions that use Spark version 2.2.x.
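To make the three parser modes concrete, the following Python sketch emulates their effect on a list of single-line JSON records using only the standard library. It is a simplified illustration and not the Spark engine's parser. The function `parse_json_lines` and its `fields` parameter are hypothetical, and PERMISSIVE is approximated by emitting a record whose expected fields are all null.

```python
import json

MODES = ("DROPMALFORMED", "PERMISSIVE", "FAILFAST")


def parse_json_lines(lines, mode="DROPMALFORMED", fields=()):
    """Emulate the effect of the parser modes on one-record-per-line JSON input.

    fields is a hypothetical list of expected field names, used only to build
    the all-null placeholder record in PERMISSIVE mode.
    """
    if mode not in MODES:
        raise ValueError(f"unknown parser mode: {mode}")

    records = []
    for line in lines:
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            if mode == "DROPMALFORMED":
                continue  # ignore the corrupt record
            if mode == "PERMISSIVE":
                # Keep a placeholder record with null fields (simplification).
                records.append({name: None for name in fields})
            else:
                raise  # FAILFAST: stop at the first corrupt record
    return records


if __name__ == "__main__":
    sample = ['{"id": 1}', '{"id": ', '{"id": 3}']  # second record is corrupt
    print(parse_json_lines(sample, "DROPMALFORMED"))
    print(parse_json_lines(sample, "PERMISSIVE", fields=("id",)))
```

In the mapping itself, the mode is not passed as a function argument. It is set as a Hadoop run-time property, for example `infaspark.json.parser.mode` with the value `FAILFAST`.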

Reject File Directory

You can write reject files to the Data Integration Service machine or to the Hadoop cluster. Or, you can defer to the Hadoop connection configuration. The Blaze engine can write reject files to the Hadoop environment for flat file, HDFS, and Hive targets. The Spark and Hive engines can write reject files to the Hadoop environment for flat file and HDFS targets.

If you configure the mapping run-time properties to defer to the Hadoop connection, the reject files for all mappings with this configuration are moved based on whether you choose to write reject files to Hadoop for the active Hadoop connection. You do not need to change the mapping run-time properties manually to change the reject file directory.

For example, if the reject files are currently moved to the Data Integration Service machine and you want to move them to the directory configured in the Hadoop connection, edit the Hadoop connection properties to write reject files to Hadoop. The reject files of all mappings that are configured to defer to the Hadoop connection are moved to the configured directory.

You might also want to choose to defer to the Hadoop connection when the connection is parameterized to alternate between multiple Hadoop connections. For example, the parameter might alternate between one Hadoop connection that is configured to move reject files to the Data Integration Service machine and another Hadoop connection that is configured to move reject files to the directory configured in the Hadoop connection. If you choose to defer to the Hadoop connection, the reject files are moved depending on the active Hadoop connection in the connection parameter.
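The three reject file options reduce to a small decision procedure. The following Python sketch shows one way to express it, based only on the behavior described in this section. The option labels (`DIS`, `HADOOP_CLUSTER`, `DEFER`), the function name, and the example paths are hypothetical.

```python
def resolve_reject_location(mapping_option, connection_reject_enabled,
                            connection_reject_dir, dis_reject_dir):
    """Return the directory that receives reject files for a mapping run.

    mapping_option: hypothetical label for the mapping run-time setting,
        one of "DIS", "HADOOP_CLUSTER", or "DEFER".
    connection_reject_enabled: whether the active Hadoop connection is set to
        write reject files to Hadoop.
    connection_reject_dir: reject directory configured in the Hadoop connection.
    dis_reject_dir: directory given by the RejectDir system parameter.
    """
    if mapping_option == "DIS":
        return dis_reject_dir
    if mapping_option == "HADOOP_CLUSTER":
        if not connection_reject_dir:
            # The source states that the mapping fails in this case.
            raise ValueError("no reject directory configured in the Hadoop connection")
        return connection_reject_dir
    if mapping_option == "DEFER":
        # The active Hadoop connection decides where the files go.
        return connection_reject_dir if connection_reject_enabled else dis_reject_dir
    raise ValueError(f"unknown reject file option: {mapping_option}")


if __name__ == "__main__":
    # Example paths are made up for illustration.
    print(resolve_reject_location("DEFER", True, "/user/infa/reject", "/opt/infa/reject"))
    print(resolve_reject_location("DEFER", False, "/user/infa/reject", "/opt/infa/reject"))
```

With the deferred option, switching the active Hadoop connection changes the result without any change to the mapping, which is the behavior the parameterized connection example relies on.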

Changing the Hadoop Cluster for a Mapping Run

You can change the Hadoop cluster that a mapping runs on.

You might want to change the Hadoop cluster to run the mapping on a different Hadoop cluster type or different version.

To change the Hadoop cluster that a mapping runs on, click the connection where it appears in the mapping Run-time properties. Then click Browse, and select a Hadoop connection that uses a cluster configuration for the cluster you want the mapping to run on.

The following image shows the Browse choice for a Hadoop connection:

After you change the connection that the mapping uses, you must restart the Data Integration Service for the change to take effect.

Updating Run-time Properties for Multiple Mappings

You can enable or disable the validation environment or set the execution environment for multiple mappings. You can update multiple mappings that you run from the Developer tool or mappings that are deployed to a Data Integration Service. Use the Command Line Interface to perform these updates.

The following table describes the commands to update mapping run-time properties:

| Command | Description |
|---|---|
| dis disableMappingValidationEnvironment | Disables the mapping validation environment for mappings that are deployed to the Data Integration Service. |
| mrs disableMappingValidationEnvironment | Disables the mapping validation environment for mappings that you run from the Developer tool. |
| dis enableMappingValidationEnvironment | Enables a mapping validation environment for mappings that are deployed to the Data Integration Service. |
| mrs enableMappingValidationEnvironment | Enables a mapping validation environment for mappings that you run from the Developer tool. |
| dis setMappingExecutionEnvironment | Specifies the mapping execution environment for mappings that are deployed to the Data Integration Service. |
| mrs setMappingExecutionEnvironment | Specifies the mapping execution environment for mappings that you run from the Developer tool. |
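The table pairs each action with a dis variant for deployed mappings and an mrs variant for mappings that you run from the Developer tool. The following Python sketch shows how a script might choose between them when assembling a command line. It assumes the commands are invoked through the `infacmd.sh` script. The helper name, the action labels, and the `extra_options` parameter are hypothetical, and the sketch deliberately does not list command options: take those from the command reference.

```python
# Hypothetical action labels mapped to the command names from the table.
ACTIONS = {
    "disable_validation": "disableMappingValidationEnvironment",
    "enable_validation": "enableMappingValidationEnvironment",
    "set_execution": "setMappingExecutionEnvironment",
}


def build_update_command(action, deployed, extra_options=()):
    """Build an argument list for one of the update commands.

    deployed=True selects the dis plugin (mappings deployed to the Data
    Integration Service); deployed=False selects mrs (mappings that you run
    from the Developer tool). extra_options is passed through unchanged.
    """
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    plugin = "dis" if deployed else "mrs"
    return ["infacmd.sh", plugin, ACTIONS[action], *extra_options]


if __name__ == "__main__":
    # Print the command instead of running it; options are left to the caller.
    print(" ".join(build_update_command("set_execution", deployed=True)))
```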