Data Integration Hub Publications and Subscriptions

Publications and subscriptions are entities that define how applications publish data to Data Integration Hub and how applications consume data from Data Integration Hub. Publications publish data to a defined topic, and subscriptions subscribe to topics.

Publications and subscriptions control the data flow and the schedule of data publication or data consumption. An application can be both a publisher and a subscriber. Multiple applications can publish to the same topic. Multiple applications can consume data from the same topic.

You can use automatic, custom, and modular publications and subscriptions to publish data and to consume data. You can publish from and subscribe to different sources of data. Because the publishing process and the consuming process are completely decoupled, the publishing source and the consuming target do not have to be of the same data type. For example, you can publish data from a file and consume it into a database.
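
To illustrate the decoupling, the following minimal Python sketch publishes rows from a CSV file to a topic and consumes them into a SQLite table. The in-memory `repository`, the `orders` topic, and the `publish_from_csv` and `consume_into_sqlite` functions are hypothetical stand-ins, not Data Integration Hub APIs. The point is only that the subscriber reads topic records without knowing the publisher's source format.

```python
import csv
import io
import sqlite3
from collections import defaultdict

# Hypothetical in-memory stand-in for the publication repository:
# each topic name maps to a list of published records (dicts).
repository: dict[str, list[dict]] = defaultdict(list)


def publish_from_csv(topic: str, csv_text: str) -> int:
    """Publisher side: read records from a CSV (file) source and write them to a topic."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    repository[topic].extend(rows)
    return len(rows)


def consume_into_sqlite(topic: str, conn: sqlite3.Connection) -> int:
    """Subscriber side: read the topic and load it into a relational table.

    The subscriber never sees the CSV source; it only sees topic records.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id TEXT, amount REAL)")
    records = repository[topic]
    conn.executemany(
        "INSERT INTO orders (order_id, amount) VALUES (?, ?)",
        [(r["order_id"], float(r["amount"])) for r in records],
    )
    conn.commit()
    return len(records)


if __name__ == "__main__":
    published = publish_from_csv("orders", "order_id,amount\nA1,10.5\nA2,7\n")
    db = sqlite3.connect(":memory:")
    consumed = consume_into_sqlite("orders", db)
    print(published, consumed, db.execute("SELECT SUM(amount) FROM orders").fetchone()[0])
```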

Automatic publications and subscriptions can publish from and subscribe to a relational database, a file, or a cloud application. They can also publish and subscribe over a REST API.

Custom publications and subscriptions can publish from and subscribe to on-premises applications.

Modular publications and subscriptions can publish from and subscribe to cloud applications.

Publication Process

The publication process includes retrieving the data from the publisher, running any associated mappers, such as a mapping or a task, and writing the data to the relevant topic in the Data Integration Hub publication repository. After the publication process ends, subscribers can consume the published data from the publication repository.
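
The three steps of the publication process can be modeled as a small pipeline. In this hypothetical Python sketch, `extract` stands in for the publisher, each entry in `mappers` stands in for an associated mapping or task, and a plain list stands in for the topic. None of these names come from Data Integration Hub.

```python
from typing import Callable, Iterable

Record = dict
Mapper = Callable[[Record], Record]


def run_publication(extract: Callable[[], Iterable[Record]],
                    mappers: list[Mapper],
                    topic: list[Record]) -> int:
    """Hypothetical sketch: retrieve, run mappers in order, write to the topic."""
    count = 0
    for record in extract():          # 1. retrieve the data from the publisher
        for mapper in mappers:        # 2. run each associated mapper in order
            record = mapper(record)
        topic.append(record)          # 3. write the record to the topic
        count += 1
    return count


# Usage: one mapper that converts the amount field from text to a number.
orders_topic: list[Record] = []
published = run_publication(
    lambda: [{"id": 1, "amount": "10.50"}, {"id": 2, "amount": "3"}],
    [lambda r: {**r, "amount": float(r["amount"])}],
    orders_topic,
)
```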

The publication process depends on the publication type.

- Automatic publications can run a Data Integration Hub workflow that is based on a PowerCenter batch workflow or run over a REST API.

- Custom publications can either run a Data Integration Hub workflow that is based on a PowerCenter batch workflow, PowerCenter real-time workflow, Data Engineering Integration mapping or workflow, Data Engineering Streaming mapping, or Data Quality mapping or workflow, or run an Informatica Intelligent Cloud Services task.

- Modular publications run an Informatica Intelligent Cloud Services mapping.

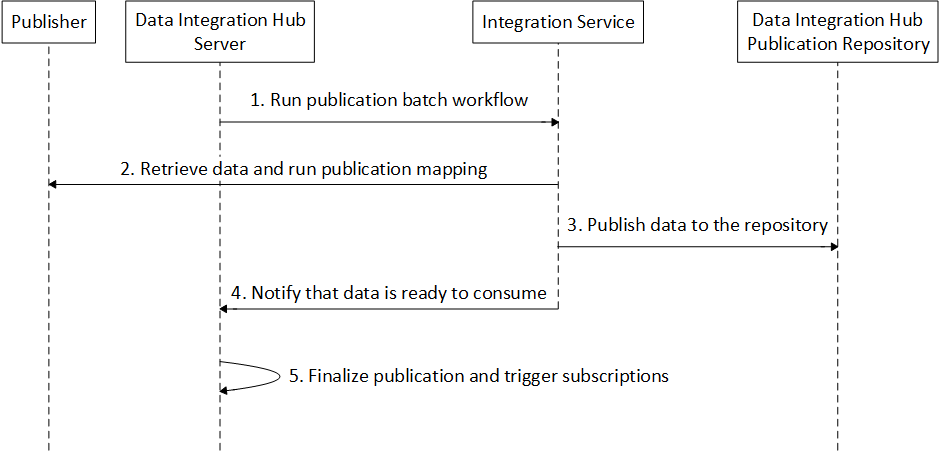

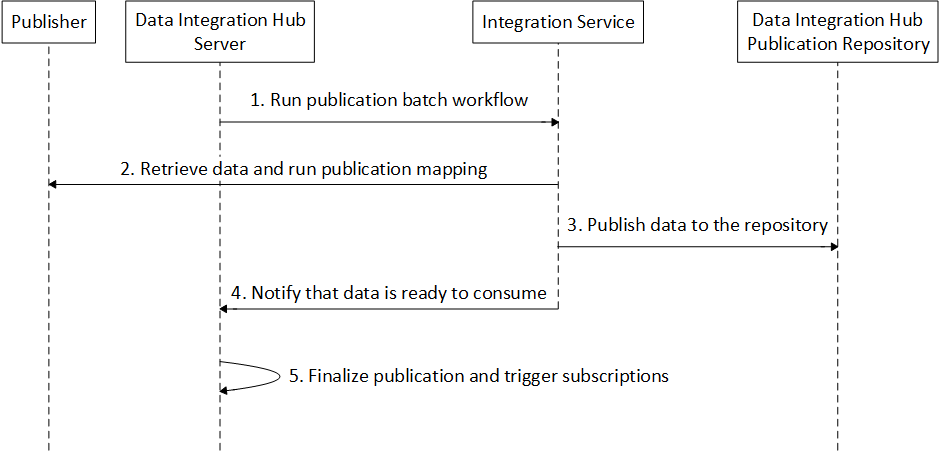

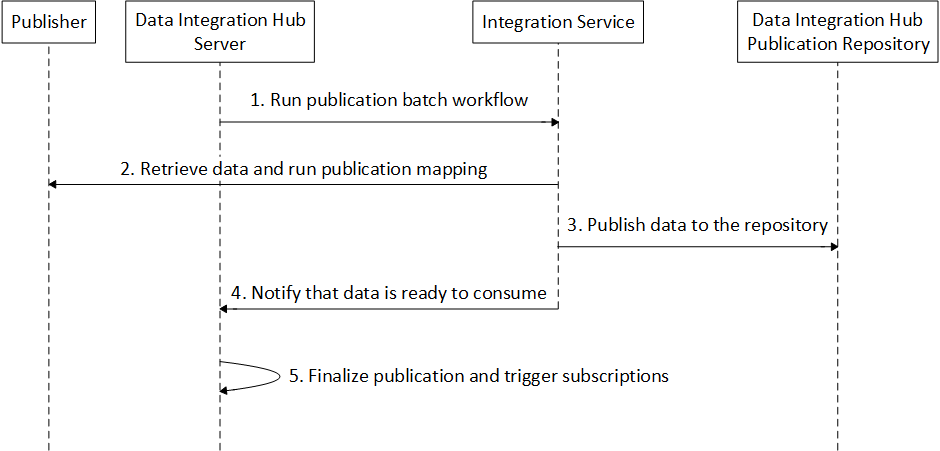

Publication Process with a Batch Workflow

The publication process for publications that run a Data Integration Hub batch workflow includes the following stages:

1. When the publisher is ready to publish the data, the Data Integration Hub server runs the publication batch workflow and sends a request to the relevant Integration Service, either the PowerCenter Integration Service or the Data Integration Service.

2. The Integration Service extracts the data from the publisher and runs the automatic or custom mapping on the data.

3. The Integration Service writes the data to the Data Integration Hub publication repository.

4. The Integration Service notifies the Data Integration Hub server that the published data is ready for subscribers.

5. The Data Integration Hub server changes the status of the publication event to complete and triggers subscription processing.
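
As a rough model of the five batch stages, the following Python sketch tracks a publication event from the initial request to completion. The `EventStatus` values, the `PublicationEvent` class, and the callables passed to `run_batch_publication` are hypothetical, and the Integration Service work is reduced to a local function call.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class EventStatus(Enum):
    # Hypothetical status names for illustration only.
    PROCESSING = "Processing"
    COMPLETE = "Complete"


@dataclass
class PublicationEvent:
    topic: str
    status: EventStatus = EventStatus.PROCESSING
    log: list[str] = field(default_factory=list)


def integration_service_run(event: PublicationEvent,
                            extract: Callable[[], list[dict]],
                            mapping: Callable[[dict], dict],
                            repository: dict[str, list[dict]]) -> None:
    """Stages 2-3: extract from the publisher, run the mapping, write to the topic."""
    rows = [mapping(r) for r in extract()]
    repository.setdefault(event.topic, []).extend(rows)
    event.log.append(f"wrote {len(rows)} rows to {event.topic}")


def run_batch_publication(topic: str,
                          extract: Callable[[], list[dict]],
                          mapping: Callable[[dict], dict],
                          repository: dict[str, list[dict]],
                          trigger_subscriptions: Callable[[str], None]) -> PublicationEvent:
    event = PublicationEvent(topic)
    event.log.append("server: request sent to Integration Service")   # stage 1
    integration_service_run(event, extract, mapping, repository)      # stages 2-3
    event.log.append("service: notified server that data is ready")  # stage 4
    event.status = EventStatus.COMPLETE                               # stage 5: complete...
    trigger_subscriptions(topic)                                      # ...then trigger subscriptions
    return event
```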

The following image shows the main stages of the publication process for publications that run a batch workflow:

Publication Process with a Real-time Workflow

The publication process for publications that run a Data Integration Hub real-time workflow includes the following stages:

1. The developer runs the real-time workflow. The workflow writes the data to the relevant tables in the Data Integration Hub publication repository.

2. The Data Integration Hub server triggers a scheduled process and checks for new data in the relevant tables in the Data Integration Hub publication repository.

3. If new data is found, Data Integration Hub updates the publication ID and the publication date of the data to indicate that the data is ready for consumption and creates a publication event in the Data Integration Hub repository.

4. The Data Integration Hub server changes the status of the publication event to complete and triggers subscription processing.
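
The scheduled check in stages 2 through 4 can be sketched as a polling function. This is a hypothetical Python model: it assumes that rows written by the real-time workflow carry an empty publication ID until Data Integration Hub marks them, which simplifies how the repository tables actually track new data.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import count
from typing import Optional


@dataclass
class Row:
    payload: str
    publication_id: Optional[int] = None        # hypothetical: None means "not yet published"
    publication_date: Optional[datetime] = None


_next_publication_id = count(1)


def poll_once(table: list[Row], events: list[dict], now: datetime) -> Optional[int]:
    """One run of the scheduled check (stages 2-4). Returns the new publication ID, if any."""
    new_rows = [r for r in table if r.publication_id is None]   # stage 2: look for new data
    if not new_rows:
        return None                                             # nothing new: no event
    pub_id = next(_next_publication_id)
    for r in new_rows:                                          # stage 3: mark rows as ready
        r.publication_id = pub_id
        r.publication_date = now
    event = {"publication_id": pub_id, "rows": len(new_rows), "status": "Processing"}
    events.append(event)                                        # stage 3: create the event
    event["status"] = "Complete"                                # stage 4: mark it complete
    return pub_id
```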

Publication Process with a Data Integration Task

The publication process for publications that run a Data Integration task includes the following stages:

1. When the publication is triggered, either according to a schedule or by an external API, the Data Integration Hub server triggers the Data Integration task that is defined for the publication through an Informatica Intelligent Cloud Services REST API.

2. The publication process uses the Data Integration Hub cloud connector to write the data to Data Integration Hub.

3. The Data Integration Hub server changes the status of the publication event to complete and triggers subscription processing.
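
For illustration only, the following Python sketch builds the kind of REST request that starts a Data Integration task, assuming the Informatica Intelligent Cloud Services REST API version 2 `job` resource and a session ID obtained from a prior login call. Data Integration Hub issues its own call internally, and this section does not document its exact form, so treat the URL path, the headers, and the `taskType` value as assumptions to verify against the IICS REST API reference.

```python
import json
import urllib.request


def build_start_job_request(server_url: str, session_id: str,
                            task_id: str, task_type: str = "MTT") -> urllib.request.Request:
    """Build (but do not send) a request that starts a Data Integration task.

    Assumes the IICS REST API v2 "job" resource. server_url and session_id
    would come from a prior v2 login call; here they are placeholders.
    """
    body = json.dumps({"@type": "job", "taskId": task_id, "taskType": task_type}).encode("utf-8")
    return urllib.request.Request(
        url=f"{server_url.rstrip('/')}/api/v2/job",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "icSessionId": session_id,   # assumed session header name
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` would start the task; the sketch stops at building it so that it runs without network access.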

Publication Process of a Data-driven Publication

The publication process for data-driven publications includes the following stages:

1. After you create a data-driven publication, you create a POST request to run the publication.

2. When you post the request, Data Integration Hub transfers the published data from the request directly to the Data Integration Hub publication repository, to the topic that you define in the publication.

3. Data Integration Hub creates a Data-driven Publication event, based on the event grouping that is defined for the publication:

   - If the grouping time is set to zero, that is, no grouping is defined for the publication, Data Integration Hub creates an event each time data is published to the publication repository.

   - If you define a grouping time, Data Integration Hub creates an event at the end of each grouping period that contains publications. For example, if you configure the publication to group publications every 10 seconds, Data Integration Hub creates an event every 10 seconds, provided that data was published to the publication repository during that 10-second period.
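
The event grouping rule can be expressed as a small function. This Python sketch assumes fixed, back-to-back grouping windows that start at time zero. The section does not say how Data Integration Hub aligns its grouping periods, so the window boundaries here are an assumption.

```python
from collections import defaultdict


def group_events(publish_times: list[float], grouping_seconds: float) -> list[tuple[float, int]]:
    """Return (event_time, publications_in_event) pairs.

    grouping_seconds == 0: one event per publication, at the publication time.
    grouping_seconds > 0: one event at the end of each window that received at
    least one publication; windows are assumed to be [k*g, (k+1)*g).
    """
    if grouping_seconds < 0:
        raise ValueError("grouping time cannot be negative")
    if grouping_seconds == 0:
        return [(t, 1) for t in sorted(publish_times)]
    counts: dict[int, int] = defaultdict(int)
    for t in publish_times:
        counts[int(t // grouping_seconds)] += 1       # index of the window containing t
    # Windows with no publications never appear in counts, so they produce no event.
    return [((k + 1) * grouping_seconds, n) for k, n in sorted(counts.items())]
```

With publications at seconds 1, 4, 9, and 25 and a 10-second grouping time, the function returns two events: one at second 10 covering three publications, and one at second 30 covering one publication. The empty period from 10 to 20 seconds produces no event.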

Subscription Process

The subscription process includes retrieving the required data from the Data Integration Hub publication repository, running any associated mappers, such as a mapping or a task, and writing the data to one or more subscriber targets. Data Integration Hub keeps the data in the publication repository until the retention period of the topic expires.
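
The retention rule can be sketched as a simple time comparison. In this hypothetical Python example, a publication is kept while the current time is before its publication time plus the topic's retention period. Whether data published exactly at the boundary is still retained is an assumption of the sketch.

```python
from datetime import datetime, timedelta


def is_retained(published_at: datetime, retention: timedelta, now: datetime) -> bool:
    """True while published data is still inside the topic's retention period."""
    return now < published_at + retention


def purge_expired(publications: dict[int, datetime], retention: timedelta,
                  now: datetime) -> list[int]:
    """Hypothetical cleanup job: drop expired publications and return their IDs."""
    expired = [pid for pid, ts in publications.items()
               if not is_retained(ts, retention, now)]
    for pid in expired:
        del publications[pid]
    return expired
```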

- Automatic subscriptions can run a Data Integration Hub workflow that is based on a PowerCenter batch workflow or run over a REST API.

- Custom subscriptions can either run a Data Integration Hub workflow that is based on a PowerCenter batch workflow, Data Engineering Integration mapping or workflow, Data Engineering Streaming mapping, or Data Quality mapping or workflow, or run an Informatica Intelligent Cloud Services task.

- Modular subscriptions run an Informatica Intelligent Cloud Services mapping.

Subscription Process with a Batch Workflow

The subscription process for subscriptions that run a Data Integration Hub batch workflow includes the following stages:

1. When the publication is ready for subscribers, the Data Integration Hub server runs the subscription batch workflow and sends a request to the relevant Integration Service, either the PowerCenter Integration Service or the Data Integration Service.

2. The Integration Service extracts the data from the Data Integration Hub publication repository and runs the automatic or custom mapping on the data.

3. The Integration Service sends the required data to the subscriber.

4. The Integration Service notifies the Data Integration Hub server after the subscriber consumes the published data that it requires.

5. The Data Integration Hub server changes the status of the subscription event to complete.
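
The per-subscription stages can be modeled as follows. In this hypothetical Python sketch, `wants` stands for whatever selects the data that a subscriber requires, `mapping` stands for the automatic or custom mapping, and the `delivered` list stands in for the subscriber target. The sketch separates selection from transformation only for readability.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Subscription:
    name: str
    wants: Callable[[dict], bool]      # selects the published rows this subscriber requires
    mapping: Callable[[dict], dict]    # stand-in for the automatic or custom mapping
    delivered: list[dict] = field(default_factory=list)  # stand-in for the subscriber target
    status: str = "Processing"


def run_batch_subscription(sub: Subscription, topic_rows: list[dict]) -> int:
    """Stages 2-5 for one subscription, against rows already in the publication repository."""
    required = [sub.mapping(r) for r in topic_rows if sub.wants(r)]  # stage 2
    sub.delivered.extend(required)                                    # stage 3
    # Stage 4: in this sketch, "notifying the server" is simply returning after delivery.
    sub.status = "Complete"                                           # stage 5
    return len(required)
```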

The following image shows the main stages of the subscription process for each subscription:

Subscription Process with a Data Integration Task

The subscription process for subscriptions that run a Data Integration task includes the following stages:

1. When the publication is ready for subscribers, the Data Integration Hub server triggers the Data Integration task that is defined for the subscription through an Informatica Intelligent Cloud Services REST API.

2. The subscription process uses the Data Integration Hub cloud connector to read data from Data Integration Hub.

3. The Data Integration task reads the data from Data Integration Hub and then writes the data to the cloud application.

4. The Data Integration Hub server changes the status of the subscription event to complete.
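
Because multiple applications can consume data from the same topic, one completed publication can trigger several subscription tasks. The following hypothetical Python sketch fans out over a topic-to-task registry. Here `run_task` stands in for starting the Data Integration task and waiting for it to finish, and the `Error` status for a failed task is an assumption, since this section only describes the complete status.

```python
from typing import Callable


def on_publication_complete(topic: str,
                            registry: dict[str, list[str]],
                            run_task: Callable[[str], bool]) -> dict[str, str]:
    """Trigger the task of every subscription on the topic and record each outcome.

    registry maps a topic name to the task IDs of its subscriptions (hypothetical).
    """
    statuses: dict[str, str] = {}
    for task_id in registry.get(topic, []):
        ok = run_task(task_id)      # stages 1-3: task reads from the hub, writes to the app
        statuses[task_id] = "Complete" if ok else "Error"   # stage 4 ("Error" is assumed)
    return statuses
```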

Subscription Process of a Data-driven Subscription

The subscription process for data-driven subscriptions includes the following stages:

1. When you configure the properties of a data-driven subscription, you enter the URL to which Data Integration Hub sends notifications when data in the topic that you define in the subscription is ready to consume from the Data Integration Hub publication repository.

2. You create a POST request to run the subscription and fetch the data from the Data Integration Hub publication repository, from the topic that you define in the subscription.

3. When Data Integration Hub sends a notification that data in the topic is ready to consume, you post the request to run the subscription and fetch the data.
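
On the subscriber side, the notify-then-fetch flow can be sketched as a handler for incoming notifications. The run-subscription URL, the `topic` field in the notification body, and the request body are all hypothetical placeholders. The actual URL and payload formats are defined by your Data Integration Hub configuration and are not described in this section.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical placeholders, not real Data Integration Hub values.
RUN_SUBSCRIPTION_URL = "https://dih.example.invalid/run-subscription"
SUBSCRIBED_TOPIC = "orders"


def handle_notification(raw_body: bytes) -> Optional[urllib.request.Request]:
    """Stage 3: on a "data ready" notification, build the POST that runs the subscription."""
    note = json.loads(raw_body)
    if note.get("topic") != SUBSCRIBED_TOPIC:     # hypothetical field name
        return None                               # not our topic: nothing to fetch
    body = json.dumps({"topic": SUBSCRIBED_TOPIC}).encode("utf-8")
    return urllib.request.Request(
        RUN_SUBSCRIPTION_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To receive notifications, the handler would sit behind an HTTP endpoint at the notification URL that you configured, for example one built with Python's `http.server` module, and the returned request would be sent with `urllib.request.urlopen`.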