Developing Data Quality Mappings for Subscriptions

To develop a Data Quality mapping for a subscription, perform the following steps in the Developer tool:

1. Create source and target connections. The source connection is a connection to the Data Integration Hub publication repository and the target connection is a connection to the subscribing application.

2. Create source and target data objects.

3. Create a mapping and add the source and target objects to the mapping.

4. Add a Data Integration Hub parameter to the mapping.

5. Add a Filter transformation to the mapping, configure the transformation filter, and connect ports between the source, the transformation, and the target.

6. Add a Filter query to the Reader object.

7. Configure the mapping run-time environment and create an application from the mapping.

Note: If you are creating a mapping for an unbound subscription, you do not need to add a Filter transformation or a Filter query to the mapping.
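As a quick reference, the relationship between the subscription type and the required steps can be captured in a few lines. The following Python sketch is hypothetical and is not part of Data Integration Hub or the Developer tool; the `required_steps` function and the `unbound` flag are illustrative names, and the step titles paraphrase the list above.

```python
# Hypothetical helper, not part of Data Integration Hub or the Developer tool:
# lists which of the seven steps apply to a bound or an unbound subscription.

STEPS = [
    "Create source and target connections",
    "Create source and target data objects",
    "Create a mapping and add the source and target objects",
    "Add a Data Integration Hub parameter to the mapping",
    "Add a Filter transformation to the mapping",
    "Add a Filter query to the Reader object",
    "Configure the run-time environment and create an application",
]

# Step numbers that an unbound subscription skips (the Filter transformation
# and the Filter query), per the note about unbound subscriptions.
SKIPPED_FOR_UNBOUND = {5, 6}


def required_steps(unbound: bool) -> list[tuple[int, str]]:
    """Return (step number, step title) pairs in the order they are performed."""
    numbered = list(enumerate(STEPS, start=1))
    if not unbound:
        return numbered
    return [(number, title) for number, title in numbered
            if number not in SKIPPED_FOR_UNBOUND]


if __name__ == "__main__":
    for number, title in required_steps(unbound=True):
        print(f"Step {number}. {title}")
```

Keeping the original step numbers in the output makes it easy to match each entry with the step sections that follow.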

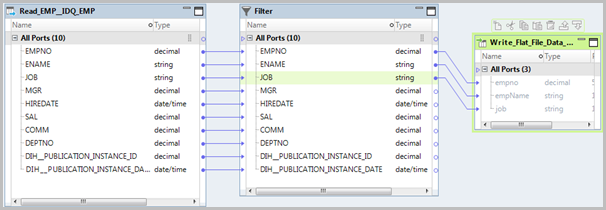

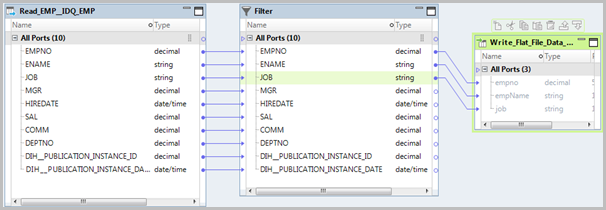

The following image shows a sample subscription mapping:

Step 1. Create Source and Target Connections

1. Create a relational source connection to the Data Integration Hub publication repository.

2. Create an Oracle or a Flat File target connection to the subscribing application.

Step 2. Create Source and Target Data Objects

Create data objects under Physical Data Objects.

1. Create a source data object and select the table in the source connection that the subscription consumes. The object must be a relational data object.

2. Create a target data object and select the table in the target connection that receives the subscribed data. The object can be a relational data object or a flat file data object.
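The connection and data object rules in Steps 1 and 2 can be expressed as a simple check. The sketch below is hypothetical Python: the `DataObject` class, its field names, and the kind strings (`"relational"`, `"oracle"`, `"flat_file"`) are illustrative assumptions, not Developer tool objects or identifiers.

```python
from dataclasses import dataclass


# Hypothetical model of a physical data object; field names and kind
# strings are illustrative, not Developer tool identifiers.
@dataclass(frozen=True)
class DataObject:
    name: str
    object_kind: str      # "relational" or "flat_file"
    connection_kind: str  # "relational", "oracle", or "flat_file"


def validate_source(obj: DataObject) -> list[str]:
    """Source: a relational data object on a relational connection
    to the Data Integration Hub publication repository."""
    errors = []
    if obj.connection_kind != "relational":
        errors.append(f"{obj.name}: source connection must be relational")
    if obj.object_kind != "relational":
        errors.append(f"{obj.name}: source must be a relational data object")
    return errors


def validate_target(obj: DataObject) -> list[str]:
    """Target: an Oracle or flat file connection to the subscribing
    application, and a relational or flat file data object."""
    errors = []
    if obj.connection_kind not in ("oracle", "flat_file"):
        errors.append(f"{obj.name}: target connection must be Oracle or Flat File")
    if obj.object_kind not in ("relational", "flat_file"):
        errors.append(f"{obj.name}: target must be a relational or flat file data object")
    return errors


if __name__ == "__main__":
    # Hypothetical object names used only for illustration.
    source = DataObject("PUB_EMPLOYEES", "relational", "relational")
    target = DataObject("employees_out", "flat_file", "flat_file")
    print(validate_source(source) + validate_target(target))
```

Returning a list of messages rather than raising on the first problem reports every rule violation at once.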

Step 3. Create a Mapping with Source and Target

1. Create and name a new mapping.

2. Add the source physical data object to the mapping as a Reader.

3. Add the target physical data object to the mapping as a Writer.

Step 4. Add Data Integration Hub Parameter to the Mapping

Add the following parameter to the mapping:

<TOPIC_NAME>__DXPublicationInstanceIDs

Where <TOPIC_NAME> is the name of the topic from which the subscriber consumes the data.
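The parameter name is the topic name followed by two underscores and the fixed suffix `DXPublicationInstanceIDs`. The hypothetical Python helper below only illustrates that naming pattern; `publication_ids_parameter` is an illustrative name, and the sketch uses the topic name verbatim without checking any tool-specific naming or case rules.

```python
# Hypothetical helper: builds the mapping parameter name described in Step 4.
# Note the double underscore between the topic name and the suffix.
PARAM_SUFFIX = "__DXPublicationInstanceIDs"


def publication_ids_parameter(topic_name: str) -> str:
    """Return <TOPIC_NAME>__DXPublicationInstanceIDs for the given topic."""
    if not topic_name:
        raise ValueError("topic_name must be the name of the subscribed topic")
    return f"{topic_name}{PARAM_SUFFIX}"


print(publication_ids_parameter("MY_TOPIC"))  # MY_TOPIC__DXPublicationInstanceIDs
```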

Step 5. Add a Filter Transformation to the Mapping

Add a filter condition on the publication date. If you are creating a mapping for an unbound subscription, do not add a Filter transformation to the mapping.

1. Add a Filter transformation to the mapping, between the source and target objects.

2. Link all the ports from the source object to the identical ports in the Filter transformation. For example, link the EMPNO port in the source object to the EMPNO port in the Filter transformation, and link the DXPublicationInstanceID port in the source object to the DXPublicationInstanceID port in the Filter transformation.

3. In the Filter tab of the Filter transformation, configure the following parameter:

<TOPIC_NAME>__PublicationInstanceDates

Where <TOPIC_NAME> is the name of the topic from which the subscriber consumes the data.

Verify that the Default Value of the parameter is set to TRUE.

4. Save the mapping.
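Step 5 links each source port to the Filter port with the same name, and a Filter transformation passes only the rows for which its condition is TRUE. The hypothetical Python sketch below models both ideas outside the Developer tool: `link_ports_by_name` shows the name-matching rule and reports unlinked source ports, and `apply_filter` assumes, for illustration, that the parameter resolves to its default value of TRUE, in which case every row passes. Port names other than EMPNO and DXPublicationInstanceID are illustrative.

```python
from typing import Callable, Iterable

Row = dict[str, object]


def link_ports_by_name(
    source_ports: list[str], filter_ports: list[str]
) -> tuple[list[tuple[str, str]], list[str]]:
    """Pair each source port with the identically named Filter port.

    Returns the (source, filter) links and any source ports left unlinked.
    """
    available = set(filter_ports)
    links = [(port, port) for port in source_ports if port in available]
    unlinked = [port for port in source_ports if port not in available]
    return links, unlinked


def apply_filter(
    rows: Iterable[Row], condition: Callable[[Row], bool] = lambda row: True
) -> list[Row]:
    """Keep only rows for which the condition is TRUE.

    The default condition models a filter parameter left at its default
    value of TRUE, under which every row passes through.
    """
    return [row for row in rows if condition(row)]


if __name__ == "__main__":
    source = ["EMPNO", "ENAME", "DXPublicationInstanceID"]  # ENAME is illustrative
    filter_tx = ["EMPNO", "DXPublicationInstanceID"]
    links, unlinked = link_ports_by_name(source, filter_tx)
    print(links)     # [('EMPNO', 'EMPNO'), ('DXPublicationInstanceID', 'DXPublicationInstanceID')]
    print(unlinked)  # ['ENAME']
```

An unlinked port such as ENAME in this example signals that the Filter transformation is missing a port that the target may need.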

Step 6. Add a Filter Query to the Reader Object

Add a filter condition on the publication ID parameter. If you are creating a mapping for an unbound subscription, do not add a filter query to the mapping.

1. Configure a Filter query on the source with the following mapping parameter:

<TOPIC_NAME>__DXPublicationInstanceIDs

Where <TOPIC_NAME> is the name of the topic from which the subscriber consumes the data.

Do not enclose the filter query parameter in quotation marks.

For example:

dih__publication_instance_id in ($MY_TOPIC__DXPublicationInstanceIDs)

2. Save the mapping.
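To see why the parameter must not be quoted, consider how the filter query reads after the parameter is replaced by its value. The Python sketch below is a plain string substitution, not the Data Integration Service's actual parameter resolution. It assumes, hypothetically, that the parameter resolves at run time to a comma-separated list of numeric publication instance IDs such as `101,102`, and it follows the `$<TOPIC_NAME>__DXPublicationInstanceIDs` form from item 1. The function name `expand_filter_query` and the ID values are illustrative.

```python
def expand_filter_query(query: str, topic_name: str, instance_ids: list[int]) -> str:
    """Replace $<TOPIC_NAME>__DXPublicationInstanceIDs with a comma-separated ID list.

    Illustrative only: assumes the parameter value is a list of numeric IDs.
    """
    placeholder = f"${topic_name}__DXPublicationInstanceIDs"
    if placeholder not in query:
        raise ValueError(f"query does not reference {placeholder}")
    value = ",".join(str(i) for i in instance_ids)
    return query.replace(placeholder, value)


query = "dih__publication_instance_id in ($MY_TOPIC__DXPublicationInstanceIDs)"
print(expand_filter_query(query, "MY_TOPIC", [101, 102]))
# dih__publication_instance_id in (101,102)
```

Under this assumption, quoting the parameter would produce `in ('101,102')`, a single string literal that does not match any individual numeric ID, which is why the parameter is left unquoted.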

Step 7. Configure the Mapping Run-time Environment and Create an Application

1. In the Properties pane, select Run-time, and then under Validation Environments, select Native.

2. Create an application from the mapping.

The mapping is deployed to the Data Integration Service native environment.