Cache Configuration

Proxy Cache

The EntityProxyCache resolves any entity item by its external identifier. In addition, it holds the current Object Permission ID (aka AclID) and the last-modified timestamp for each object. Because of this, it is also used within the Status Cache and whenever a list of objects is filtered by permission.

This cache plays an important role in various use cases. Every time customers provide an identifier to read, update, or create an object in Product 360, the identifier first needs to be resolved: the internal ID of the object must be found so the system knows whether the request is a creation or an update. This is crucial for import, the Service API, and other use cases. Even manual creation of an item uses this cache to check whether the item already exists.

Without a cache, this resolve process would require a database query for each call, putting high load on the database layer.

Because this cache is so important, its implementation is optimized and the data is kept fully in memory. During server startup the cache is preloaded with all objects. This way, the cache knows whether an item is in the system, and a database query for new items can be avoided.

Available Settings

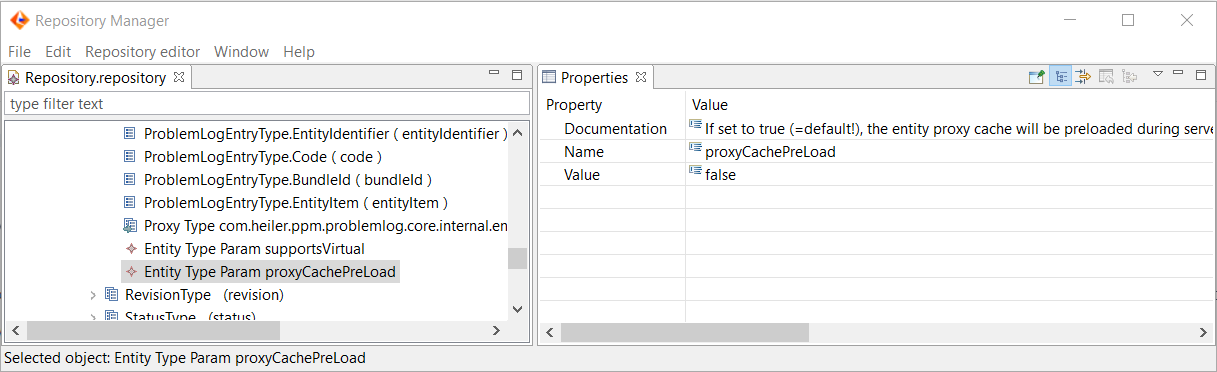

Disable Pre-Load

The preload of the proxy cache can be disabled for specific entity types by using an entity type param in the repository.

The proxy cache is still active for these entity types; it is just not preloaded during startup.

Enable database as final source

A preference for the proxy cache enables the database as the final source of truth. If resultIsNeverFinal is set to true, the cache checks the database to confirm that an item is really not there. This slows down item creation scenarios!

    # If set to true the proxy cache will never return his results as "final", even if all data has been preloaded
    # during startup. See also HPM-53444. This should only be activated after consultation with R&D as it slows down
    # the system in case of new items
    com.heiler.ppm.std.server/entity-proxy-cache.resultIsNeverFinal = false

In past releases the synchronization between servers of a multi-server deployment had an event buffer delay of about 5 seconds.

This has been resolved and the delay between the servers is now typically below 5 milliseconds, depending on the network infrastructure of course.

resultIsNeverFinal should not be enabled unless Informatica Support instructs you to do so.
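
The interplay between preloading and resultIsNeverFinal described in this section can be illustrated with a minimal sketch. It is not the Product 360 implementation: the class `ProxyCacheSketch`, its fields, and the `databaseLookup` function are hypothetical names introduced only for illustration, and a plain map stands in for the optimized in-memory structure.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical sketch of an identifier-to-internal-ID cache; all names are assumptions. */
final class ProxyCacheSketch {
    private final Map<String, Long> idsByIdentifier = new ConcurrentHashMap<>();
    private final Function<String, Optional<Long>> databaseLookup; // stands in for a database query
    private final boolean preloaded;          // true if all objects were loaded during startup
    private final boolean resultIsNeverFinal; // mirrors the preference of the same name
    int databaseQueries = 0;                  // demo counter only, not thread-safe

    ProxyCacheSketch(Map<String, Long> preloadedEntries, boolean preloaded,
                     boolean resultIsNeverFinal,
                     Function<String, Optional<Long>> databaseLookup) {
        this.idsByIdentifier.putAll(preloadedEntries);
        this.preloaded = preloaded;
        this.resultIsNeverFinal = resultIsNeverFinal;
        this.databaseLookup = databaseLookup;
    }

    /** Resolves an external identifier to an internal ID, or empty if the object is new. */
    Optional<Long> resolve(String identifier) {
        Long cached = idsByIdentifier.get(identifier);
        if (cached != null) {
            return Optional.of(cached);
        }
        // A miss is final only if the cache was preloaded and the preference is off.
        if (preloaded && !resultIsNeverFinal) {
            return Optional.empty();
        }
        // Otherwise the database is asked before the object is treated as new.
        databaseQueries++;
        Optional<Long> fromDatabase = databaseLookup.apply(identifier);
        fromDatabase.ifPresent(id -> idsByIdentifier.put(identifier, id));
        return fromDatabase;
    }

    public static void main(String[] args) {
        Map<String, Long> entries = new ConcurrentHashMap<>();
        entries.put("A-1", 42L);
        ProxyCacheSketch cache = new ProxyCacheSketch(entries, true, false, id -> Optional.empty());
        System.out.println(cache.resolve("A-1") + " " + cache.resolve("NEW")
                + " queries=" + cache.databaseQueries);
    }
}
```

With preloading enabled and the preference left at false, a lookup for a new identifier never reaches the database in this sketch. Setting the flag to true costs one query per unknown identifier, which is the slowdown in creation scenarios mentioned above.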

Status Cache

The status cache holds all data quality results for all objects in an optimized and compressed form in memory. It can filter a list of items based on data quality results in a matter of seconds without additional database queries. It also calculates the channel-specific data quality status by combining the results of the rules on the fly. The status cache is fundamental to data quality dashboards, filters and queries.

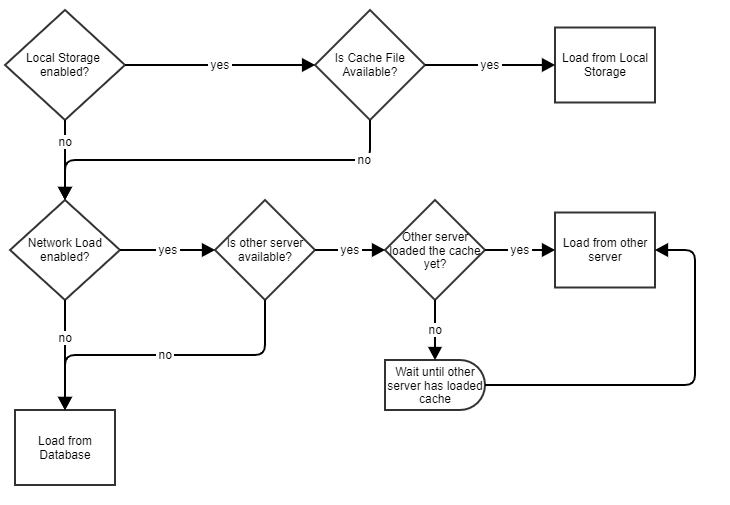

During startup the cache is fully initialized from the database. Starting with version 10.1.0.02, the startup behavior was drastically optimized: intelligent strategies use already running servers or the server's local hard disk to improve startup performance.

A fallback mechanism always makes sure that the status cache is loaded in the fastest way possible:

Load from local storage

When a server is shut down in an orderly manner, it also stores the status cache on the local disk to reduce the time of the next startup. A corresponding check file is saved so that the server can recognize whether it was shut down in an orderly manner. Persisting the cache locally adds a few minutes to the shutdown, depending on the size of the cache.

    # Allows the status cache initialization from local storage.
    # Default: true
    com.heiler.ppm.status.server/statusCache.localStorageEnabled = true

    # The local file path of the status cache snapshot
    # Default: empty (using workspace)
    com.heiler.ppm.status.server/statusCache.localStoragePath =

    # The maximum amount of parallel threads to read the status cache from the local storage.
    # This process is I/O bound, i.e. increasing parallel degree could lead to worse performance.
    # In case there are less CPU cores available, all CPU cores except one will be utilized.
    # Default: 4
    com.heiler.ppm.status.server/statusCache.localStorageParallelDegree = 4

Load over network

In a multi-server scenario, all but the first server load the cache from an already running server. This improves the startup time even further and reduces the overall load on the database during cluster startup (as only one server needs to access the persisted data there). This is especially useful in deployment scenarios in which the IOPS of the database are limited.

    # Allows the status cache initialization over network.
    # Default: true
    com.heiler.ppm.status.server/statusCache.networkEnabled = true

    # The amount of status cache elements contained in every network request.
    # Default: 500000
    com.heiler.ppm.status.server/statusCache.networkBatchSize = 500000

    # The maximum amount of parallel threads to transfer the status cache over the network.
    # While there can be only one active socket at a time, the other threads will be preparing their payload.
    # In case there are less CPU cores available, all CPU cores except one will be utilized.
    # Default: 4
    com.heiler.ppm.status.server/statusCache.networkParallelDegree = 4

Load from database

The status cache initialization uses multiple threads to load the data quality results from the database.
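
Taken together, the three load strategies can be read as a fallback chain. The sketch below is only an illustration under stated assumptions: the order (local storage, then network, then database) follows the order in which this document lists the strategies and is not confirmed as the actual priority, and the class, enum, and parameter names are hypothetical. The helper `effectiveParallelDegree` is one interpretation of the preference comment "in case there are less CPU cores available, all CPU cores except one will be utilized"; the lower bound of one thread is an added assumption.

```java
/** Hypothetical sketch of the status cache load fallback; names and order are assumptions. */
final class StatusCacheLoadSketch {
    enum Source { LOCAL_STORAGE, NETWORK, DATABASE }

    /** Picks the first usable source; the database is always the last resort. */
    static Source chooseSource(boolean localStorageEnabled, boolean snapshotFromOrderlyShutdownExists,
                               boolean networkEnabled, boolean otherServerRunning) {
        if (localStorageEnabled && snapshotFromOrderlyShutdownExists) {
            return Source.LOCAL_STORAGE;
        }
        if (networkEnabled && otherServerRunning) {
            return Source.NETWORK;
        }
        return Source.DATABASE;
    }

    /** Uses the configured degree, or (cores - 1) when fewer cores than configured are available. */
    static int effectiveParallelDegree(int configuredDegree, int availableCores) {
        if (availableCores < configuredDegree) {
            return Math.max(1, availableCores - 1); // assumed lower bound of one thread
        }
        return configuredDegree;
    }

    public static void main(String[] args) {
        System.out.println(chooseSource(true, false, true, true));
        System.out.println(effectiveParallelDegree(4, Runtime.getRuntime().availableProcessors()));
    }
}
```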

Preferences to control the database load:

    # Status cache initialization will utilize multiple threads once the threshold has been reached.
    # Default: 200000
    com.heiler.ppm.status.server/statusCache.parallelTreshold = 200000

    # The maximum amount of concurrent threads to query the database.
    # Default: empty (using "number of DB CPU cores" configured in server.properties)
    com.heiler.ppm.status.server/statusCache.parallelDegree =

Persistence Cache

The persistence cache, aka data graph cache, is a lazily loaded cache based on the Caffeine cache framework. Every time the data (aka the detail model) of an object is read, the persistence cache is used. If the object is in the cache, it is returned from there. When the object is modified, it is removed from the cache, and the next read request puts it back in.
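
The read and invalidate cycle described above can be sketched as follows. This is a plain-JDK illustration and not the Caffeine-based implementation: `LazyObjectCacheSketch`, `read`, and `onModified` are hypothetical names, and weight-based eviction and expiry are left out entirely.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical sketch of a lazily loaded cache that drops entries on modification. */
final class LazyObjectCacheSketch<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // stands in for reading the detail model from the database
    int loads = 0;                       // demo counter only, not thread-safe

    LazyObjectCacheSketch(Function<K, V> loader) {
        this.loader = loader;
    }

    /** Returns the cached value, loading it on the first read. */
    V read(K key) {
        return entries.computeIfAbsent(key, k -> {
            loads++;
            return loader.apply(k);
        });
    }

    /** Removes the entry so that the next read loads a fresh copy. */
    void onModified(K key) {
        entries.remove(key);
    }

    public static void main(String[] args) {
        LazyObjectCacheSketch<String, String> cache = new LazyObjectCacheSketch<>(k -> "detail:" + k);
        cache.read("item-1");
        cache.read("item-1");       // served from the cache
        cache.onModified("item-1");
        cache.read("item-1");       // loaded again
        System.out.println("loads=" + cache.loads);
    }
}
```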

With the introduction of the Service API's ObjectAPI, this cache becomes even more important. Because the ObjectAPI uses the detail model to obtain all data for a single entity item, its data access also goes through the persistence cache. Integration scenarios that make heavy use of the ObjectAPI may well profit from an increase in available memory for this cache. Customers could also deploy a separate server, e.g. primarily for REST and MQ processing, and give its cache a larger share of the heap to improve caching.

Whether that really improves performance can only be determined by monitoring the cache utilization.

Configuration

The cache is configured in the CacheConfig.xml file. By default, the file contains two cache configurations: a global one, and a specific one for JobHistoryType and ProblemLogEntryType. These two entity types are used in a special way, and it makes little sense to keep them in memory as long as other entity types.

The global cache is used for all other entity types, like ArticleType (Item, Product, Variant) but also StructureTypes and UserTypes etc.

The persistence cache is not configured with a "maximum number of objects" but with a specific amount of memory it is allowed to use. The data in the cache is stored as compressed byte arrays, which allows a larger number of objects to be kept in the cache with a reduced memory footprint. The maxWeight parameter defines the available memory for the cache in megabytes or as a percentage of the heap. The global cache is configured to use 20% of the available heap, whereas the other cache is configured to use a maximum of 128 MB.

    <!-- Global Cache for all EntityTypes -->
    <cacheConfig>
      <cacheName>persistenceManagerCache.global</cacheName>
      <entityTypes></entityTypes>
      <isGlobal>true</isGlobal>
      <isEternal>false</isEternal>
      <initialSize>1000000</initialSize>
      <maxElements>-1</maxElements>
      <maxWeight>20%</maxWeight>
      <timeToIdleSeconds>3600</timeToIdleSeconds>
      <timeToLiveSeconds>3600</timeToLiveSeconds>
    </cacheConfig>

    <!-- JobHistoryType, ProblemLogEntryType -->
    <cacheConfig>
      <cacheName>persistenceManagerCache.job</cacheName>
      <entityTypes>JobHistoryType,ProblemLogEntryType</entityTypes>
      <isGlobal>false</isGlobal>
      <isEternal>false</isEternal>
      <initialSize>10000</initialSize>
      <maxElements>-1</maxElements>
      <maxWeight>128</maxWeight>
      <timeToIdleSeconds>300</timeToIdleSeconds>
      <timeToLiveSeconds>300</timeToLiveSeconds>
    </cacheConfig>

| Element | Description |
| --- | --- |
| cacheName | The unique name of the cache. Used to prefix all metrics of this cache instance. |
| entityTypes | Comma-separated list of entity types this cache is responsible for. Can only be empty when isGlobal is true (and there can only be one global cache!). |
| isGlobal | True if this cache configuration is global, false if not. Only one cache can be defined as global. |
| isEternal | True if the entries in the cache should not be removed after a period of time. timeToIdle and timeToLive are ignored in this case. |
| initialSize | Sets the minimum total size for the internal data structures of the cache. Providing a large enough estimate avoids the need for expensive resizing operations later, but setting this value unnecessarily high wastes memory. |
| maxElements | Maximum number of elements in the cache. If -1 is used, there is no maximum number of objects. The cache is then unlimited (or limited by the weight!). |
| maxWeight | The maximum amount of memory the cache can use. If zero or below, no maximum is defined. |
| timeToIdleSeconds | Specifies that each entry should be automatically removed from the cache once a fixed duration has elapsed after the entry's creation, the most recent replacement of its value, or its last read. (In seconds) |
| timeToLiveSeconds | Specifies that each entry should be automatically removed from the cache once a fixed duration has elapsed after the entry's creation, or the most recent replacement of its value. (In seconds) |
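
To make the maxWeight semantics concrete (a value in megabytes or a percentage of the heap, with zero or below meaning no maximum), here is a minimal sketch of how such a value could be turned into a byte budget. It is not the product's parser: `MaxWeightSketch` and `resolveMaxWeightBytes` are hypothetical names, a megabyte is assumed to be 1024 * 1024 bytes, only whole-number values are handled, and the use of `Runtime.maxMemory()` as "the heap" is likewise an assumption.

```java
/** Hypothetical sketch: converts a maxWeight setting such as "20%" or "128" into bytes. */
final class MaxWeightSketch {
    /** Marker for "no maximum defined". */
    static final long UNLIMITED = -1L;

    static long resolveMaxWeightBytes(String maxWeight, long maxHeapBytes) {
        String value = maxWeight.trim();
        if (value.endsWith("%")) {
            // Percentage of the heap, e.g. "20%".
            long percent = Long.parseLong(value.substring(0, value.length() - 1).trim());
            return percent <= 0 ? UNLIMITED : maxHeapBytes * percent / 100;
        }
        // Plain number: megabytes, e.g. "128".
        long megabytes = Long.parseLong(value);
        return megabytes <= 0 ? UNLIMITED : megabytes * 1024L * 1024L;
    }

    public static void main(String[] args) {
        long heap = Runtime.getRuntime().maxMemory();
        System.out.println("global: " + resolveMaxWeightBytes("20%", heap) + " bytes");
        System.out.println("job:    " + resolveMaxWeightBytes("128", heap) + " bytes");
    }
}
```

Because the global cache takes a share of the heap, its absolute budget grows when the server is given more heap, while the 128 MB cache stays fixed.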

Monitoring

The persistence cache is fully integrated in the Micrometer framework and valuable metrics are available.

Other Caches (EH-Cache based)

EH-Cache Configuration

For details on the general structure of the ehcache.xml file, see the official EH-Cache documentation. This document covers only a small part of it.

| Mandatory Attributes | Description |
| --- | --- |
| name | Sets the name of the cache. This is used to identify the cache. It must be unique. This must never be changed as the application uses the name to find the configuration! |
| maxElementsInMemory | Sets the maximum number of objects that will be created in memory. |
| maxElementsOnDisk | Sets the maximum number of objects that will be maintained in the DiskStore. The default value is zero, meaning unlimited. |
| eternal | Sets whether elements are eternal. If eternal, timeouts are ignored and the element is never expired. |
| overflowToDisk | Sets whether elements can overflow to disk when the memory store has reached the maxInMemory limit. |

| Optional Attributes | Description |
| --- | --- |
| timeToIdleSeconds | Sets the time to idle for an element before it expires, i.e. the maximum amount of time between accesses before an element expires. |
| timeToLiveSeconds | Sets the time to live for an element before it expires, i.e. the maximum time between creation time and when an element expires. |
| diskPersistent | Whether the disk store persists between restarts of the Virtual Machine. The default value is false. |
| diskExpiryThreadIntervalSeconds | The number of seconds between runs of the disk expiry thread. The default value is 120 seconds. |
| diskSpoolBufferSizeMB | This is the size to allocate the DiskStore for a spool buffer. Writes are made to this area and then asynchronously written to disk. The default size is 30MB. |
| clearOnFlush | Whether the MemoryStore should be cleared when flush() is called on the cache. By default, this is true, i.e. the MemoryStore is cleared. |
| memoryStoreEvictionPolicy | Policy would be enforced upon reaching the maxElementsInMemory limit. Default policy is Least Recently Used (specified as LRU). Other policies available - |

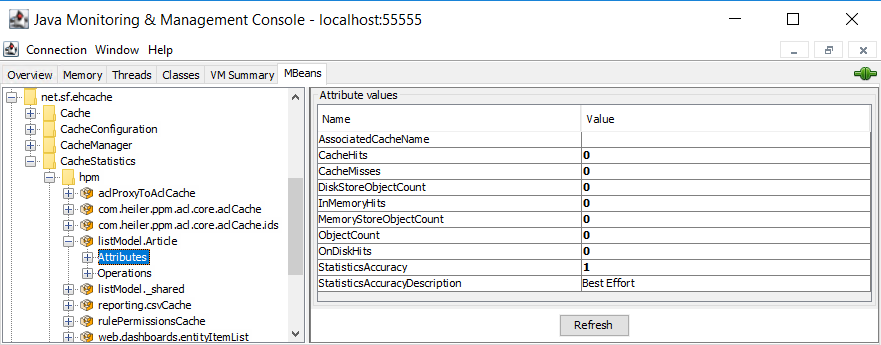
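
The combined effect of eternal, timeToIdleSeconds, and timeToLiveSeconds, as described in the tables above, can be sketched as a single expiry check. This is an illustration of those descriptions and not EH-Cache code: `ExpirySketch` and `isExpired` are hypothetical names, and any special meaning that particular values (such as zero) may have in EH-Cache is deliberately not modeled.

```java
/** Hypothetical sketch of how idle and live timeouts combine; not EH-Cache's implementation. */
final class ExpirySketch {
    /**
     * All timestamps are in seconds. An element expires when it has been idle longer than
     * timeToIdleSeconds OR has existed longer than timeToLiveSeconds, unless it is eternal.
     */
    static boolean isExpired(boolean eternal, long createdAtSec, long lastAccessedAtSec,
                             long nowSec, long timeToIdleSeconds, long timeToLiveSeconds) {
        if (eternal) {
            return false; // timeouts are ignored for eternal elements
        }
        boolean idleTooLong = nowSec - lastAccessedAtSec > timeToIdleSeconds;
        boolean livedTooLong = nowSec - createdAtSec > timeToLiveSeconds;
        return idleTooLong || livedTooLong;
    }

    public static void main(String[] args) {
        // Created at t=0, last read at t=500, checked at t=1200 with idle=600 and live=3600.
        System.out.println(isExpired(false, 0, 500, 1200, 600, 3600));
    }
}
```

The practical consequence is that frequent reads can keep an element alive past its idle timeout, but never past its time to live.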

Monitoring

EH-Cache can be monitored with JMX.

The MemoryStoreObjectCount is very important here, especially in combination with the CacheHits and CacheMisses.

If there are only a few CacheHits and a lot of CacheMisses, the cache is not very useful at all.

In case of the List Model Cache this tends to be a hint for a wrong usage of the Service API V1 (in which the caching is enabled by default).

If the object count is at its limit and the hit rate is high, the cache is very effective, but it could also profit from a higher maxElementsInMemory setting.

EH-Cache tends to block on cache operations once the cache has reached its maximum, which degrades throughput.
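
The reasoning above can be turned into a small helper that classifies a cache from its JMX readings. This is a sketch only: `CacheStatsSketch`, the `Hint` values, and in particular the `lowRatio` and `highRatio` thresholds are hypothetical and not recommended values. The inputs correspond to the CacheHits, CacheMisses, and MemoryStoreObjectCount readings and the configured maxElementsInMemory.

```java
/** Hypothetical sketch for interpreting cache statistics; thresholds are caller-supplied assumptions. */
final class CacheStatsSketch {
    enum Hint { NO_DATA, INEFFECTIVE, EFFECTIVE_BUT_FULL, OK }

    /** Fraction of lookups served from the cache; 0.0 when there were no lookups yet. */
    static double hitRatio(long hits, long misses) {
        long total = hits + misses;
        return total == 0 ? 0.0 : (double) hits / total;
    }

    static Hint interpret(long hits, long misses, long objectCount, long maxElementsInMemory,
                          double lowRatio, double highRatio) {
        if (hits + misses == 0) {
            return Hint.NO_DATA;
        }
        double ratio = hitRatio(hits, misses);
        if (ratio < lowRatio) {
            return Hint.INEFFECTIVE; // few hits, many misses
        }
        if (ratio >= highRatio && objectCount >= maxElementsInMemory) {
            return Hint.EFFECTIVE_BUT_FULL; // may profit from a higher maxElementsInMemory
        }
        return Hint.OK;
    }

    public static void main(String[] args) {
        System.out.println(interpret(90, 10, 100, 100, 0.2, 0.8));
    }
}
```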

List Model Cache

The List Model Cache is only used in the Service API's ListAPI of Product 360 - specifically for the purpose of "paging" in larger list models.

If client applications do not want to, or simply cannot, process large list models at once, they can use the List Model Cache to page through them.

This way the larger list model is kept on the server until the time to live is expired or the client sends an evict message.

The ehCache.xml file has two cache configurations predefined. One specific for the Item entity (listModel.Article) and one for all other entities (listModel._shared).

As described in the ehCache.xml file, you can also add a specific configuration for other entities if needed. The application checks whether there is an appropriate configuration in the ehCache.xml file; if not, it uses listModel._shared.
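
The lookup rule just described (an entity-specific configuration if present, otherwise listModel._shared) can be sketched as follows. The class and method names are hypothetical, and treating the name match as case-sensitive is an assumption.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of choosing the list model cache configuration for an entity. */
final class ListModelCacheNameSketch {
    static final String PREFIX = "listModel.";
    static final String SHARED = "listModel._shared";

    /** Returns the entity-specific cache name if it is configured, else the shared one. */
    static String resolveCacheName(String entity, Set<String> configuredCacheNames) {
        String specific = PREFIX + entity;
        return configuredCacheNames.contains(specific) ? specific : SHARED;
    }

    public static void main(String[] args) {
        Set<String> configured = new HashSet<>(Arrays.asList("listModel._shared", "listModel.Article"));
        System.out.println(resolveCacheName("Article", configured));
        System.out.println(resolveCacheName("Structure", configured));
    }
}
```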

An "element" in the context of a list model cache is a "full list model", not a single row of the model. Be careful with this cache: it can easily consume quite a lot of memory.

    <!-- shared cache for list models. Comment it to disable shared list mode caching -->
    <cache name="listModel._shared"
           maxElementsInMemory="100"
           eternal="false"
           timeToIdleSeconds="600"
           timeToLiveSeconds="3600"
           overflowToDisk="false"
           memoryStoreEvictionPolicy="LRU"/>

    <!-- entity specific cache for list models. use 'listmodel.' prefix to configure cache for a specific entity -->
    <cache name="listModel.Article"
           maxElementsInMemory="100"
           eternal="false"
           timeToIdleSeconds="600"
           timeToLiveSeconds="3600"
           overflowToDisk="false"
           memoryStoreEvictionPolicy="LRU"/>

Dashboard Cache

For details on this cache, see "Data caching for dashboard components".