Data Quality Operation

Logging

Logging for data quality execution can be increased on several levels: rule engine execution, data provisioning, and performance analysis.

Rule engine execution

To see logging information for the execution of a data quality rule by the rule engine, set the category 'IDQ' to DEBUG in the server's log4j.xml:

    <category name="IDQ">
      <priority value="DEBUG"/>
    </category>

As opposed to normal logging information, a server restart is required for changes to take effect.

The logs are written to separate files in the server logs folder. Each file name follows the pattern <Execution Thread name>-<Executed rule name>-<Execution start time>.log
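Because the change only takes effect after a restart, it can be worth verifying the edited log4j.xml first. The following is a minimal sketch, assuming the file uses the classic log4j 1.x XML layout with `<category>` and `<priority>` elements as shown above. The helper names and the sample document are illustrative and not part of the product.

```python
import xml.etree.ElementTree as ET


def category_levels(log4j_xml: str) -> dict:
    """Return a mapping of category name to configured priority value."""
    root = ET.fromstring(log4j_xml)
    levels = {}
    for category in root.iter("category"):
        priority = category.find("priority")
        if priority is not None:
            levels[category.get("name")] = priority.get("value")
    return levels


def categories_not_at_debug(log4j_xml: str, required) -> list:
    """List the required categories that are missing or not set to DEBUG."""
    levels = category_levels(log4j_xml)
    return [name for name in required if levels.get(name) != "DEBUG"]


# Hypothetical excerpt of a log4j.xml, for illustration only
SAMPLE = """<log4j:configuration xmlns:log4j="http://jakarta.apache.org/log4j/">
  <category name="IDQ">
    <priority value="DEBUG"/>
  </category>
  <category name="DQ_EXECUTION">
    <priority value="INFO"/>
  </category>
</log4j:configuration>"""

levels = category_levels(SAMPLE)
pending = categories_not_at_debug(SAMPLE, ["IDQ", "DQ_EXECUTION"])
print(levels)
print(pending)
```

The same check can be applied to the other categories described in this section by extending the list of required names.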

Data provisioning

To see logging information for data provisioning (data input into and data output from a rule), set the category 'DQ_EXECUTION' to DEBUG in the server's log4j.xml:

    <category name="DQ_EXECUTION">
      <priority value="DEBUG"/>
    </category>

The output is available in the usual error.log and console.log files. Example output:

    13:01:25,166 DEBUG [exe-IDQ-SDK-Check_PresetValues-2] [DQ_EXECUTION] DQ IN line: 0 (rule: preset violiation) inObjectID: '33762@1' (field: Object_ID) inAttributeName: 'Color' (field: ArticleAttribute:name) inLanguage: 'English' (field: ArticleAttributeLang:language) inStructure: '10058' (field: ArticleAttributeStructureGroupAttributeMap:structure) inStructureGroup: '232@10058' (field: ArticleAttributeStructureGroupAttributeMap:structureGroup) inStructureFeature: '74@10058' (field: ArticleAttributeStructureGroupAttributeMap.StructureAttribute) inAttributeValue: 'green2' (field: ArticleAttributeValue.Value) inValueIdentifier: 'DEFAULT' (field: ArticleAttributeValue:identifier) inStructurePresetValue: '' (field: ArticleAttributeStructureGroupAttributeMap.DomainValue) inDataType: 'Character string' (field: ArticleAttribute.Datatype)

    13:01:25,317 DEBUG [exe-IDQ-SDK-Check_PresetValues-1] [DQ_EXECUTION] DQ OUT line: 0 (rule: preset violiation) status_code: '0' (field: QualityStatusEntry.Status) status_message: 'Primary, Pflichtgruppe, Feature 'Color': Value 'green2' (English, DEFAULT) of attribute 'Color' does not conform to the preset values' (field: QualityStatusEntry.Message) object_id: '33762@1' (field: Object_ID)

Performance analysis

Set the following category to DEBUG for additional information about DQ initialization parameters and DQ Executor Pool usage:

    <category name="com.heiler.ppm.dataquality.server">
      <priority value="DEBUG"/>
    </category>

Performance tuning

Increase the maximum number of jobs executed in parallel by adjusting the setting com.heiler.ppm.job.server/maxRunningServerJobs accordingly.

The properties of each pool are available via JMX, and the size of each DQ Executor Pool can also be adjusted at runtime via JMX (MBeans → com.heiler.ppm → dataQuality → dqExecutorPools).

Use synchronous execution of DQ via the REST API instead of starting asynchronous jobs and waiting for the job result (e.g. when executing rules inside a BPM workflow).

This decreases the general load on the job framework and avoids the overhead of job scheduling; see also: Rest Management API -> Rest Data Quality API -> Rule Execution.

The precision of the mapping input ports should be sized as small as reasonably possible, since this has a direct impact on rule execution performance and DTM memory allocation.

Rule execution performance can benefit from a lower value for com.heiler.ppm.dataquality.server/dataquality.executor.pageSize, because the data sets that need to be retrieved are smaller and therefore load faster.

Also consider a smaller value for com.heiler.ppm.dataquality.server/dataquality.executor.pageSize in general: the executor can then be used earlier in a different execution scenario while the system is busy writing the current elements of the DQ execution result to the database (the executor pool serves requests FIFO).

Rule execution performance can benefit from a higher value for com.heiler.ppm.dataquality.server/dataquality.execution.dtmMaxMemPerRequest, which avoids DTM swapping cache and index files to disk during rule execution. However, this is subject to the available physical memory of the host. An alternative is to move the temporary DQ folder to a RAM disk by setting com.heiler.ppm.dataquality.server/dataquality.execution.tmpFileLocation.

Depending on the hardware, the setting com.heiler.ppm.dataquality.server/dataquality.execution.maxThreads can be increased to utilize the available CPU cores.
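To make the effect of the page size more tangible, the sketch below splits a list of object IDs into pages. It only illustrates the arithmetic; the function name and the generated IDs are hypothetical, and the actual executor may load its data differently.

```python
from typing import Iterator, List, Sequence


def pages(object_ids: Sequence[str], page_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most page_size object IDs."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    for start in range(0, len(object_ids), page_size):
        yield list(object_ids[start:start + page_size])


# 25,000 made-up object IDs in the 'id@catalog' style seen in the log output
ids = [f"{n}@1" for n in range(25_000)]

default_pages = list(pages(ids, 10_000))  # default pageSize of 10000
small_pages = list(pages(ids, 2_500))     # a smaller, illustrative pageSize

print(len(default_pages), len(small_pages))
```

With the smaller page size the same data is processed in more but shorter batches, which is what allows an executor to be handed back to the pool sooner.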

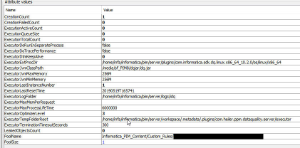

The following properties in preferences.ini are shown with their default values and may be adjusted to improve performance, depending on the use case:

    # Enables an alternative implementation for the DQ Rule Check_IsEmpty that doesn't use the IDQ SDK.
    # Set to true in case you want to avoid DTM overhead for simple isEmpty checks.
    # Default: false
    com.heiler.ppm.dataquality.server/dataquality.alternativeCheckIsEmpty=false

    # Specifies the page size when loading the list model of data to be checked for IDQ rule execution.
    # Default: 10000 objects
    com.heiler.ppm.dataquality.server/dataquality.executor.pageSize=10000

    # Maximum amount of concurrent executors in the executor pool.
    # Each executor can run independent from each other to avoid contention on specific IDQ rules.
    # Default: 2
    com.heiler.ppm.dataquality.server/dataquality.executionPool.maxTotalPerRule=2

    # Example: maximum amount of concurrent executors in the executor pool per specific IDQ rule.
    # The '/' in the rule identifier has to be replaced with '_'
    com.heiler.ppm.dataquality.server/dataquality.executionPool.maxTotalPerRule.Informatica_PIM_Content_PreDefined_Rules_Check_DataTypes=5

    # Specifies in seconds how long the MappingExecutor instances will be kept in the pool before aging out.
    # Exceptions:
    # - Executors will be destroyed immediately in case a new version of a rule will be uploaded
    # - Executors will be destroyed immediately when related dictionaries have been changed
    # - Executors can only age out without a dictionary change in case they have been used at least once
    # Default: 3600 seconds (60 minutes)
    com.heiler.ppm.dataquality.server/dataquality.executionPool.executorKeepAliveInterval=3600

    # Example: specifies in seconds how long the MappingExecutor instances will be kept in the pool before aging out per specific IDQ rule.
    # The '/' in the rule identifier has to be replaced with '_'
    com.heiler.ppm.dataquality.server/dataquality.executionPool.executorKeepAliveInterval.Informatica_PIM_Content_PreDefined_Rules_Check_DataTypes=14400

    # Specifies the amount in bytes the DTM should be allocating from the host OS memory (note that this is not JVM heap memory).
    # If configured properly this will avoid swapping cache files to disk during transformations.
    # Default: empty (DTM will automatically determine a value)
    dataquality.execution.dtmMaxMemPerRequest=

    # Specifies the amount in bytes the DTM should be allocating from the host OS memory per specific IDQ rule.
    # The '/' in the rule identifier has to be replaced with '_'
    #dataquality.execution.dtmMaxMemPerRequest.Informatica_PIM_Content_PreDefined_Rules_Check_DataTypes=58720256

    # Specifies the amount of threads in the IDQ SDK executor thread pool resp. how many executors can run at the same time.
    # This roughly translates to dedicated CPU cores for IDQ execution.
    # Default: 5
    com.heiler.ppm.dataquality.server/dataquality.execution.maxThreads=5

    # Specifies the location for the temporary files for each executor that are created during IDQ execution.
    # Default: empty (using 'workspace/.metadata/com.heiler.ppm.dataquality.server' as default)
    dataquality.execution.tmpFileLocation=

An additional note on the property dataquality.executionPool.maxTotalPerRule: all rule executors are already created during server startup in background threads.
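The per-rule variants of these properties follow two conventions stated in the comments: the '/' in the rule identifier is replaced with '_', and memory sizes are given in bytes. The sketch below shows both steps. The helper names are illustrative, and the slash-separated rule identifier is an assumption about what the identifier looked like before the replacement.

```python
MIB = 1024 * 1024  # bytes per mebibyte


def per_rule_key(base_key: str, rule_identifier: str) -> str:
    """Build the per-rule property key by replacing '/' with '_'."""
    return f"{base_key}.{rule_identifier.replace('/', '_')}"


def mib_to_bytes(mib: int) -> int:
    """Convert a size in MiB to the byte value expected by the property."""
    return mib * MIB


# Assumed original form of the rule identifier (hypothetical)
rule = "Informatica_PIM_Content/PreDefined_Rules/Check_DataTypes"

key = per_rule_key("dataquality.execution.dtmMaxMemPerRequest", rule)
line = f"{key}={mib_to_bytes(56)}"
print(line)
```

Under these assumptions the generated line matches the commented-out example in the listing, whose value of 58720256 bytes corresponds to 56 MiB.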

Cleanup of Status Entries

When data quality is executed, the result is written to the database table StatusEntry, with a reference to the data quality rule configuration that was executed.

If a data quality rule configuration is deleted (e.g. via Desktop Client DQ Perspective), the status entries for that deleted configuration are not deleted immediately.

A cleanup job handles this; by default it runs every day at midnight.

The identifier of that job is 'CleanUpDataQualityStatusEntries' and its (English) name is 'Status entries cleanup (scheduled)'.

    com.heiler.ppm.dataquality.server/dataquality.status.cleanup.job.pattern = 0 0 0 ? * 1,2,3,4,5,6,7

Additionally, it is recommended to activate soft deletion of status entries. To do this, use the existing soft delete job.

Please refer to the 'Soft Delete Cleanup Job' chapter in the 'Server Operation' documentation and apply it to the sub entity type 'StatusEntryType'. Also make sure to configure the lifetime for the root entity type 'StatusType' to a value other than "unlimited" (e.g. '1y'), and be aware that other sub entity types of 'StatusType' (e.g. 'PublicationStatusEntryType', which is used by the GDSN Accelerator) will then be cleaned up as well.

This is because the most specific definition for an entity is used. If you define a lifetime for a root entity, you can override it for a sub entity, but only if the lifetime for the sub entity is shorter than that of the parent.
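The schedule pattern of the cleanup job, `0 0 0 ? * 1,2,3,4,5,6,7`, can be read field by field. The sketch below assumes Quartz-style cron syntax with six fields, which the `?` placeholder suggests but which this document does not state explicitly. The function name and the second pattern are illustrative.

```python
# Field order assumed from Quartz-style cron expressions
QUARTZ_FIELDS = ("seconds", "minutes", "hours", "day_of_month", "month", "day_of_week")


def describe_pattern(pattern: str) -> dict:
    """Split a six-field cron pattern into named fields."""
    parts = pattern.split()
    if len(parts) != len(QUARTZ_FIELDS):
        raise ValueError(f"expected {len(QUARTZ_FIELDS)} fields, got {len(parts)}")
    return dict(zip(QUARTZ_FIELDS, parts))


default_schedule = describe_pattern("0 0 0 ? * 1,2,3,4,5,6,7")
# Illustrative variation: same days, but at 02:00 instead of midnight
two_am_schedule = describe_pattern("0 0 2 ? * 1,2,3,4,5,6,7")

print(default_schedule)
print(two_am_schedule["hours"])
```

Read this way, the default pattern fires at second 0, minute 0, hour 0 on every listed day of the week, which matches the "every day at midnight" default described above.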

Issue reporting guide

This guide explains which information is required for issues concerning Data Quality topics, and how to retrieve it, so that reported issues meet a consistent quality level.

Please provide as much of the information described below as possible so that issues can be resolved quickly.

Configuration setup

Provide all DQ-relevant configuration and rule files contained in the server configuration subfolder 'dataquality'. This can simply be done by zipping the whole folder and attaching it to the issue.

Screenshots of specific rule configurations are also helpful, especially when they are custom ones. The same is true for the configured execution triggers.

It may also be important to provide information about the configured workflows if executions are called from there.

Information about the execution context

Data Quality executions involve the following main pieces of information:

The rule configurations containing information about the data provisioning

The actual data quality rules that are used in the rule configuration

The execution may contain more than one rule configuration, mostly if the execution is done for specific channels containing several configurations

If the rule configurations are executed as jobs, e.g. by manual executions, see the job log details in the processes overview for the rule configurations used.

For triggered executions this information may also be retrieved by looking at the trigger configuration

Executions may also be triggered from calls inside workflows

Can the problem be pinned down to a specific rule configuration or rule? If so, please specify them. Is the rule a custom rule and, if so, what is it supposed to do?

Logging data

General logging data as always (error.log, console.log)

DQ-specific logging data as described in the 'Logging' section above. This may require changing the logging level to DEBUG as described there and then executing the rules again in order to see the additional logging information.
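When attaching DQ_EXECUTION output to an issue, it can help to extract the port values from the long log lines. The sketch below assumes each port is written as `name: 'value' (field: Some.Field)`, as in the example output of the 'Logging' section. The function name is illustrative, and the sample line is abridged from that example.

```python
import re

# Assumed shape of one port entry: name: 'value' (field: Some.Field)
PORT_PATTERN = re.compile(r"(\w+): '(.*?)' \(field: ([^)]+)\)")


def parse_dq_ports(line: str) -> dict:
    """Map each port name in a DQ_EXECUTION line to its (value, field) pair."""
    return {name: (value, field) for name, value, field in PORT_PATTERN.findall(line)}


# Abridged version of the DQ OUT line from the example output
sample = (
    "13:01:25,317 DEBUG [exe-IDQ-SDK-Check_PresetValues-1] [DQ_EXECUTION] "
    "DQ OUT line: 0 (rule: preset violiation) "
    "status_code: '0' (field: QualityStatusEntry.Status) "
    "status_message: 'Value 'green2' (English, DEFAULT) of attribute 'Color' "
    "does not conform to the preset values' (field: QualityStatusEntry.Message) "
    "object_id: '33762@1' (field: Object_ID)"
)

ports = parse_dq_ports(sample)
for name, (value, field) in ports.items():
    print(f"{name} [{field}] = {value}")
```

The non-greedy match up to the next `' (field: ` is what keeps quoted words inside a status message from ending the value early.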

Performance

Provide information about the settings as described in the Performance tuning section

Is the execution started manually or triggered, e.g. after import, every night, or for each item change?

How many items are affected? Is the execution for a single item, or for many items of a catalog or other data set?

Are there many executions in parallel?

Crashes

In case of crashes, get the heap dump and thread dump of the P360 Server.

If the crash happens on the DQ engine itself, also provide heap dumps of the underlying engine shared libraries.

For Windows this can be done like this:

Ensure that the following registry key is present:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\Windows Error Reporting\LocalDumps

Try to reproduce the problem. Dumps are then available in

%LOCALAPPDATA%\CrashDumps

or, in case the service is run with the system account, in

C:\Windows\System32\config\systemprofile\AppData\Local\CrashDumps
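The two dump locations above can be collected with a small helper when gathering files for an issue. This is a minimal sketch: the function name and the sample LOCALAPPDATA value are illustrative, and `ntpath` is used so that Windows-style paths are joined correctly even when the script is run on another platform.

```python
import ntpath

# Location used when the service runs with the system account (from the text above)
SYSTEM_PROFILE_DUMPS = r"C:\Windows\System32\config\systemprofile\AppData\Local\CrashDumps"


def crash_dump_dirs(env: dict) -> list:
    """Return the candidate crash dump folders for the given environment."""
    dirs = []
    local_app_data = env.get("LOCALAPPDATA")
    if local_app_data:
        dirs.append(ntpath.join(local_app_data, "CrashDumps"))
    dirs.append(SYSTEM_PROFILE_DUMPS)
    return dirs


# Hypothetical environment of a service user named 'p360'
candidates = crash_dump_dirs({"LOCALAPPDATA": r"C:\Users\p360\AppData\Local"})
for path in candidates:
    print(path)
```

On the server itself, passing `dict(os.environ)` (after `import os`) instead of the sample dictionary would resolve the folder for the current user.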