Trigger Backpressure

Introduction

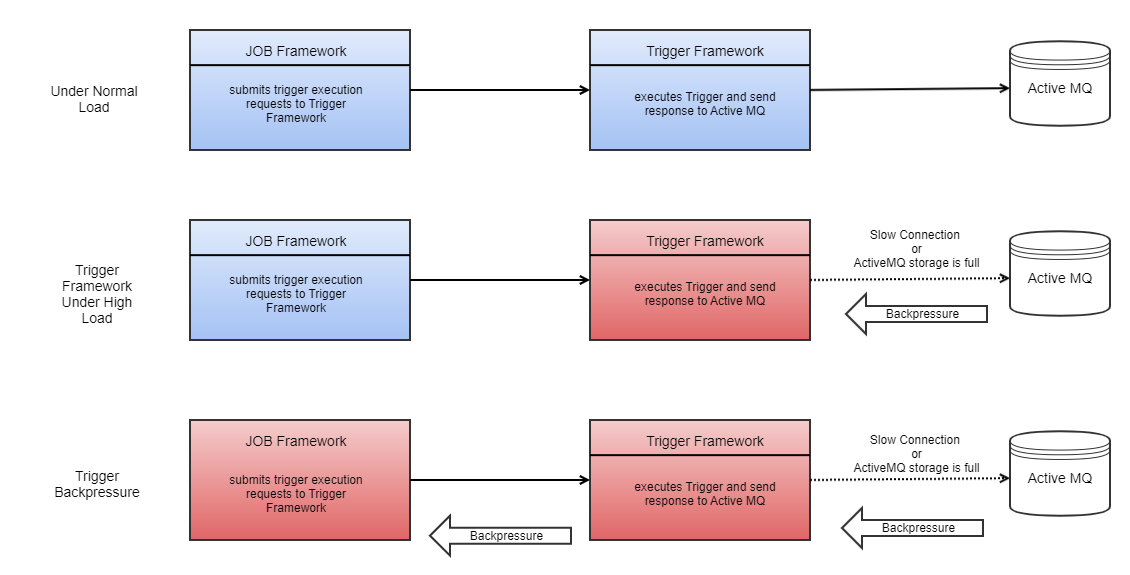

The Trigger Framework enters a high-load state when the rate at which it receives trigger requests exceeds the rate at which it can execute them. When this happens, the Trigger Framework can propagate its load to the Job Framework (the producer of trigger execution requests) and make it slow down the rate at which it submits new requests. This propagation of high load from the Trigger Framework to its caller, the Job Framework, is known as Trigger Backpressure.

When does a backpressure scenario happen?

The Trigger Framework can execute a number of requests in parallel (by default, three times the number of available CPU cores) and can queue further requests (10000 by default) to be executed as soon as any of its execution threads becomes available. When the Trigger Framework is working at full capacity and still cannot keep up with all the requests the Job Framework submits to it, Trigger Backpressure kicks in.
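The thread-pool-plus-queue behavior described above resembles a bounded java.util.concurrent.ThreadPoolExecutor that rejects work once both its threads and its queue are full. The following is a minimal sketch under that assumption. BoundedTriggerExecutor, submit and isHighLoad are hypothetical names, not the Product 360 API; only the sizing defaults (three times the CPU cores, 10000 queued requests) come from this page.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical sketch of a saturating trigger executor; names are illustrative
// and are not the Product 360 API.
class BoundedTriggerExecutor {
    // Documented default queue size for pending trigger requests.
    static final int DEFAULT_QUEUE_CAPACITY = 10_000;

    private final ThreadPoolExecutor executor;
    private final AtomicBoolean highLoad = new AtomicBoolean(false);

    BoundedTriggerExecutor(int threads, int queueCapacity) {
        // Fixed-size pool with a bounded queue. AbortPolicy makes execute()
        // throw once every thread is busy and the queue is full.
        this.executor = new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy());
    }

    // Documented default: three times the number of available CPU cores.
    static BoundedTriggerExecutor withDefaults() {
        int threads = 3 * Runtime.getRuntime().availableProcessors();
        return new BoundedTriggerExecutor(threads, DEFAULT_QUEUE_CAPACITY);
    }

    /** Returns true if the request was accepted, false if it was rejected. */
    boolean submit(Runnable trigger) {
        try {
            executor.execute(trigger);
            return true;
        } catch (RejectedExecutionException e) {
            // Threads and queue are both saturated: mark the service as under high load.
            highLoad.set(true);
            return false;
        }
    }

    boolean isHighLoad() {
        return highLoad.get();
    }

    void shutdown() {
        executor.shutdown();
    }
}
```

Rejecting instead of blocking keeps the caller from hanging on a full queue, which is what allows the overload to be detected and reported back to the producer. Clearing the flag again is out of scope for this sketch.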

As soon as Trigger Backpressure kicks in, the Trigger Framework is marked as being under high load.

Let's look at a high-level diagram depicting this behavior.

What happens during backpressure?

As seen in the above diagram, high load in the Trigger Framework is propagated back to the Job Framework. This means the Job Framework is now aware that it has to slow down request submission to the Trigger Framework. In addition, the Job Framework helps the Trigger Framework by executing some of the triggers itself (triggers whose initiator module is other than UI or OTHER), further reducing the load on the Trigger Framework. No other service of Product 360 is affected or slowed down by this. Once the Trigger Framework returns from the high-load state to the normal state, the Job Framework is notified and resumes its normal operations.
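One way to picture the notification path is a listener that the Trigger Framework calls when it enters and leaves high load, with the Job Framework holding back new submissions in between. The sketch assumes exactly that. TriggerLoadListener, TriggerLoadNotifier and JobSubmissionThrottle are hypothetical names, pausing outright is only the simplest possible form of "slowing down", and executing triggers on the job thread itself is not modeled here.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical callback interface; not the actual Product 360 API.
interface TriggerLoadListener {
    void onHighLoad();
    void onNormalLoad();
}

// Hypothetical Trigger Framework side: broadcasts load-state changes to listeners.
class TriggerLoadNotifier {
    private final List<TriggerLoadListener> listeners = new CopyOnWriteArrayList<>();

    void addListener(TriggerLoadListener listener) {
        listeners.add(listener);
    }

    void enterHighLoad() {
        listeners.forEach(TriggerLoadListener::onHighLoad);
    }

    void leaveHighLoad() {
        listeners.forEach(TriggerLoadListener::onNormalLoad);
    }
}

// Hypothetical Job Framework side: holds back new submissions during high load.
class JobSubmissionThrottle implements TriggerLoadListener {
    private boolean paused = false;

    @Override
    public synchronized void onHighLoad() {
        paused = true;
    }

    @Override
    public synchronized void onNormalLoad() {
        paused = false;
        notifyAll(); // wake every job thread waiting in awaitPermission()
    }

    /** Called by a job thread before it submits its next trigger request. */
    synchronized void awaitPermission() throws InterruptedException {
        while (paused) {
            wait();
        }
    }

    synchronized boolean isPaused() {
        return paused;
    }
}
```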

Let's understand this with a scenario :

Assume we start an import of 10K items and have an Entity Created trigger configured that fires after each item is imported. This results in 10K trigger fire requests.

After the import has started, the connection between the PIM server and ActiveMQ is lost.

After some time, the Trigger Framework comes under high load because it has messages to write to ActiveMQ, which is down.

However, the Job Framework is still working at full capacity and keeps injecting more requests into the Trigger Framework.

After some time, thanks to the Trigger Backpressure functionality, the high-load notification reaches the Job Framework.

The Job Framework slows down, and eventually the import operation stalls.

"Trigger service under high load" messages appear in the console log, signifying that backpressure has kicked in.

All other PIM functionality continues to work normally.

Once the lost connection is restored, the Trigger Framework comes out of high load.

The Job Framework is then notified and resumes the import operation.

Finally, the import of all 10K items completes.

The Trigger Framework automatically comes out of the high-load state 5 minutes after it last entered that state.
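The 5-minute rule above can be modeled as a flag that expires a fixed time after it was last set. This sketch assumes that every new overload event re-enters the high-load state and therefore restarts the window; HighLoadState is a hypothetical name, and the clock is injected so the behavior can be checked without actually waiting five minutes.

```java
import java.time.Duration;
import java.util.function.LongSupplier;

// Hypothetical model of the high-load flag with a 5-minute expiry.
class HighLoadState {
    static final Duration DEFAULT_EXPIRY = Duration.ofMinutes(5);

    private final LongSupplier clockMillis; // injectable clock, e.g. for tests
    private final long expiryMillis;
    private long lastEnteredMillis;
    private boolean everEntered = false;

    HighLoadState(LongSupplier clockMillis, Duration expiry) {
        this.clockMillis = clockMillis;
        this.expiryMillis = expiry.toMillis();
    }

    HighLoadState() {
        this(System::currentTimeMillis, DEFAULT_EXPIRY);
    }

    /** Assumed to be called on every overload event; each call restarts the window. */
    synchronized void enter() {
        lastEnteredMillis = clockMillis.getAsLong();
        everEntered = true;
    }

    /** High load lasts until the expiry has passed since the most recent enter(). */
    synchronized boolean isHighLoad() {
        return everEntered && clockMillis.getAsLong() - lastEnteredMillis < expiryMillis;
    }
}
```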

Log Messages during Trigger Backpressure

Different log messages are logged depending on the type of trigger request.

Request from the Job Framework: If Trigger Backpressure has kicked in and a new trigger request arrives with an initiator module other than UI or OTHER (such as IMPORT, MERGE, or SERVICEAPI), the following message is logged:

Trigger service underhigh load. Job thread will execute the trigger.

Request from the UI or OTHER initiator module: When the request comes from the UI or OTHER initiator module and the Trigger Framework is under high load, the following message is logged:

Trigger service underhigh load. Discarding the trigger, watchdog will execute it.
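The routing rules behind these two log messages can be condensed into a single decision function. The sketch assumes the caller already knows whether the Trigger Service is under high load. InitiatorModule lists only the modules named on this page, TriggerRouting and TriggerRouter are hypothetical, the log strings are copied verbatim from above, and logging at INFO level through java.util.logging is an assumption.

```java
import java.util.EnumSet;
import java.util.Set;
import java.util.logging.Logger;

// Only the initiator modules named in this document; the real set is larger.
enum InitiatorModule { UI, OTHER, IMPORT, MERGE, SERVICEAPI }

// Hypothetical outcomes of routing one trigger request.
enum TriggerRouting { SUBMIT_TO_TRIGGER_SERVICE, RUN_ON_JOB_THREAD, DISCARD_FOR_WATCHDOG }

class TriggerRouter {
    private static final Logger LOG = Logger.getLogger(TriggerRouter.class.getName());

    // UI and OTHER requests do not run on a job thread that could execute the trigger.
    private static final Set<InitiatorModule> NON_JOB_INITIATORS =
            EnumSet.of(InitiatorModule.UI, InitiatorModule.OTHER);

    static TriggerRouting route(InitiatorModule initiator, boolean triggerServiceHighLoad) {
        if (!triggerServiceHighLoad) {
            // Normal operation: hand the request to the Trigger Framework.
            return TriggerRouting.SUBMIT_TO_TRIGGER_SERVICE;
        }
        if (NON_JOB_INITIATORS.contains(initiator)) {
            // Log level is an assumption; the message text is as documented.
            LOG.info("Trigger service underhigh load. Discarding the trigger, watchdog will execute it.");
            return TriggerRouting.DISCARD_FOR_WATCHDOG;
        }
        LOG.info("Trigger service underhigh load. Job thread will execute the trigger.");
        return TriggerRouting.RUN_ON_JOB_THREAD;
    }
}
```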

Trigger Watchdog

The Trigger Watchdog is a thread that runs continuously throughout the server's lifecycle with the sole purpose of finding discarded or unexecuted trigger requests in the system and executing them.

The Trigger Watchdog executes these unfired, abandoned triggers only when the Trigger Service is not under high load.

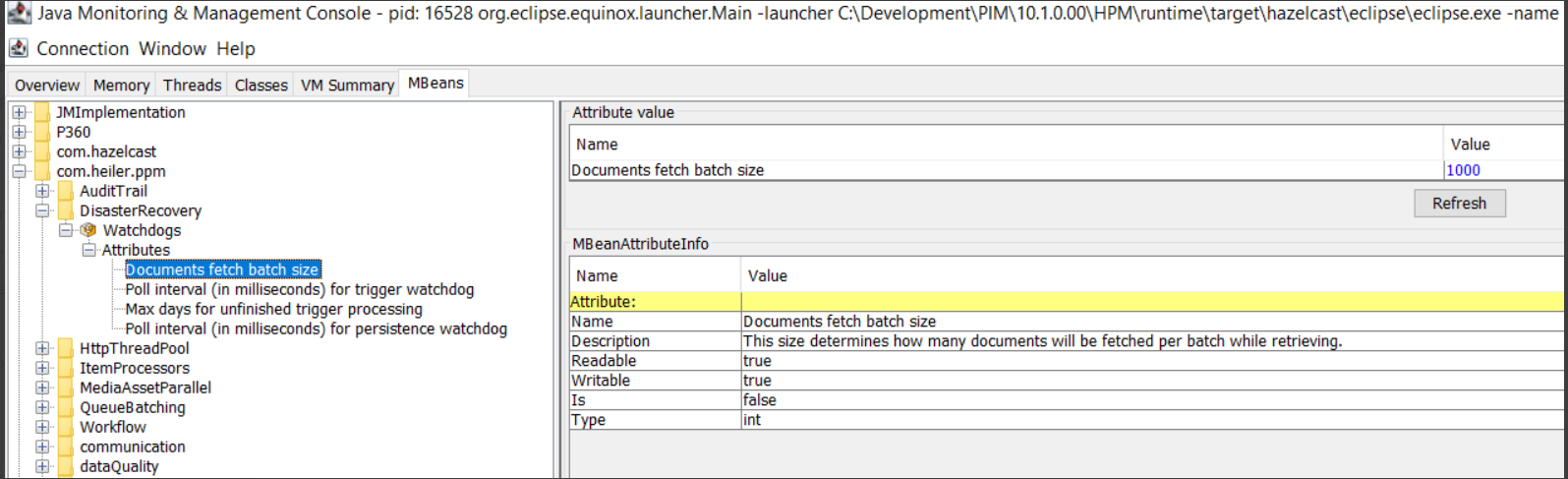

Documents fetch batch size: The upper limit on the number of unfired triggers the watchdog fetches at a time for processing. Default: 1000.

Poll interval (in milliseconds) for trigger watchdog: The amount of time the trigger watchdog waits before looking for unfired triggers again when the current scan finds none. Default: 300000 ms (5 minutes).

Max days for unfinished trigger processing: The maximum age, in days, of documents the watchdog fetches for trigger processing. Older documents are not fetched, even if their triggers were never executed. Default: 7 days.
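The watchdog behavior described above (do nothing under high load, fetch at most one batch of recent unfired triggers, wait for the poll interval only after an empty scan) can be sketched as a small loop. UnfiredTrigger, UnfiredTriggerStore and TriggerWatchdogSketch are hypothetical names, and the storage query, the immediate rescan after a non-empty batch, and backing off for one poll interval while under high load are assumptions; only the three defaults are documented. The record requires Java 16 or later.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;
import java.util.function.BooleanSupplier;
import java.util.function.Consumer;

// Hypothetical record of a trigger request that was never fired.
record UnfiredTrigger(String id, Instant createdAt) {}

// Hypothetical data-access interface; the real storage API is not documented here.
interface UnfiredTriggerStore {
    /** Returns at most {@code limit} unfired triggers created at or after {@code notBefore}. */
    List<UnfiredTrigger> fetchUnfired(Instant notBefore, int limit);
}

class TriggerWatchdogSketch {
    static final int DEFAULT_FETCH_BATCH_SIZE = 1000;
    static final long DEFAULT_POLL_INTERVAL_MILLIS = 300_000L;
    static final int DEFAULT_MAX_DAYS = 7;

    private final UnfiredTriggerStore store;
    private final BooleanSupplier triggerServiceHighLoad;
    private final Consumer<UnfiredTrigger> fire;
    private final int fetchBatchSize;
    private final long pollIntervalMillis;
    private final int maxDays;

    TriggerWatchdogSketch(UnfiredTriggerStore store, BooleanSupplier triggerServiceHighLoad,
                          Consumer<UnfiredTrigger> fire, int fetchBatchSize,
                          long pollIntervalMillis, int maxDays) {
        this.store = store;
        this.triggerServiceHighLoad = triggerServiceHighLoad;
        this.fire = fire;
        this.fetchBatchSize = fetchBatchSize;
        this.pollIntervalMillis = pollIntervalMillis;
        this.maxDays = maxDays;
    }

    /** Performs one scan and returns how many milliseconds to wait before the next one. */
    long runOnce(Instant now) {
        if (triggerServiceHighLoad.getAsBoolean()) {
            // Do not add load while the Trigger Service is under high load.
            // Backing off for one poll interval is an assumption of this sketch.
            return pollIntervalMillis;
        }
        Instant notBefore = now.minus(Duration.ofDays(maxDays));
        List<UnfiredTrigger> batch = store.fetchUnfired(notBefore, fetchBatchSize);
        batch.forEach(fire);
        // Only an empty scan waits for the poll interval; otherwise rescan at once (assumption).
        return batch.isEmpty() ? pollIntervalMillis : 0L;
    }

    /** Runs until the thread is interrupted, like a long-lived watchdog thread. */
    void runForever() throws InterruptedException {
        while (!Thread.currentThread().isInterrupted()) {
            long waitMillis = runOnce(Instant.now());
            if (waitMillis > 0) {
                Thread.sleep(waitMillis);
            }
        }
    }
}
```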

The values of all three parameters above can be changed either in the plugin_customization.ini file or at runtime using management beans.

Properties in the plugin_customization.ini file (shown commented out; remove the leading # from a property line to make it take effect):

```ini
# The value of this property determines how many milliseconds the watchdog should wait
# before its next execution if it doesn't find any records.
# com.heiler.ppm.persistence.dr.server/disasterRecovery.watchdog.trigger.pollIntervalInMills=300000

# This size determines how many documents the watchdog should fetch per batch while retrieving.
# com.heiler.ppm.persistence.dr.server/disasterRecovery.watchdog.fetchBatchSize=1000

# The timestamp decides the maximum number of days beyond which older records won't be picked up.
# com.heiler.ppm.persistence.dr.server/disasterRecovery.watchdog.trigger.fromTimestampInDays=7
```

Updating values at runtime through management beans using JConsole (does not require a server restart)

Trigger Framework Metrics

The Trigger Framework captures several metrics while firing triggers or when it enters a high-load situation. These metrics are listed in the table below:

| Metric name | Description |
|---|---|
| triggerService.rejectedAsExecutorServiceFull | Counter for the number of times the Trigger Framework reaches a high-load situation. |
| triggerService.discardedAsNonJobThread | Counter for the number of trigger execution requests discarded because the Trigger Service is under high load and the request was initiated from the UI or OTHER initiator module. |
| triggerService.pickedByTriggerWatchdog | Counter for the total number of triggers picked up for firing by the Trigger Watchdog. |
| triggerService.attemptedToFire | Counter for the number of trigger firing attempts made by the Trigger Service. |
| triggerService.triggerFireFailed | Counter for the number of trigger firing attempts that failed. |
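If you want to mirror these counters in your own monitoring code or tests, a sketch using java.util.concurrent.atomic.LongAdder (a counter designed for frequent concurrent increments) could look like this. TriggerServiceMetrics and the derived fireFailureRatio are hypothetical; only the metric names come from the table, and how Product 360 actually records or exports them is not described here.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical in-process holder for the documented counters.
class TriggerServiceMetrics {
    final LongAdder rejectedAsExecutorServiceFull = new LongAdder();
    final LongAdder discardedAsNonJobThread = new LongAdder();
    final LongAdder pickedByTriggerWatchdog = new LongAdder();
    final LongAdder attemptedToFire = new LongAdder();
    final LongAdder triggerFireFailed = new LongAdder();

    /** Current counter values keyed by the metric names from the table. */
    Map<String, Long> snapshot() {
        Map<String, Long> values = new TreeMap<>();
        values.put("triggerService.rejectedAsExecutorServiceFull", rejectedAsExecutorServiceFull.sum());
        values.put("triggerService.discardedAsNonJobThread", discardedAsNonJobThread.sum());
        values.put("triggerService.pickedByTriggerWatchdog", pickedByTriggerWatchdog.sum());
        values.put("triggerService.attemptedToFire", attemptedToFire.sum());
        values.put("triggerService.triggerFireFailed", triggerFireFailed.sum());
        return values;
    }

    /** Hypothetical derived figure: share of firing attempts that failed (0.0 if none). */
    double fireFailureRatio() {
        long attempts = attemptedToFire.sum();
        return attempts == 0 ? 0.0 : (double) triggerFireFailed.sum() / attempts;
    }
}
```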