Compatibility

This article lists possible compatibility issues that may arise when you upgrade to a new PIM version.

Refactoring of Unit entity in 10.1

With version 10.1, the Unit entity has been refactored, and the "System units" unit system no longer exists. All unit-system-specific fields have been renamed to "Alternative ...", e.g. "Alternative Code", "Alternative Symbol", etc.

The "Units" export data provider is now based on the Unit root entity, and the "Unit system" parameter used to filter the list of exported units is no longer mandatory.

Most of the changes are handled by the export template conversion, which runs automatically when an export template is loaded. However, some export templates may need to be reviewed or adjusted.

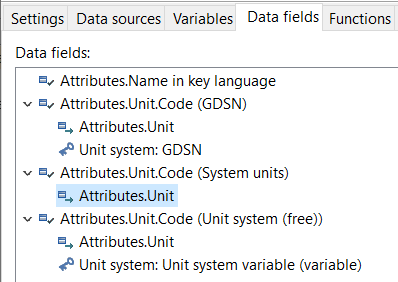

Data fields qualified with a unit system

This is the most important and most common use case. Usually, you want to output the code of a unit that is assigned to a field. Previously, you had to qualify the unit code field with a unit system because every unit code was unit-system-specific. If you used the "System units" unit system, the field is changed to the "Code" field during conversion. If you used another unit system or a variable, the field is left unchanged.

In most cases, all export data fields used in a template can be converted automatically to the new version without any issues.

Note: the names of the data fields are not changed, which might be confusing at first. You can easily rename such fields to avoid misunderstandings.
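The conversion rule for unit-system-qualified fields can be sketched as follows. This is a simplified, hypothetical model, not the PIM conversion code: the DataField record, the unit system name "ETIM", and the identifiers UnitSystemSpecific.Code and Unit.Code (borrowed from the conversion example in the next section) are illustrative assumptions.

```java
public class UnitFieldConversion {

    static final String SYSTEM_UNITS = "System units";

    /** Hypothetical export data field: display name, field identifier, optional unit system qualification. */
    record DataField(String name, String fieldIdentifier, String unitSystem) {}

    /**
     * "System units" qualification -> plain Unit.Code field without qualification;
     * any other unit system or a variable -> left unchanged. The display name is never changed.
     */
    static DataField convert(DataField field) {
        if (SYSTEM_UNITS.equals(field.unitSystem())) {
            return new DataField(field.name(), "Unit.Code", null);
        }
        return field;
    }

    public static void main(String[] args) {
        DataField viaSystemUnits = new DataField("Unit code", "UnitSystemSpecific.Code", SYSTEM_UNITS);
        DataField viaOtherSystem = new DataField("Unit code (ETIM)", "UnitSystemSpecific.Code", "ETIM");
        System.out.println(convert(viaSystemUnits)); // now refers to Unit.Code, name still "Unit code"
        System.out.println(convert(viaOtherSystem)); // unchanged
    }
}
```

Because the display name is kept, a field named "Unit code" can end up pointing at a field that is no longer unit-system-specific, which is the situation the note above describes.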

"All units in a unit system" export data provider

The "Unit system" parameter of this export data provider is no longer mandatory. If you don't configure a value for it, all existing units are exported.

This data provider now uses the Unit root entity; previously it used UnitSystemSpecific. All export data fields used with it are converted into fields of the Unit entity without changing their qualification.

Example:

| Data field name | Field identifier and qualification | Converted field and qualification |
|---|---|---|
| Units.Code | UnitSystemSpecific.Code | Unit.Code |
| Units.Description (German) | UnitSystemSpecificLang.Description, UnitSystemSpecificLangType.LK.Language = "7" | UnitLang.Description, UnitLangType.LK.Language = "7" |

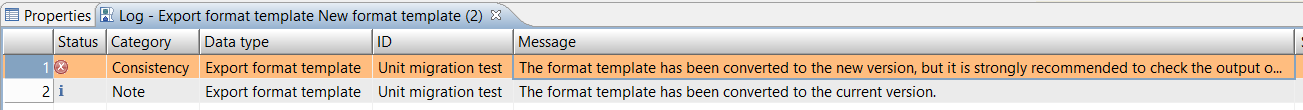

It is strongly recommended to check all export templates that use the "All units in a unit system" export data provider. If you want to export unit-system-specific codes or names, you have to adjust the data fields accordingly. To draw attention to this, the log shows an error when you load an export template that should be checked.

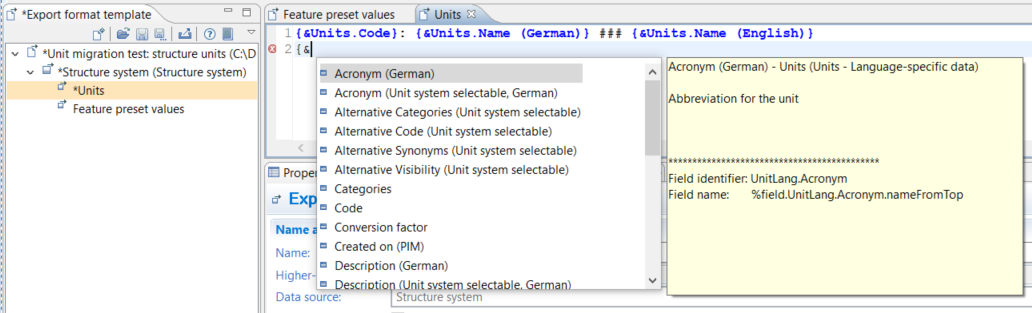
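For the "All units in a unit system" data provider, the conversion swaps only the entity part of each identifier and keeps the qualification value. A minimal sketch of that mapping, assuming exactly the entity renames shown in the conversion table (UnitSystemSpecific to Unit, UnitSystemSpecificLang to UnitLang, UnitSystemSpecificLangType to UnitLangType); the class, record, and method names are hypothetical. Such a mechanical rename cannot know whether you actually wanted unit-system-specific values, which is why these templates should be reviewed.

```java
import java.util.Map;

public class AllUnitsFieldMigration {

    // Entity renames taken from the conversion table (old entity -> new entity).
    static final Map<String, String> ENTITY_RENAMES = Map.of(
            "UnitSystemSpecific", "Unit",
            "UnitSystemSpecificLang", "UnitLang",
            "UnitSystemSpecificLangType", "UnitLangType");

    /** Hypothetical data field: identifier plus one optional qualification (qualifier field and value). */
    record DataField(String identifier, String qualifierField, String qualifierValue) {}

    /** Replaces the entity part (text before the first '.') and keeps everything after it. */
    static String migrate(String identifier) {
        int dot = identifier.indexOf('.');
        String entity = dot < 0 ? identifier : identifier.substring(0, dot);
        String rest = dot < 0 ? "" : identifier.substring(dot);
        return ENTITY_RENAMES.getOrDefault(entity, entity) + rest;
    }

    /** Migrates the field and its qualifier field; the qualification value (e.g. language "7") is untouched. */
    static DataField migrate(DataField field) {
        String qualifier = field.qualifierField() == null ? null : migrate(field.qualifierField());
        return new DataField(migrate(field.identifier()), qualifier, field.qualifierValue());
    }

    public static void main(String[] args) {
        DataField germanDescription = new DataField(
                "UnitSystemSpecificLang.Description", "UnitSystemSpecificLangType.LK.Language", "7");
        System.out.println(migrate(new DataField("UnitSystemSpecific.Code", null, null)));
        System.out.println(migrate(germanDescription));
    }
}
```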

"Structure system" export data provider

The sub-data type "Units" of the "Structure system" export data provider now also uses the Unit root entity instead of UnitSystemSpecific. All of its export data fields are migrated to fields of the Unit entity as well.

Use cases not covered

If your export template contains a variable of data type "Unit system" with "System units" as its value, the variable is still used in data field qualifications or as a data provider parameter, but it no longer has a valid value.

Parallel Export in 10.0

Since version 10.0, data can be exported in parallel to make full use of your system's resources and significantly speed up the export process.

When an old export template is opened, it is migrated automatically to the new version. However, parallel processing is disabled for every module. This means that all existing export jobs run in non-parallel mode and behave as before. If you want an export to run in parallel mode, you have to adjust the export template explicitly and re-schedule the corresponding export job.

The behavior of some export functions has changed. All templates that use these functions must be checked and adjusted if necessary.

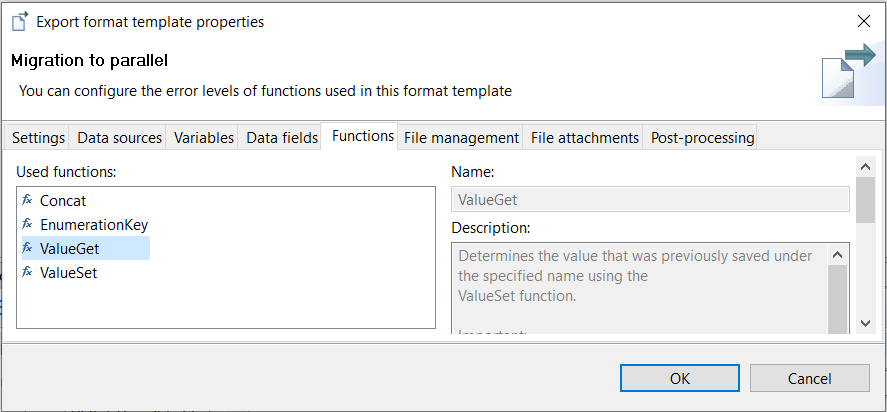

Finding out which functions are used

To find out whether a certain function is used in your export template, open the export template properties dialog (right-click the root node in the "Export format template" view) and switch to the "Functions" tab. It lists all functions used in the template.

The affected functions are:

ValueGet/ValueSet

These functions use the global export function context, which ensures that all packages processed in parallel can access the stored data.

However, you have to make sure that the names under which you store data are unique, so that values are not overwritten by other packages.

If you only want to collect data of sub-modules within one main module, you should use the ValueSetLocal/ValueGetLocal export functions.

You can find a detailed description of these functions in the chapter Export functions.
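The following sketch models the global export function context as a thread-safe map shared by all packages, to show why the names must be unique. It is a hypothetical Java simulation, not the PIM implementation; the names "lastItem" and "lastItem.package<n>" and the thread pool size are made up for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class GlobalContextSketch {

    /**
     * Runs one task per package; each stores a value in a map shared by all packages
     * (standing in for the global export function context). With uniqueNames=false every
     * package uses the same name, so later writes overwrite earlier ones.
     */
    static Map<String, String> runPackages(int packages, boolean uniqueNames) {
        Map<String, String> globalContext = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(4);
        for (int p = 0; p < packages; p++) {
            final int pkg = p;
            pool.submit(() -> {
                String name = uniqueNames ? "lastItem.package" + pkg : "lastItem";
                globalContext.put(name, "item from package " + pkg); // plays the role of ValueSet
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return globalContext;
    }

    public static void main(String[] args) {
        System.out.println(runPackages(5, false).size()); // 1: the packages overwrote each other
        System.out.println(runPackages(5, true).size());  // 5: one entry per package
    }
}
```

Which value survives under the shared name depends on thread scheduling, which is exactly why the names have to be unique.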

NumberSet/NumberGet/NumberIncrement

These functions also use the global export function context, which ensures that incrementing yields unique numbers.

Due to parallel processing, NumberGet may return a different number than the one you have just set with NumberSet within a module, because another export package running in parallel may have incremented it in the meantime. In other words, counting with NumberIncrement works in parallel mode, but you cannot reliably read the current number within a module that is processed in parallel.

What does work is to set a number in a first module, increment that number in a second module in parallel mode, and read the number in a third module.
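The pattern that does work (set in one module, increment in parallel in a second, read in a third) can be simulated with an AtomicLong standing in for the shared number. This is a hypothetical model of the behavior described above, not PIM code; the three modules are represented by sequential steps in one method, and the package and increment counts are arbitrary.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

public class SharedNumberSketch {

    /** Value read at the end plus every number handed out during the parallel step. */
    record Result(long finalValue, Set<Long> issuedNumbers) {}

    static Result run(long start, int packages, int incrementsPerPackage) {
        AtomicLong number = new AtomicLong();

        // Module 1 (not parallel): corresponds to NumberSet.
        number.set(start);

        // Module 2 (parallel): each package increments several times. Reading number.get()
        // here would return whatever other packages have incremented it to in the meantime.
        Set<Long> issued = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(4);
        for (int p = 0; p < packages; p++) {
            pool.submit(() -> {
                for (int i = 0; i < incrementsPerPackage; i++) {
                    issued.add(number.incrementAndGet()); // atomic: no two packages get the same number
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }

        // Module 3 (after module 2 has finished): corresponds to NumberGet, now well-defined.
        return new Result(number.get(), issued);
    }

    public static void main(String[] args) {
        Result r = run(100, 10, 50);
        System.out.println(r.finalValue());           // 600
        System.out.println(r.issuedNumbers().size()); // 500
    }
}
```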

LoopCounter/DatasetCounter

In parallel mode, if data rows are removed by data field validation, the numbering may contain gaps. If you need consecutive numbering without gaps, you cannot use parallel mode for that export.

This is because each package is assigned its own range of dataset counter values.

Example:

You export 20 items in two packages of ten items each. The first package uses the numbers 0 to 9 (or 1 to 10), the second package 10 to 19 (or 11 to 20). If an item of the first package is removed, there is a gap: the last number reserved for the first package is not used.
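The gap can be reproduced with a small simulation of per-package counter ranges. It is a hypothetical model using 0-based counters and the 20-item, two-package example above; the assumption that non-parallel mode numbers only the remaining rows consecutively follows from the text, not from PIM source code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class PackageCounterSketch {

    /**
     * Parallel mode: package p reserves the range starting at p * packageSize and numbers its
     * surviving rows from the start of that range. Items whose global index is in 'removed'
     * are dropped by validation, so the unused numbers at the end of a range become gaps.
     */
    static List<Integer> number(int totalItems, int packageSize, Set<Integer> removed) {
        List<Integer> counters = new ArrayList<>();
        for (int start = 0; start < totalItems; start += packageSize) {
            int next = start; // first counter value reserved for this package
            int end = Math.min(start + packageSize, totalItems);
            for (int item = start; item < end; item++) {
                if (!removed.contains(item)) {
                    counters.add(next++);
                }
            }
        }
        return counters;
    }

    /** Non-parallel mode: one counter for all rows, so removed rows leave no gap. */
    static List<Integer> numberSequentially(int totalItems, Set<Integer> removed) {
        List<Integer> counters = new ArrayList<>();
        int next = 0;
        for (int item = 0; item < totalItems; item++) {
            if (!removed.contains(item)) {
                counters.add(next++);
            }
        }
        return counters;
    }

    public static void main(String[] args) {
        // 20 items, two packages of ten, item 3 of the first package removed by validation.
        System.out.println(number(20, 10, Set.of(3)));          // 9 is never assigned
        System.out.println(numberSequentially(20, Set.of(3)));  // 0 to 18 without gaps
    }
}
```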

API Changes in PIM 8

With PIM 8, it is much easier to export data of new repository sub-entities or to implement a new data provider. This required some changes to the API.

Summary

The most important changes are listed here:

The ExportComponent class provides access to all factories you need:

ExportComponent.getDataProviderFactory()

ExportComponent.getDataTypeFactory()

ExportComponent.getFunctionRegistry()

The base implementation for all data providers, ReportListModelQueryDataProviderBaseImpl, has been replaced by the new DataProviderByReportResultBaseImpl class

The new extension point com.heiler.ppm.texttemplate.core.exportListModelProviders makes it possible to map an export sub-data type to all data providers that support a certain repository root entity

Responsibilities are now separated: the data providers search for the objects to be exported, while the data itself is loaded by list model providers that the data providers call.

The advantages are:

You can now either

implement a new data provider to provide a new algorithm for searching the objects to be exported, or

create a new export sub-data type to export data of repository sub-entities in a special way

Your new data provider will automatically provide all export sub-data types contributed for the root entity of your data provider

Your new export sub-data type will automatically be provided by all existing data providers of the corresponding root entity
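The division of labor between data providers and list model providers can be pictured with a few plain Java interfaces. This is a deliberately simplified, hypothetical model: DataProvider, SubDataTypeLoader, contribute(), the "activeUsers" provider, and the "User names" sub-data type are invented for illustration and are not the PIM 8 API. It shows the two advantages listed above: a new data provider only implements the search and inherits every sub-data type registered for its root entity.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ExportArchitectureSketch {

    /** Searches for the objects to export (hypothetical simplification of a data provider). */
    interface DataProvider {
        String rootEntity();
        List<Long> search();
    }

    /** Loads the rows of one sub-data type for already-found objects (stands in for a list model provider). */
    interface SubDataTypeLoader {
        List<String> load(List<Long> objectIds);
    }

    /** Registry keyed by root entity, playing the role of the exportListModelProviders extension point. */
    static final Map<String, Map<String, SubDataTypeLoader>> SUB_DATA_TYPES = new LinkedHashMap<>();

    static void contribute(String rootEntity, String subDataType, SubDataTypeLoader loader) {
        SUB_DATA_TYPES.computeIfAbsent(rootEntity, k -> new LinkedHashMap<>()).put(subDataType, loader);
    }

    /** Every data provider automatically offers all sub-data types contributed for its root entity. */
    static List<String> subDataTypesOf(DataProvider provider) {
        return new ArrayList<>(SUB_DATA_TYPES.getOrDefault(provider.rootEntity(), Map.of()).keySet());
    }

    static List<String> export(DataProvider provider, String subDataType) {
        List<Long> ids = provider.search();                                           // 1. data provider searches
        SubDataTypeLoader loader = SUB_DATA_TYPES.get(provider.rootEntity()).get(subDataType);
        return loader.load(ids);                                                      // 2. list model provider loads
    }

    public static void main(String[] args) {
        contribute("User", "Items created by user", ids -> ids.stream().map(id -> "items of user " + id).toList());
        contribute("User", "User names", ids -> ids.stream().map(id -> "name of user " + id).toList());

        // A new data provider for the User root entity only implements the search ...
        DataProvider activeUsers = new DataProvider() {
            public String rootEntity() { return "User"; }
            public List<Long> search() { return List.of(1L, 2L); }
        };
        // ... and offers both sub-data types without further code.
        System.out.println(subDataTypesOf(activeUsers));
        System.out.println(export(activeUsers, "Items created by user"));
    }
}
```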

Short migration guide based on the "AllUsers" data provider SDK example (PIM 7.1)

If you have to migrate custom DataType or DataProvider implementations, first have a look at the following example, which is part of the SDK.

DataTypeItemCreatedByUser, DataTypeUserList

There are minor changes to some of the DataType methods.

old: init()

new: init( RepositoryService repositoryService )

UserDataProvider

The old ReportListModelQueryDataProviderBaseImpl class has been replaced by the new DataProviderByReportResultBaseImpl class.

Base implementation

old: ReportListModelQueryDataProviderBaseImpl

new: DataProviderByReportResultBaseImpl

getDataType(), getSourceDataType()

old: DataTypeFactory.getInstance()

new: ExportComponent.getDataTypeFactory()

getSubDataTypes()

We have to delete this method because sub-data types are now contributed at the com.heiler.ppm.texttemplate.core.exportListModelProviders extension point.

However, we cannot use a generic algorithm, since there is no EntityRelationHandler for items and users. That is why we have to implement a new ListModelProvider.

New ItemsOfUsersListModelProvider

We have to create a new list model provider. Since we have to get referenced objects of another root entity, we'll extend the GenericReferencedObjectsListModelProvider class:

The getItems() method should be copied to the list model provider. It's needed to get the items of the master catalog.

Everything else is handled by the superclass implementation.

Don't forget to contribute the new list model provider:

```xml
<extension point="com.heiler.ppm.std.core.listModelProviders">
   <provider
         entityIdentifier="Article"
         identifier="ItemsCreatedByUsers"
         listModelProvider="com.heiler.ppm.customizing.export.core.dataprovider.ItemsOfUsersListModelProvider"
         name="ItemsOfUsersListModelProvider"
         priority="100">
   </provider>
</extension>
```

You also have to map the list model provider to the sub-data type (this mapping is called an export list model provider):

```xml
<extension point="com.heiler.ppm.texttemplate.core.exportListModelProviders">
   <exportListModelProvider
         dataType="com.heiler.ppm.customizing.export.core.datatype.DataTypeItemCreatedByUser"
         name="Items created by user"
         priority="100"
         rootEntityIdentifier="User"
         transitionProxyFieldType="ArticleLogType.CreationUser"
         virtualEntityIdentifier="ItemsCreatedByUsers">
   </exportListModelProvider>
</extension>
```

Note: the value "ItemsCreatedByUsers" of the identifier attribute in the list model provider contribution is used as the virtualEntityIdentifier of the export list model provider contribution.
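Because the two contributions are linked only by matching identifier strings, a mismatch is easy to introduce. The hypothetical helper below, which is not part of the PIM SDK, uses the JDK's DOM parser to list every virtualEntityIdentifier in a plugin.xml that has no matching identifier among the providers contributed to com.heiler.ppm.std.core.listModelProviders. It checks only this one link.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

public class ContributionCheck {

    static final String LIST_MODEL_POINT = "com.heiler.ppm.std.core.listModelProviders";

    /** Returns each virtualEntityIdentifier that matches no list model provider "identifier". */
    static List<String> unmatchedVirtualEntities(String pluginXml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(pluginXml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse plugin.xml content", e);
        }

        // Collect identifiers of <provider> elements contributed to the listModelProviders extension point.
        Set<String> providerIds = new HashSet<>();
        NodeList providers = doc.getElementsByTagName("provider");
        for (int i = 0; i < providers.getLength(); i++) {
            Element provider = (Element) providers.item(i);
            if (provider.getParentNode() instanceof Element extension
                    && LIST_MODEL_POINT.equals(extension.getAttribute("point"))) {
                providerIds.add(provider.getAttribute("identifier"));
            }
        }

        // Every export list model provider must point at one of those identifiers.
        List<String> unmatched = new ArrayList<>();
        NodeList exportProviders = doc.getElementsByTagName("exportListModelProvider");
        for (int i = 0; i < exportProviders.getLength(); i++) {
            String virtualId = ((Element) exportProviders.item(i)).getAttribute("virtualEntityIdentifier");
            if (!providerIds.contains(virtualId)) {
                unmatched.add(virtualId);
            }
        }
        return unmatched;
    }

    public static void main(String[] args) {
        String pluginXml = """
            <plugin>
              <extension point="com.heiler.ppm.std.core.listModelProviders">
                <provider entityIdentifier="Article" identifier="ItemsCreatedByUsers" priority="100"/>
              </extension>
              <extension point="com.heiler.ppm.texttemplate.core.exportListModelProviders">
                <exportListModelProvider rootEntityIdentifier="User" virtualEntityIdentifier="ItemsCreatedByUsers"/>
              </extension>
            </plugin>
            """;
        System.out.println(unmatchedVirtualEntities(pluginXml)); // []
    }
}
```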

queryData()

This method is no longer needed; the new list model provider retrieves the data.

AASGAM handling - changes in PIM 7

Changes to the AASGAM business model require changes to data field access if you have implemented custom data providers that use the content of StructureAttribute fields.

old: the StructureAttribute fields are read directly from the AASGAM entity

```java
Field newField = repositoryService.getFieldByIdentifier( "ArticleAttributeStructureGroupAttributeMap.StructureAttributeId" );
moreFields.add( new Pair( newField, null ) );
newField = repositoryService.getFieldByIdentifier( "ArticleAttributeStructureGroupAttributeMap.StructureAttributeIdentifier" );
moreFields.add( new Pair( newField, null ) );
// ...

// prepare params for query
final FieldsAndFieldPaths buildFieldPaths = buildFieldPaths( entityType, fields );
FieldPath[] fieldPathsArray = buildFieldPaths.getFieldPaths();
// ...

// execute query
this.query.setEntityTypeIdentifier( entityType.getIdentifier() );
listModel = this.query.query( fieldPathsArray, -1, getRevisionToken( null ), additionalInfoMap );
```

new: the StructureFeature fields are reached via a transition over ArticleAttributeStructureGroupAttributeMap.StructureAttribute

```java
Field aasgamStructureAttributeTransitionField = repositoryService.getFieldByIdentifier( "ArticleAttributeStructureGroupAttributeMap.StructureAttribute" );
Field structureAttributeId = repositoryService.getFieldByIdentifier( "StructureFeature.Id" );
Field structureAttributeIdentifier = repositoryService.getFieldByIdentifier( "StructureFeature.Identifier" );

// build transition aasgam.StructureAttribute --> StructureFeature.Id
moreFields.add( new Pair( structureAttributeId, new Pair( aasgamStructureAttributeTransitionField, null ) ) );

// build transition aasgam.StructureAttribute --> StructureFeature.Identifier
moreFields.add( new Pair( structureAttributeIdentifier, new Pair( aasgamStructureAttributeTransitionField, null ) ) );
// ...

// prepare query ...
// execute query ...
```
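One way to read the nested Pair arguments in the AASGAM code is as a field plus the chain of transitions that leads to it, terminated by null. The sketch below encodes that reading with a hypothetical String-based Pair record (not the PIM Pair class) and renders the direct field and the transition variant used in the code; treating deeper nesting as earlier transitions is an assumption.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class FieldPathSketch {

    /** Stand-in for the Pair class used above: (field, rest of the path or null). */
    record Pair(String first, Pair second) {}

    /** Renders a (field, transition chain) pair as "transition --> ... --> field". */
    static String describe(Pair fieldWithPath) {
        Deque<String> steps = new ArrayDeque<>();
        for (Pair p = fieldWithPath; p != null; p = p.second()) {
            steps.addFirst(p.first()); // assumption: deeper nesting means an earlier transition
        }
        return String.join(" --> ", steps);
    }

    public static void main(String[] args) {
        Pair direct = new Pair("ArticleAttributeStructureGroupAttributeMap.StructureAttributeId", null);
        Pair viaTransition = new Pair("StructureFeature.Id",
                new Pair("ArticleAttributeStructureGroupAttributeMap.StructureAttribute", null));
        System.out.println(describe(direct));
        System.out.println(describe(viaTransition));
    }
}
```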