Communication

The GDSN Accelerator communicates with the corresponding 1WS data pool using a file-based choreography. If you use another GDSN-certified data pool, this may be different. The following chapters therefore cover adjustments to the choreography supported by the GDSN Accelerator as well as hints for using a different certified data pool. We assume that you already have a GLN (Global Location Number) and all connection data needed for the data pool.

Communication to the 1WS data pools

If you want to send more or less GDSN-related information to the data pool in the data source scenario, or receive more or less GDSN-related information from the data pool in the data recipient scenario, you need to adjust the communication mechanism accordingly.

This chapter explains how to make those adjustments and what to consider.

Resources

These sections refer to other documentation. Please make sure that you are familiar with it.

"Informatica MDM - Product 360 - Desktop_ <Version>_UserManual_en.pdf" which will be called "Product 360 User Manual" in the following sections.

Adjust or generate an import mapping (Data Recipient)

In the data recipient scenario, product information is sent as a CIN file from the data pool to Product 360 via different components of the GDSN Accelerator. With the IM connection, the received CIN file is forwarded to the hotfolder of Product 360 as it is. With the DSE connection, hierarchical product information is flattened before the file is transferred to the hotfolder of Product 360. For this reason, you need to use the XML as it arrives in the hotfolder (that is, the flattened XML for DSE) to create the import mapping.
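To illustrate what "flattened" means here, the following minimal Python sketch turns a nested hierarchy into one record per item, each with a reference to its parent. The class `TradeItem`, the field names, and the sample identifiers are hypothetical; the actual flattening is performed by the GDSN Accelerator components and may produce a different structure.

```python
from dataclasses import dataclass, field


@dataclass
class TradeItem:
    """Hypothetical, simplified trade item in a packaging hierarchy."""
    gtin: str
    children: list = field(default_factory=list)


def flatten(item, parent_gtin=None):
    """Return one flat record per item (depth-first), each pointing to its parent."""
    records = [{"gtin": item.gtin, "parent_gtin": parent_gtin}]
    for child in item.children:
        records.extend(flatten(child, item.gtin))
    return records


# Hypothetical three-level hierarchy: pallet -> case -> each
pallet = TradeItem("PALLET-1", [TradeItem("CASE-1", [TradeItem("EACH-1")])])
for record in flatten(pallet):
    print(record)
```

Each record can then be handled like an independent row, which is the form an import mapping works with most easily.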

To import the CIN file correctly, you should have a working 1WS pool connection through which you can receive an example CIN file. If you do not have a working connection, you can simulate the process through B2B with an XML file that you generated yourself. The chapter "Data import" of the "Product 360 User Manual" describes the Import perspective of Product 360 and the functions available for defining an import mapping. With this knowledge you will be able to define the import mapping you need.

Please also see the chapters "Data validation" and "Testing" of this documentation.

Example

For a better understanding, assume that we have to add the mapping of a new GDSN module. For this example we use the "MicrobiologicInformationModule" of the IM data pool.

Pick up a CIN file from the hotfolder of Product 360 which contains product information for the "MicrobiologicInformationModule".

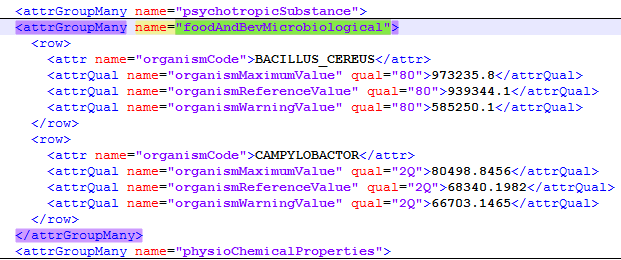

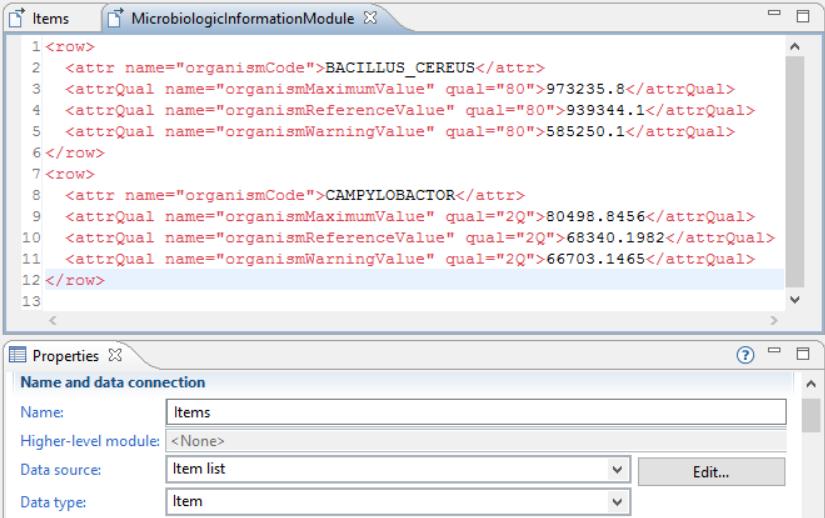

The product information could look like this:

Create a new import project with the XML file of step 1 in the Import perspective of Product 360.

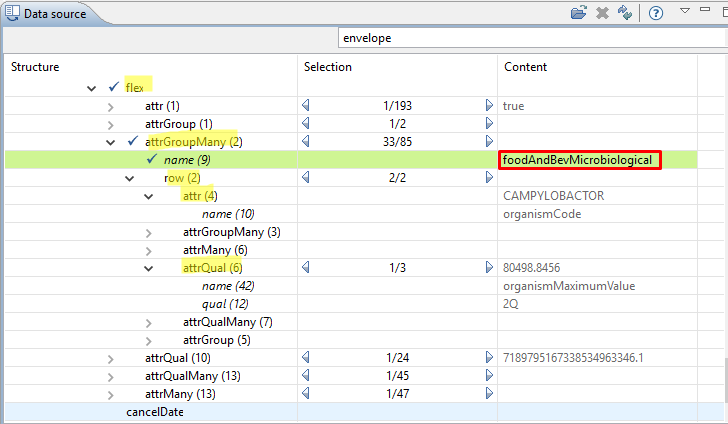

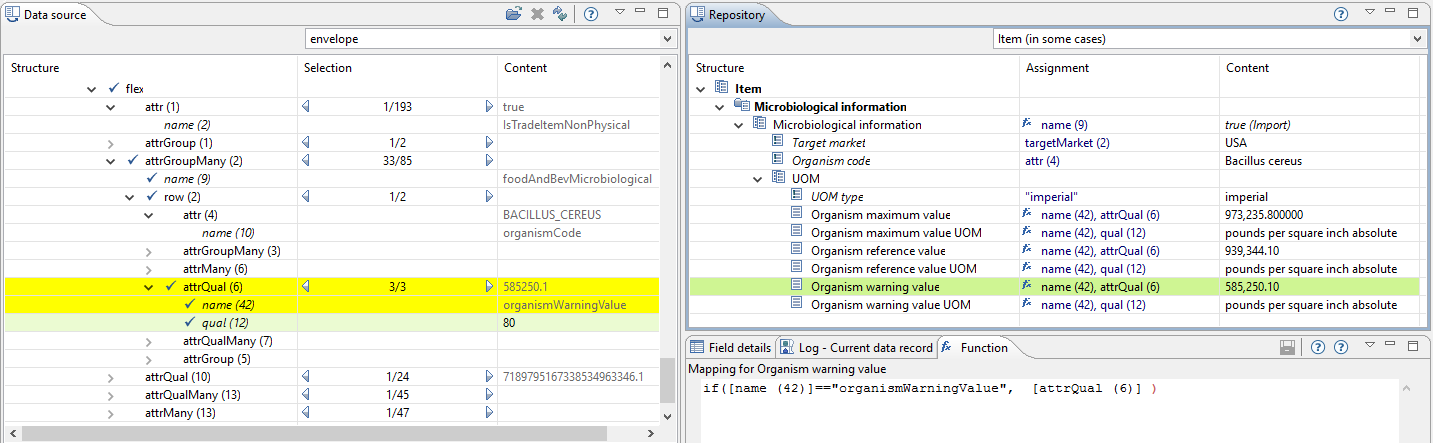

Navigate in the Data Source view to the "foodAndBevMicrobiological" flex attributes. The XML path has been looked up in the chapter "Analyze requirements".

Map the fields to the Product 360 data model accordingly.

Execute the import and check whether the product information is available in Product 360 as expected.

Execute the complete GDSN Accelerator workflow for receiving a CIN.
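Before building the mapping, it can help to confirm where a module sits inside the received file. The following Python sketch lists the path of every element with a given name. The sample document is a hypothetical, heavily simplified stand-in for a CIN file; only the element name "foodAndBevMicrobiological" is taken from this chapter, all other element names and values are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified stand-in for a received CIN file.
SAMPLE = """
<catalogueItemNotification>
  <tradeItem>
    <gtin>00000000000000</gtin>
    <flex>
      <foodAndBevMicrobiological>
        <organism>EXAMPLE</organism>
      </foodAndBevMicrobiological>
    </flex>
  </tradeItem>
</catalogueItemNotification>
"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def find_paths(element, wanted, prefix=""):
    """Return the slash-separated paths of all elements whose local name is `wanted`."""
    path = f"{prefix}/{local_name(element.tag)}"
    found = [path] if local_name(element.tag) == wanted else []
    for child in element:
        found.extend(find_paths(child, wanted, path))
    return found


root = ET.fromstring(SAMPLE)
for path in find_paths(root, "foodAndBevMicrobiological"):
    print(path)
```

Run against a real CIN file (for example with `ET.parse(file_name).getroot()`), the printed paths can be compared with the path looked up in the chapter "Analyze requirements".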

Adjust the export template (Data Source)

This chapter explains how to adjust the export format template in order to send product information to the 1WS pool with the GDSN Accelerator. To see in which XML structure the product information has to be sent, you need an XML example. You can use the XSD files for this or download an example file from 1WS. Please note that the XML path for the DSE connection has an additional "Article" tag, because a flattened hierarchy is exported, which is transformed back into a hierarchy before it is sent to the data pool.

You can use various third-party tools to generate an XML file from the provided (or downloaded) XSD files and use it as a basis for creating an import mapping. Usually, however, you can only reuse the generated structure and need to modify the attribute values so that they are valid for GDSN.

You can find general information about how to work with the Product 360 export in the chapter "Export" of the "Product 360 User Manual". Please also see the export-related parts of the chapters "Data validation" and "Testing" of this documentation.

Example

As an example, we assume that we have added the GDSN module "MicrobiologicInformationModule" to the Product 360 data model and want to send this product information to the 1WS pool by using the IM connection.

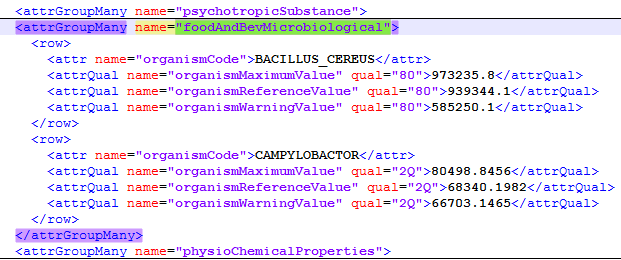

Get an XML snippet of the "MicrobiologicInformationModule" in the "CatalogueRequest" message and ensure that it contains valid data. With IM, GDSN modules are usually mapped to "flex" attributes.

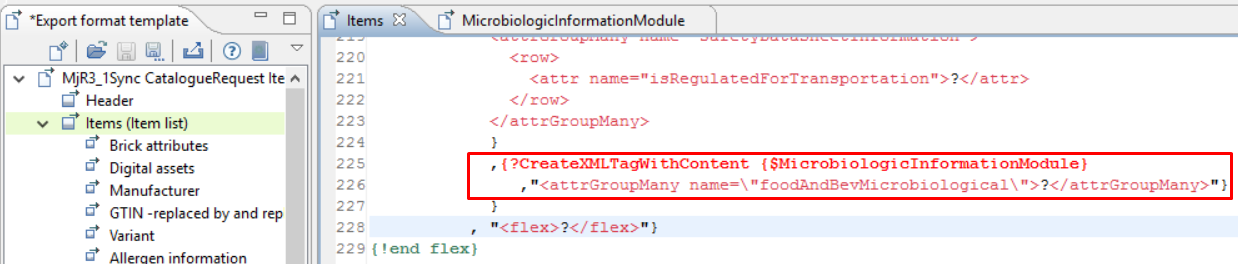

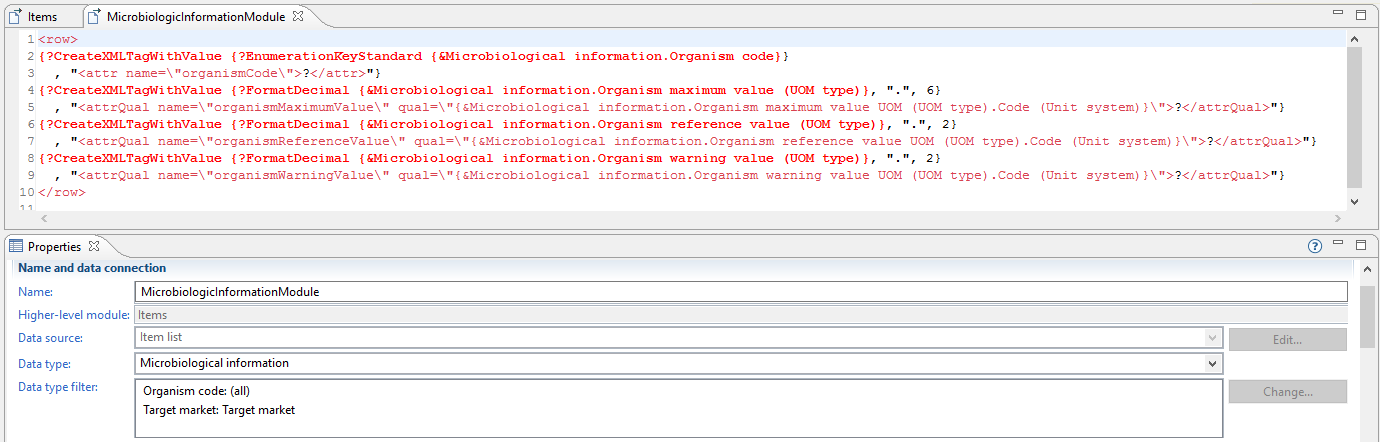

Create a sub-module for the GDSN "MicrobiologicInformationModule" in the export format template and copy the XML snippet in it.

Call the sub-module in the "Items" module of the export format template. Please note that "flex" attributes have no required order, so the call can be added at the end.

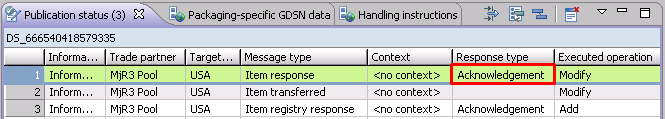

Execute an export against the data pool with a single item. Check that the generated file actually contains the product information for the GDSN module and that the response from the data pool does not report errors.

Replace the static values with the field values and test again with a single item against the data pool. Now adjust the data type of the new sub-module.

Test the template against the data pool with several items as explained in chapter "Testing".

Please keep in mind that the maximum depth of the hierarchy read by the hierarchy data sources in the export is limited to 10 levels. If a hierarchy has more levels, the standard assumes that it contains an endless loop.
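To find hierarchies that would run into this limit before exporting them, a check along the following lines can be used. This is a minimal Python sketch: the dictionary `children_by_gtin` (parent identifier to list of child identifiers) is a hypothetical representation of the hierarchy, not a Product 360 API, and only the limit of 10 levels is taken from the text above.

```python
MAX_DEPTH = 10  # limit of the hierarchy data sources as stated above


def hierarchy_depth(children_by_gtin, root):
    """Return the number of levels of the hierarchy starting at `root`.

    Raises ValueError if an item appears twice on one path (a genuine loop).
    """
    def walk(node, path):
        if node in path:
            raise ValueError(f"cycle detected at {node}")
        children = children_by_gtin.get(node, [])
        if not children:
            return 1
        return 1 + max(walk(child, path | {node}) for child in children)

    return walk(root, frozenset())


# Hypothetical three-level hierarchy
hierarchy = {"PALLET": ["CASE"], "CASE": ["EACH"]}
depth = hierarchy_depth(hierarchy, "PALLET")
print(depth, "levels;", "within limit" if depth <= MAX_DEPTH else "exceeds limit")
```

The sketch distinguishes a genuine loop from a hierarchy that is merely deeper than the limit, which the export itself does not do according to the description above.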

Adjustments to B2B Data Exchange GDSN Accelerator

GDSN integration requirements can vary from customer to customer. These requirements should be considered carefully in order to understand whether and to what extent the B2B Data Exchange GDSN Accelerator can support them.

On the one hand, a new requirement or a change to the GDSN data model might require a simple adjustment to one or more of the B2B Data Exchange GDSN Accelerator components. Moreover, sometimes no adjustments will be needed at all. On the other hand, there are cases in which a significant effort is required in order to adjust the B2B Data Exchange GDSN Accelerator to meet the new requirement or change.

This section describes some of the common use cases and their implications for the B2B Data Exchange GDSN Accelerator:

Q: How do XSD changes in modules or fields affect B2B Data Exchange GDSN Accelerator?

A: Let's divide the question into different use cases:

If a new module is added to the GDSN data model → an updated XSD with the new module should be deployed to the appropriate B2B DT Services.

If a field in the XSD is changed → an updated XSD with the changed field should be deployed to the appropriate B2B DT Services.

If a new field of an existing module is added in Product 360 without XSD change → no change required in B2B.

If an existing field is removed in Product 360 without XSD change → no change required in B2B.

In general, whenever a requirement leads to a change in any of the XSDs that are used in the B2B Data Exchange GDSN Accelerator, the updated XSDs should be deployed to the appropriate B2B DT Services.

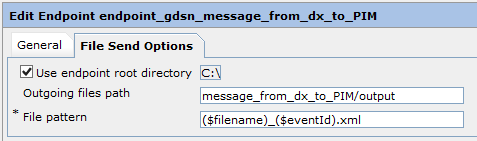
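To decide which of the cases above applies, it can be useful to compare the old and the new version of an XSD. The following Python sketch reports element names that were added or removed. The two inline schemas are hypothetical examples, and the comparison only looks at element names; it does not detect changed types, cardinalities, or enumerations.

```python
import xml.etree.ElementTree as ET

XS = "{http://www.w3.org/2001/XMLSchema}"

# Hypothetical old and new versions of a schema
OLD_XSD = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="existingField"/>
</xs:schema>"""
NEW_XSD = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="existingField"/>
  <xs:element name="newField"/>
</xs:schema>"""


def declared_elements(xsd_text):
    """Return the names of all xs:element declarations that carry a name attribute."""
    root = ET.fromstring(xsd_text)
    return {el.get("name") for el in root.iter(f"{XS}element") if el.get("name")}


def xsd_diff(old_xsd, new_xsd):
    """Return (added, removed) element names between two XSD versions."""
    old, new = declared_elements(old_xsd), declared_elements(new_xsd)
    return sorted(new - old), sorted(old - new)


added, removed = xsd_diff(OLD_XSD, NEW_XSD)
print("added:", added, "removed:", removed)
```

If both lists are empty, the change may still affect types or valid values, so an empty result alone does not prove that no deployment is needed.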

Q: In case there is a requirement to specify or parameterize the file name of incoming files from the data pool, how can it be achieved?

A: It depends on what kind of change or parameterization is required. In general, the file name can be specified as part of the DX endpoint properties. If the change adds a fixed value or a parameter that is already used in the current implementation, it can be achieved by adding the value or parameter to the file pattern in the DX endpoint options.

For example, the image below shows how the "eventId" is added to the file name of incoming files from the data pool.

If the parameter is not used in the current implementation, the change requires analysis and development effort: you first need to find out where the required parameter can be captured, and then implement it in the PowerCenter mapping and/or the DT service.

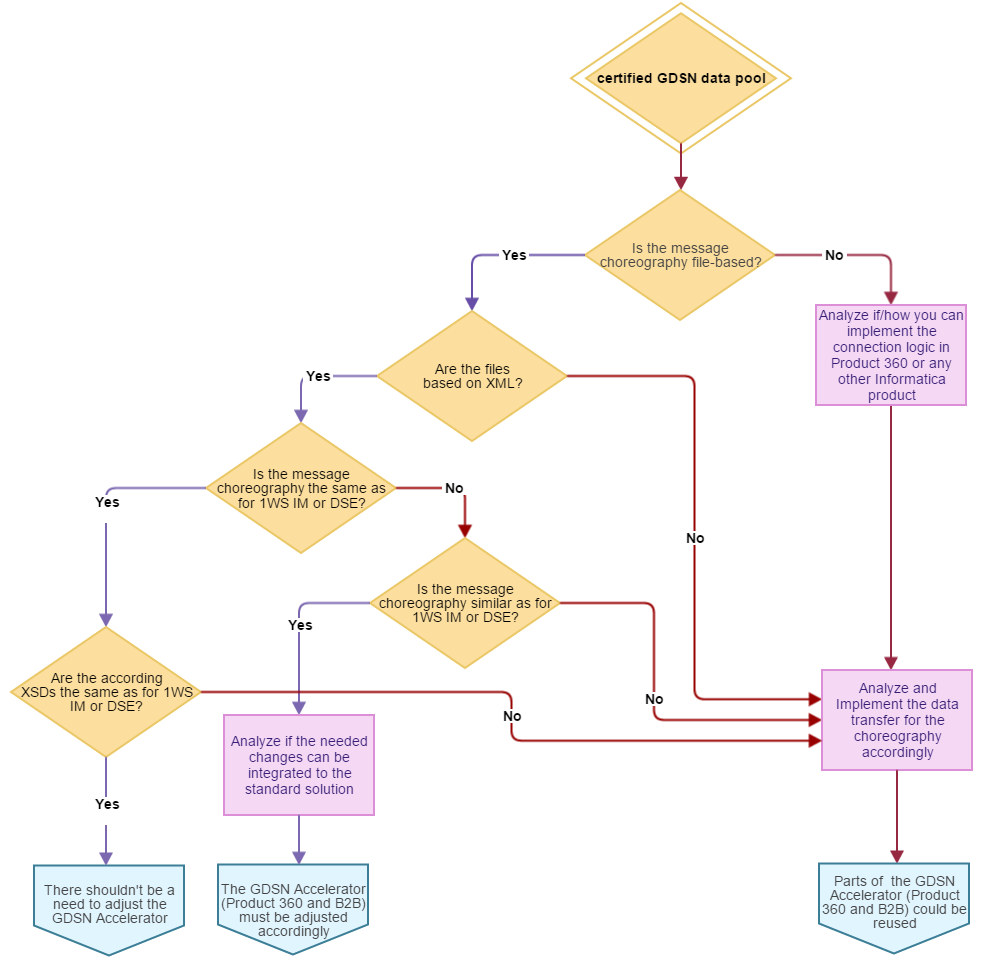
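The difference between the two cases can be pictured as a simple pattern substitution. The following Python sketch uses the `$name` placeholder syntax of Python's `string.Template`, which is only an illustration and not the pattern syntax of the DX endpoint. The function name, the pattern, and the sample values are hypothetical; only the parameter name "eventId" is taken from the example above.

```python
from string import Template


def build_file_name(pattern, parameters):
    """Fill `$name` placeholders; fail loudly if a parameter is not available."""
    try:
        return Template(pattern).substitute(parameters)
    except KeyError as missing:
        raise ValueError(
            f"parameter {missing} is not captured by the current implementation"
        ) from None


# Parameter that is already available: the pattern alone is sufficient.
print(build_file_name("CIN_${eventId}.xml", {"eventId": "12345"}))
```

A placeholder without a matching parameter raises an error here, which corresponds to the case that needs additional analysis and development.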

Communication to other data pools

The GDSN Accelerator is built for the 1WS data pool. 1WS is one of roughly 30 GDSN-certified data pool providers. If you do not use the 1WS data pool, you might be able to reuse parts of the GDSN Accelerator, or all of it, to connect to your data pool. This chapter is less a guide than a collection of questions you have to ask yourself in order to implement the communication with a different pool. You should be familiar with your data pool and its message choreography so that you can evaluate the similarities and differences compared to the 1WS approach and the provided GDSN Accelerator.

The following diagram gives you a high-level overview of what to review regarding the provided GDSN Accelerator and how many changes you can expect.

File-based communication choreography

If you need a file-based communication choreography, chances are high that you can achieve the required behavior by adjusting the GDSN Accelerator on the Product 360 and B2B side. Please note that the implementation can still require considerable effort.

The standard makes certain assumptions about the communication, and these differ between the DSE and the IM solution. You need to ensure that your data pool meets the same conditions.

IM (Data Source)

The standard assumes that all your items are in the master catalog. However, the service API call for the publication status would still work for all messages except the "ItemAuthorizationResponse".

DSE (Data Source)

The standard assumes that all your items are in the master catalog. If this is not the case, the service API call for the publication status will fail.

The standard assumes that the GTIN is unique in the master catalog. If this is not the case, the first item found with this GTIN is used.

The standard assumes that the item hierarchy is exported flattened by Product 360.
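Because a non-unique GTIN silently resolves to the first item found, it is worth checking the uniqueness assumption up front. The following Python sketch groups item identifiers by GTIN and reports every GTIN that is used more than once. The input format, a list of `(item_id, gtin)` pairs taken for example from an export of the master catalog, and the sample values are assumptions.

```python
from collections import defaultdict


def duplicate_gtins(items):
    """Return {gtin: [item ids]} for every GTIN used by more than one item."""
    ids_by_gtin = defaultdict(list)
    for item_id, gtin in items:
        ids_by_gtin[gtin].append(item_id)
    return {gtin: ids for gtin, ids in ids_by_gtin.items() if len(ids) > 1}


# Hypothetical extract of the master catalog
catalog = [("item-1", "GTIN-A"), ("item-2", "GTIN-B"), ("item-3", "GTIN-A")]
print(duplicate_gtins(catalog))
```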

DSE (Data Recipient)

The standard assumes that the GDSN information does not arrive in Product 360 in hierarchical form. It must be flattened before it can be imported.

B2B Data Exchange (Communication protocols)

The Informatica MFT product is responsible for the connection to the data pool. The most common communication protocol is AS2, but MFT supports many other protocols. You need to clarify with the relevant data pool which file transfer protocol should be used to send files to it, and then configure MFT accordingly. The communication protocol has no impact on the B2B Data Exchange and PowerCenter implementation, only on MFT.

General checks

Are the files used for the communication based on XML? If not, you have to create the corresponding import mapping or export format template on your own.

Is the communication based on the same or similar XSD files as for 1WS IM or DSE? If they are similar, you can adjust the corresponding import mapping or export format template of the GDSN Accelerator.

Are the valid value lists and the unit system the same or similar as those for 1WS IM or DSE? If similar, then you can extend the enumerations in the repository.

Are the needed data fields qualified as they should be?

Do you need further data quality checks?

Do the export and import functions that were added for the GDSN Accelerator fit your needs as well?
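The check of the valid value lists can be prepared with a simple set comparison. The following Python sketch compares the codes of one enumeration in the repository with the codes your data pool expects. The function name and the sample codes are hypothetical; how the two lists are obtained depends on your repository and on the documentation of your data pool.

```python
def compare_code_lists(repository_codes, pool_codes):
    """Compare one enumeration of the repository with the codes of the data pool."""
    repository, pool = set(repository_codes), set(pool_codes)
    return {
        "missing_in_repository": sorted(pool - repository),
        "not_used_by_pool": sorted(repository - pool),
    }


# Hypothetical unit codes
print(compare_code_lists(["KGM", "GRM"], ["KGM", "LTR"]))
```

Codes listed under `missing_in_repository` are candidates for extending the enumeration, while codes under `not_used_by_pool` may have to be excluded by a validation.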

Different communication choreography

First, you need to analyze how the communication choreography can be implemented. You might need to implement a new view in Product 360 to handle your specific data connection, or you can consider using another Informatica product for the communication. Next, you need to take care of the data transfer that has to take place within the defined communication choreography. It is very likely that this choreography differs completely from the one used by the GDSN Accelerator.

Check if the standard enumerations in the repository match the requirements of your GDSN certified data pool.

Check if the standard repository fields match the requirements of your GDSN certified data pool.

Check which target markets you need.

Check if the standard data quality checks of the GDSN Accelerator still fit your needs.

Check which Product 360 components can support your requirements.

Check if an Informatica product can help you with your requirements.

Data Source

How can a user see the current status of an item or hierarchy in your GDSN certified data pool?

Can you modify or correct the data which is already in your GDSN certified data pool?

How can you create an automatic message process?

Data Recipient

How can you import the data of your GDSN certified data pool?

How can you provide feedback on the received data?

Example Electronic Commerce Council of Canada Network Services (ECCnet) - Recipient

This example analyzes the ECCnet data pool for the data recipient scenario and describes the changes you need to consider.

Connection logic and data transfer

In contrast to the GDSN Accelerator, which uses a file transfer via B2B, the communication with ECCnet is based on SOAP calls. In this case, the export mechanism for sending data to the pool and the import mechanism for receiving data from the pool cannot be used, and the following two questions have to be answered:

Source scenario: How to collect the data and send it to the pool?

Recipient scenario: How to receive data from the pool and process the data?

In the recipient scenario, the differences in the message choreography helped, as described in the next section.

For ECCnet, the "import" of the data was implemented with item processors that read the data from the SOAP call and write it to the detailModels using put commands.

Another point that came up while implementing the ECCnet Accelerator was data transformation. Think about units, for example: the data pool sends a string-based code, but the data model (detailModel) stores a UnitProxy. The GDSN Accelerator makes use of the import and export components, which provide some built-in transformations that can be extended with import and export functions. You need to find a place for these transformations in your specific data processing.
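The unit example can be sketched as a lookup that translates the code sent by the pool into the object stored in the data model. The following Python sketch is an illustration only: the class `Unit` is a hypothetical stand-in for the unit object of the data model, and the mapping table and its codes are assumptions, not the unit system of ECCnet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    """Hypothetical stand-in for the unit object stored in the data model."""
    identifier: str


# Hypothetical mapping from data pool unit codes to internal units
UNITS_BY_POOL_CODE = {"KGM": Unit("kilogram"), "GRM": Unit("gram")}


def to_unit(pool_code):
    """Translate a string-based unit code into a unit object; reject unknown codes."""
    try:
        return UNITS_BY_POOL_CODE[pool_code.strip().upper()]
    except KeyError:
        raise ValueError(f"no unit configured for pool code {pool_code!r}") from None


print(to_unit("kgm"))
```

Rejecting unknown codes explicitly, instead of storing an empty unit, makes gaps in the mapping visible as soon as the first affected item is processed.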

Since we want to implement the ECCnet data recipient scenario, we assume that we receive valid data and do not need to care about (DQ-based) validations. However, if you need validations (in the source scenario, for example), you might be able to reuse existing DQ rule configurations. In general, validations have to be analyzed and the required ones have to be created.

Message choreography

The ECCnet choreography differs from the assumed choreography of the GDSN Accelerator. ECCnet uses a pull mechanism, while the GDSN Accelerator assumes a push mechanism.

In ECCnet, you first search for the products you want to get into your system. This requires a search UI as well as an implementation of the corresponding calls to the pool. From the search result you can "subscribe" to items. As a result you receive the data, and the data pool remembers that you have it. However, this does not mean that you automatically receive updates after an item has changed in the data pool. Remember that ECCnet uses a pull mechanism: when you want to update the data in your system, you have to actively trigger a call to the data pool. This action needs a UI, and tasks or workflows might come in handy.
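The pull mechanism boils down to a refresh that is triggered from your side for all subscribed items. The following Python sketch shows the control flow only. The callables `fetch_item` and `store_item` are hypothetical placeholders for the call to the data pool and for writing into the local data model; they are not part of ECCnet or Product 360.

```python
def refresh_subscribed_items(subscribed_gtins, fetch_item, store_item):
    """Pull the current state of every subscribed item and store it locally.

    Returns the GTINs that could not be refreshed.
    """
    failed = []
    for gtin in subscribed_gtins:
        try:
            store_item(gtin, fetch_item(gtin))
        except Exception:  # keep going so that one bad item does not block the rest
            failed.append(gtin)
    return failed


# Hypothetical in-memory stand-ins for the data pool and the local system
pool = {"GTIN-A": {"description": "updated"}}
local = {}
print(refresh_subscribed_items(["GTIN-A", "GTIN-B"], pool.__getitem__, local.__setitem__))
```

Such a function could be called from a UI action or from a scheduled task; the list of failed GTINs gives the user something to follow up on.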

In GDSN, the requirement is to keep track of the messages that were sent and received. Additionally, you want to know if something went wrong while a message was processed by B2B. In ECCnet there is much less information: an item can be marked as created, updated, or deleted in the context of ECCnet. That is the reason why ECCnet does not reuse the PublicationStatus but creates additional ArticleLog entries instead.

Reusable parts

Regarding the communication, nothing from the GDSN Accelerator can be reused.

ECCnet provides a mapping from ECCnet attributes to GDSN attributes. Therefore the major part of the data model and the maintenance UI can be reused. However, this does not mean that an analysis of the data model is unnecessary. There are many small differences, such as field lengths and sometimes data types, which have to be considered and for which the data model has to be adjusted. For example, ECCnet only supports the Canadian target market, so all target market logical keys get the default value "Canada" and are deactivated. In addition, different unit codes make a new unit system for ECCnet necessary.