Testing

There are several ways to verify that your changes are correct. This chapter describes what your test data should look like in order to test the correct behavior in Product 360. The test data can be used to test different modules of Product 360, such as import, maintenance functionality, data quality checks, and export.

Test data

The test data should cover various data constellations. Therefore, always create the data according to the following specifications:

Always test with several datasets of each entity of your created/adjusted data model

Always test limits of your created/adjusted data model

Always test with both valid and invalid data (also known as good and bad test data). Check that the invalid test data leads to the expected severity (error/warning/info).

Always create some "complete" items, that is, items containing all data you want to send to the GDSN pool.

You can usually reuse the test data for testing all modules of Product 360, with the exception of the invalid test data.

If you add a remarks field to your test data, you can note the expected result in it.

Test data model

The chapter "Data model" describes how to add or adjust GDSN modules and their fields in Product 360 and includes a "Data model check list". This check list ensures a correctly defined data model. It also checks whether the changes in the Product 360 data model are visible to the generic functionality of Product 360. Create test data that allows exhaustive testing of the items on this check list.

For example, you can create an Excel file as the import file, as shown below for the fields Packaging type, Packaging feature code, Usable product volume and Usable product volume UOM of the sub entity ArticlePackaging, which represents the GDSN module PackagingInformationModule:

| Item no. | Target market (LK) (Enumeration) | Packaging type (LK) (Enumeration) | Packaging refuse obligation name (String) | UOM type (LK) (Enumeration) | Usable product volume (Decimal value) | Usable product volume UOM (Enumeration) | Remarks |
| --- | --- | --- | --- | --- | --- | --- | --- |
| TestPackInfoMod_1 | US | AA | obligation name | IMPERIAL | 1001.101 | 4G | Should pass |
| TestPackInfoMod_1 | US | AA | obligation name | IMPERIAL | 123456.12345 | | Should pass, but data quality check will fail because of missing UOM |
| TestPackInfoMod_1 | US | CNG | obligation name | IMPERIAL | 9999999999.999999 | LTR | Should pass |
| TestPackInfoMod_1 | US | CNG | | METRIC | 1253.6264 | LTR | Should pass |
| TestPackInfoMod_1 | ES | PUG | <String of 200 characters> | | | | Should pass |
| TestPackInfoMod_2 | US | AA | | IMPERIAL | 0 | | Should pass, but data quality check will fail because of missing UOM |
| TestPackInfoMod_3 | BB | ABC | obligation name | | | | Should fail due to not existent Packaging type |
| TestPackInfoMod_3 | BB | STR | obligation name | METRIC | 10000000000.000000 | LTR | Should fail due to a range failure at Usable product volume |
| TestPackInfoMod_3 | BB | STR | <String of 201 characters> | | | | Should fail due to a range failure at Packaging obligation name |
| TestPackInfoMod_3 | BB | STR | obligation name | TEST | 234.3 | 4G | Should fail due to not existent UOM type |

(Note: this table does not contain all scenarios to test)
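Limit cases such as the 200 and 201 character strings are easier to generate than to type. The following is a minimal sketch, assuming that a CSV file is an acceptable import source in place of Excel and that the limits shown in the table apply to your data model. The shortened column names and the helpers `build_boundary_rows` and `write_rows` are illustrative and not part of Product 360.

```python
import csv
import io

# Shortened column names based on the example table; adjust to your import mapping.
COLUMNS = [
    "Item no.", "Target market", "Packaging type",
    "Packaging refuse obligation name", "UOM type",
    "Usable product volume", "Usable product volume UOM", "Remarks",
]

MAX_NAME_LENGTH = 200                   # string limit used in the example table
MAX_VOLUME = "9999999999.999999"        # largest decimal value used in the table
OVER_MAX_VOLUME = "10000000000.000000"  # first value beyond that limit


def build_boundary_rows():
    """Return rows that sit exactly on a limit and rows just beyond it."""
    at_limit = "x" * MAX_NAME_LENGTH
    over_limit = "x" * (MAX_NAME_LENGTH + 1)
    return [
        ["TestPackInfoMod_1", "ES", "PUG", at_limit, "", "", "", "Should pass"],
        ["TestPackInfoMod_1", "US", "CNG", "obligation name", "IMPERIAL",
         MAX_VOLUME, "LTR", "Should pass"],
        ["TestPackInfoMod_3", "BB", "STR", over_limit, "", "", "",
         "Should fail: obligation name too long"],
        ["TestPackInfoMod_3", "BB", "STR", "obligation name", "METRIC",
         OVER_MAX_VOLUME, "LTR", "Should fail: volume out of range"],
    ]


def write_rows(rows, stream):
    """Write the header and the rows as CSV to any text stream."""
    writer = csv.writer(stream)
    writer.writerow(COLUMNS)
    writer.writerows(rows)


# Demonstration: write to memory; pass an opened file instead to create an import file.
buffer = io.StringIO()
write_rows(build_boundary_rows(), buffer)
print(buffer.getvalue())
```

Keeping the expected outcome in the Remarks column makes it possible to compare it with the import log later.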

If you enter the maximum value "9,999,999,999.999999" for a decimal field, Product 360 actually transforms it to "9,999,999,999.999998".
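The cause of this transformation is not stated here. One plausible explanation, offered purely as an assumption, is that the value passes through a 64-bit binary floating-point number, which cannot represent 9999999999.999999 exactly. The Python sketch below reproduces the same rounding and shows that a decimal type keeps the digits intact. It illustrates the numeric effect only and makes no claim about Product 360 internals.

```python
from decimal import Decimal

literal = "9999999999.999999"

# Parsing into a 64-bit float picks the nearest representable binary value.
as_float = float(literal)
float_text = format(as_float, ".6f")

# A decimal type stores the digits exactly as written.
as_decimal = Decimal(literal)
decimal_text = format(as_decimal, ".6f")

print(float_text)    # 9999999999.999998
print(decimal_text)  # 9999999999.999999
```

When you compare imported values with your test data, allow for this difference at the upper limit.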

Test import

Create an import mapping for your Excel file and execute the import. Ensure that all expected errors appear in the import log. Afterwards, check the created data for correctness.

Test export

Create an export to check whether you can export the data as expected. This also ensures that all fields are visible with the required qualification.

This test is usually not performed with the GDSN XML schemas.

Test Service API

If you are planning to use the Service API for your fields, ensure that you have tested it.

Test data quality (Data source)

This section is only relevant if you created data quality rules or data quality configurations.

Testing created data quality rules

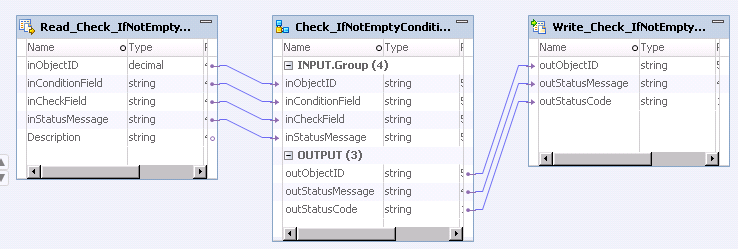

There are many ways to test your data quality rules. For example, you can test them in Informatica Developer. The necessary steps are explained below using the "IfNotEmptyConditionNotEmpty" rule:

1. Create an Excel file that contains all input ports and a description of the expected behavior.

| inObjectID | inConditionField | inCheckField | inStatusMessage | Description |
| --- | --- | --- | --- | --- |
| 10001 | | | GDSN1 | both inputs empty, ok |
| 10002 | | somevalue | GDSN1 | first input empty, ok |
| 10003 | somevalue | | GDSN1 | first input not empty, but second one is empty, error |
| 10004 | somevalue | another | GDSN1 | both inputs not empty, ok |
| 10005 | somevalue | | | first input not empty, but second one is empty, with default error message |
| 10101 | somevalue | | GDSN1 | aggregated, not ok |
| 10101 | somevalue | another | GDSN1 | |

(Note: not all possible test scenarios are covered here)

2. Create an Excel file that contains the expected output after the data quality rule has run.

| outObjectID | outStatusMessage | outStatusCode |
| --- | --- | --- |
| 10001 | No Error | 1 |
| 10002 | No Error | 1 |
| 10003 | No Error | 1 |
| 10004 | GTIN is required but not provided | 0 |
| 10005 | inCheckField must be empty because inConditionField is not empty | 0 |
| 10101 | GTIN is required but not provided | 0 |

3. Create a mapping that sends the Excel file data created in step 1 through your data quality rule.

4. Compare the expected result from step 2 with the actual result of step 3.
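The comparison in the last step can be automated once both result sets are available as rows. The following is a minimal sketch, assuming that the expected and the actual output have been exported (for example to CSV) and loaded as dictionaries keyed by the output port names. The function name `compare_results` and the sample rows are illustrative.

```python
def compare_results(expected, actual):
    """Return readable differences between two result sets.

    Both arguments are lists of dicts with the keys outObjectID,
    outStatusMessage and outStatusCode.
    """
    def index(rows):
        # One output row per object ID is assumed (aggregated results).
        return {
            row["outObjectID"]: (row["outStatusMessage"], row["outStatusCode"])
            for row in rows
        }

    exp, act = index(expected), index(actual)
    differences = []
    for object_id in sorted(exp.keys() | act.keys()):
        if object_id not in act:
            differences.append(f"{object_id}: missing in actual result")
        elif object_id not in exp:
            differences.append(f"{object_id}: unexpected row in actual result")
        elif exp[object_id] != act[object_id]:
            differences.append(
                f"{object_id}: expected {exp[object_id]}, got {act[object_id]}"
            )
    return differences


# Sample rows for demonstration only.
expected_rows = [
    {"outObjectID": "10001", "outStatusMessage": "No Error", "outStatusCode": "1"},
    {"outObjectID": "10101", "outStatusMessage": "GTIN is required but not provided",
     "outStatusCode": "0"},
]
actual_rows = [
    {"outObjectID": "10001", "outStatusMessage": "No Error", "outStatusCode": "1"},
    {"outObjectID": "10101", "outStatusMessage": "No Error", "outStatusCode": "1"},
]

for line in compare_results(expected_rows, actual_rows):
    print(line)
```

An empty list means that the rule behaved as expected for every object ID in the test file.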

Testing created data quality configuration

Depending on your data quality check, you can reuse the data you already created for the data model test, but you might need to extend it with further data constellations.

A recommended way to test if your data quality configuration is working as expected is:

1. Think about all possible data constellations and create your data accordingly.

2. Execute the data quality check for your configuration on the data from step 1.

3. Check whether the actual result matches your expectation.

If the result of the data quality configuration differs from your expectation, check the following:

Are the correct fields mapped to your data quality configuration?

If existent, is the correct qualification of the fields used?

If existent, is the port "InObjectID" mapped?

Did you use the correct data type?

If you use a sub entity of item as the data type, there must be at least one data entry for this sub entity, otherwise the rule is not executed.

If you want to ensure that a specific field of a sub entity contains a value, use the item as the data type instead of the sub entity and qualify the field accordingly. You could also implement a data quality rule that checks the field via the Service API.

Is the data quality rule you used the correct rule for your validation? Check the description of the rule again.

Test against certified GDSN pool (Data source)

Once you have completed all testing and ensured that all data is correct in Product 360, you need to enhance your GDSN export format template accordingly. The chapter "Data validation" already describes how to manage the correct formatting of each field in the export.

You can reuse the test data created for the data model testing, but in addition you have to ensure that all mandatory GDSN fields are maintained. Otherwise, the XSD validation will fail during export.
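Before starting an export, it can help to check the test data for blank mandatory fields. The following is a minimal sketch, assuming the test items are available as dictionaries, for example after reading the import file. It is not an XSD validation, and the list of mandatory fields, the GTIN value and the function name `missing_mandatory_fields` are placeholders; the real set of mandatory fields depends on your GDSN version and data pool.

```python
# Illustrative list only; replace it with the fields your data pool requires.
MANDATORY_FIELDS = ["GTIN", "Target market"]


def missing_mandatory_fields(item, mandatory_fields=MANDATORY_FIELDS):
    """Return the mandatory field names that are absent or blank in an item."""
    missing = []
    for field in mandatory_fields:
        value = item.get(field)
        if value is None or str(value).strip() == "":
            missing.append(field)
    return missing


# Made-up sample items for demonstration.
items = [
    {"Item no.": "TestPackInfoMod_1", "GTIN": "04012345678901", "Target market": "US"},
    {"Item no.": "TestPackInfoMod_2", "GTIN": "", "Target market": "US"},
]

for item in items:
    missing = missing_mandatory_fields(item)
    if missing:
        print(f"{item['Item no.']}: missing {', '.join(missing)}")
```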

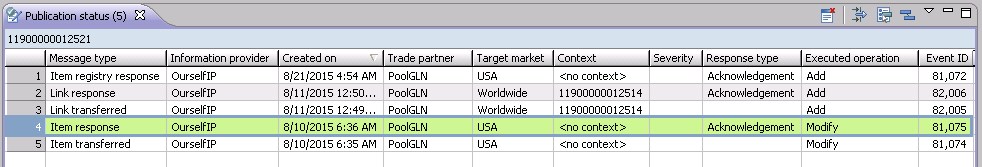

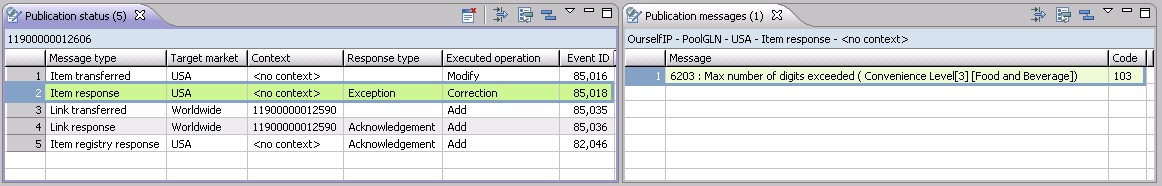

Before sending data to the certified pool, ensure that the data to be exported has been validated. The exported data should be accepted by your data pool. If you use the 1WS data pool, you can verify the correctness of the data in the XML file by opening the "Publication status" view in Product 360. You will see an entry with "Item response" as the message type and the corresponding response type. If the response type is "Acknowledgement", your changes appear to work. Check the exported file to ensure that the data for your changes is actually exported.

If the response type is an exception, something is wrong with your data. Check what exactly the error message says. Sometimes it already helps to inspect the exported XML file for anything unusual.

Always publish your data to the 1WS data pool, because some validations only run during the publish process.

Test against certified GDSN pool (Data recipient)

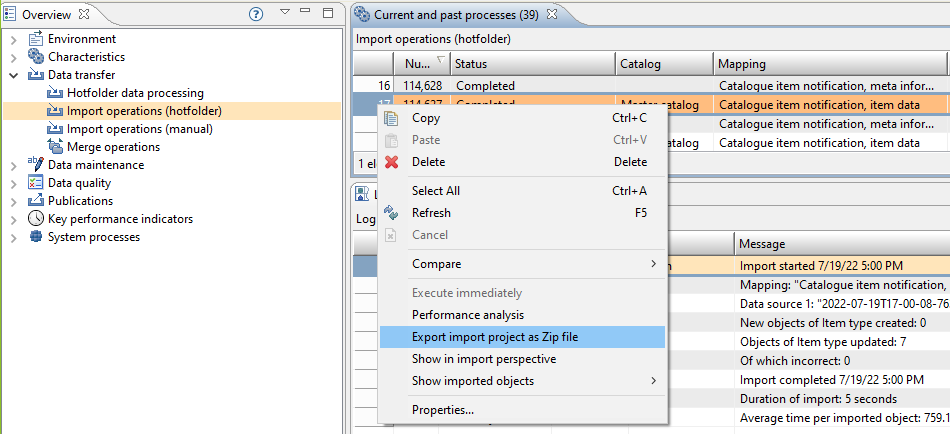

To test the adjusted import mappings containing additional fields, we recommend reimporting a Catalogue Item Notification (CIN) message.

In the process overview, in the node "Import operations (hotfolder)", find an entry that uses the mapping "Catalogue item notification, item data". Download the data source using the context menu entry "Export import project as Zip file".

Open the downloaded data file and check that it contains values for the fields you added to your data model.

Create a new import project using the enhanced mapping and the downloaded data file, and start the import.

Check in the process overview whether the import was successful, and check the imported values in the item UI.
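Checking the downloaded data file for your new fields can be partly automated. The following is a minimal sketch, assuming that the exported ZIP file contains the data file as text (for example XML) and that a plain substring search for the field names is sufficient. The function name `find_fields_in_zip`, the member name `data.xml` and the field names in the demonstration are hypothetical.

```python
import io
import zipfile


def find_fields_in_zip(zip_source, field_names):
    """Map each field name to the archive members whose text contains it."""
    hits = {name: [] for name in field_names}
    with zipfile.ZipFile(zip_source) as archive:
        for member in archive.namelist():
            text = archive.read(member).decode("utf-8", errors="replace")
            for name in field_names:
                if name in text:
                    hits[name].append(member)
    return hits


# Demonstration with an in-memory archive; pass the path of the downloaded
# ZIP file instead when checking a real export.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("data.xml", "<packagingTypeCode>AA</packagingTypeCode>")
buffer.seek(0)

result = find_fields_in_zip(buffer, ["packagingTypeCode", "usableProductVolume"])
print(result)
```

A field with an empty list does not occur anywhere in the archive. A hit only shows that the name is present, so the values themselves still need a manual look.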